2024 State of Reliable AI Survey

Each year, Monte Carlo surveys real data professionals about the state of their data quality. This year, we turned our gaze to the shadow of AI to understand what’s happening right now, how it impacts data quality, and what data professionals are doing about it.

And the results speak for themselves. While responses indicated that nearly 100% of data teams are actively pursuing AI applications, 68% said they weren’t completely confident in the quality of the data that powers them.

Like any data product, when it comes to AI, garbage data in means garbage responses out. The success (and safety) of AI depends on the reliability of the data that powers it. And the results of this year’s survey suggest that most data teams still have some work to do.

The Wakefield Research survey—which polled 200 data leaders and professionals—was commissioned by Monte Carlo in April 2024, and comes as data teams are grappling with the adoption of generative AI.

Among the findings are several statistics that indicate the current state of the AI race and professional sentiment about the technology:

- 100% of data professionals feel pressure from their leadership to implement a GenAI strategy and/or build GenAI products

- 91% of data leaders (VP or above) have built or are currently building a GenAI product

- 82% of respondents rated the potential usefulness of GenAI at least an 8 on a scale of 1-10, but 90% believe their leaders do not have realistic expectations for its technical feasibility or ability to drive business value.

- 84% of respondents indicate that it is the data team’s responsibility to implement a GenAI strategy, versus 12% whose organizations have built dedicated GenAI teams.

While AI is widely expected to be among the most transformative technological advancements of the last decade, these findings suggest a troubling disconnect between data teams and business stakeholders.

Data leaders clearly feel the pressure and responsibility to participate in the GenAI revolution, but some may be forging ahead in spite of more fundamental priorities and, in some cases, against their better judgment.

The State of Reliable AI Infrastructure

Even before the advent of GenAI, organizations were dealing with an exponentially greater volume of data than in decades past. Since adopting GenAI programs, 91% of data leaders report that both applications and the number of critical data sources have increased even further, deepening the complexity and scale of their data estates in the process.

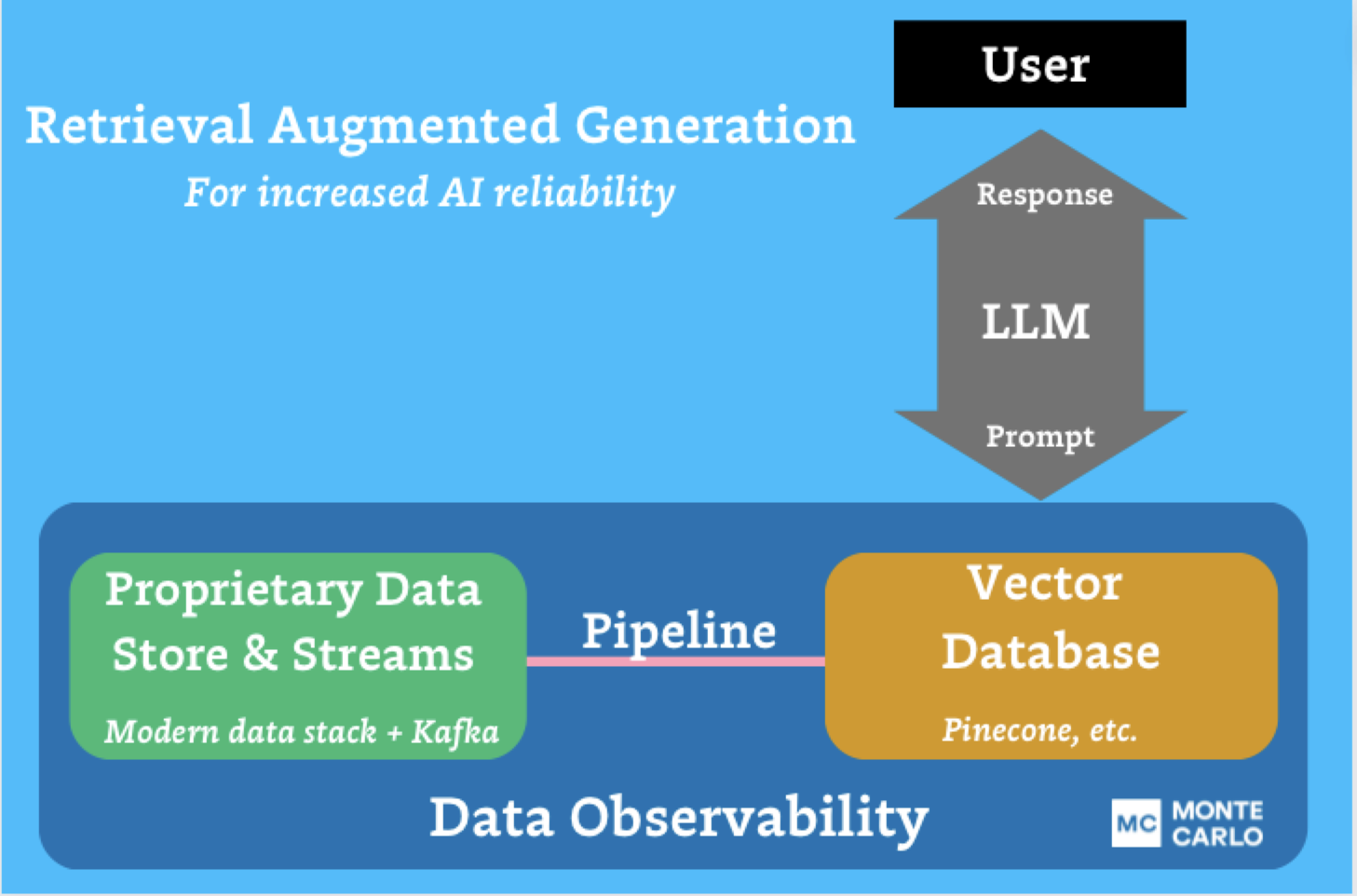

“Data is the lifeblood of all AI – without secure, compliant, and reliable data, enterprise AI initiatives will fail before they get off the ground. Data quality is a critical but often overlooked component of ensuring ethical and accurate models, and the fact that 68% of data leaders surveyed did not feel completely confident in their data reflects the unsung importance of this puzzle piece,” said Lior Solomon, VP of Data, Drata. “The most advanced AI projects will prioritize data reliability at each stage of the model development life cycle, from ingestion in the database to fine-tuning or RAG.”

What’s more, the survey revealed that data teams are using a myriad of approaches to tackle GenAI, suggesting that not only are the volume and complexity of data increasing, but that there’s no one-size-fits-most method for getting these AI models customer-ready.

How data teams are approaching AI:

- 49% building their own LLM

- 49% using model-as-a-service providers like OpenAI or Anthropic

- 48% implementing a retrieval-augmented generation (RAG) architecture

- 48% fine-tuning models-as-a-service or their own LLMs

As the complexity of the AI’s architecture—and the data that powers it—continues to expand, one perennial problem expands with it: data quality issues.

The Key Question: Is Your Data GenAI Ready?

Data quality has always been a challenge for data teams. However, survey results reveal that the introduction of GenAI has exacerbated both the scope and severity of this problem.

Two-thirds of survey respondents reported experiencing a data incident that cost their organization $100,000 or more in the past 6 months. This is a shocking figure when you consider that 70% of data leaders surveyed reported that it takes longer than 4 hours to find a data incident. What’s worse, previous surveys commissioned by Monte Carlo reveal that data teams face, on average, 67 data incidents per month.

This is because while the data estate has been evolving rapidly over the last 24 months, the general approach to data quality has not. Despite the growing sources and applications of data for AI, many respondents still rely on tedious and unreliable data quality methods. In fact, more than half (54%) of data teams that responded depend on manual testing for detection, if they’ve implemented any data quality tactics at all for the data feeding their LLMs.
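For contrast with manual testing, here is a minimal sketch of what an automated check on a batch of records might look like before that batch reaches an LLM pipeline. It is illustrative only and not drawn from the survey or from any Monte Carlo product: the function name `check_batch`, the field names, and the thresholds (a 5% null rate and a 24-hour freshness window) are all assumptions chosen for the example.

```python
from datetime import datetime, timedelta, timezone


def check_batch(rows, required_field, timestamp_field,
                max_null_rate=0.05, max_age=timedelta(hours=24), now=None):
    """Return a list of human-readable issues found in a batch of records.

    Hypothetical helper: thresholds and field names are illustrative defaults.
    """
    now = now or datetime.now(timezone.utc)
    if not rows:
        return ["batch is empty"]

    issues = []

    # Completeness: share of records where the required field is missing or blank.
    null_count = sum(1 for r in rows if r.get(required_field) in (None, ""))
    null_rate = null_count / len(rows)
    if null_rate > max_null_rate:
        issues.append(
            f"{required_field}: null rate {null_rate:.0%} exceeds {max_null_rate:.0%}"
        )

    # Freshness: the newest record should fall inside the allowed age window.
    newest = max(r[timestamp_field] for r in rows)
    if now - newest > max_age:
        issues.append(f"{timestamp_field}: newest record is older than {max_age}")

    return issues


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    sample = [
        {"text": "refund policy", "updated_at": now - timedelta(hours=1)},
        {"text": None, "updated_at": now - timedelta(hours=2)},
    ]
    for issue in check_batch(sample, "text", "updated_at", now=now):
        print(issue)
```

Run on a schedule, a check like this flags incomplete or stale batches without anyone having to inspect them by hand. Real deployments would typically cover many more dimensions (volume, schema, distribution) than the two shown here.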

This anemic approach allows for more data incidents to slip through the cracks, which can multiply hallucinations, diminish the accuracy or safety of outputs, and erode confidence in both the AI products and the companies that build them—all the while wreaking financial havoc in the process.

So, is this Faustian bargain placing AI’s success—and corporate reputations—at risk?

“In 2024, data leaders are being tasked with not only shepherding their companies’ GenAI initiatives from experimentation to production, but also ensuring that they’re successful. Enterprise AI relies on three key pillars: security, compliance, and privacy; scalability; and trust,” said Barr Moses, co-founder and CEO of Monte Carlo. “As validated by our survey, AI applications will fail without a proactive, end-to-end approach to tackling data trust. Prioritizing automatic, end-to-end approaches to data quality must be P0 for data leaders, and automated, ML-driven approaches like data observability will drive the future of reliable AI.”

To learn more about how organizations are making data and AI more reliable with Monte Carlo, schedule a demo in the link below.
