5 Non-Obvious Things to Consider When Building Your Data Platform

Here are 5 important questions to answer when migrating to the self-service data platform of your dreams.

Building a data platform—i.e., a central repository for all your company’s data, which enables the acquisition, storage, delivery, and governance of that data while maintaining security across the data lifecycle—has become a rite of passage for today’s data teams. Data platforms are critical in a modern organization because they help optimize operations by allowing leaders to glean actionable insights from data more easily.

In this brave new data-driven world, though, ensuring you’re getting started on the right foot as you build your data platform can be challenging. Some considerations as you build are obvious: for example, the tools you’ll need, the users you’ll serve, the data sources you’ll leverage, and the ultimate uses of the platform. But there are several organizational and cultural considerations that even the best-intentioned data teams might overlook in their eagerness to build.

We sat down with Noah Abramson and Angie Delatorre from Toast, a leading point-of-sale provider for restaurants and a recent unicorn, to learn about their approach to building a high-functioning, modern data platform.

During our conversation, we learned that there are five important considerations when building your data platform. Let’s dive in.

Consideration 1: How will you gain stakeholder buy-in?

A data platform is only helpful if its users—i.e., stakeholders across the business—are open to and familiar with it. Before creating a data platform, it’s critical to get all the teams that might take advantage of the platform on board.

At Toast, this was blessedly simple.

“Toast is a super data-driven company,” says Noah. “I think that’s been really beneficial for our [data] team, to be honest. Our data engineering team director has done a really nice job positioning us to be as valuable as possible for the rest of the company, and enabling people with those types of data insights to then have support to make decisions, then measure those decisions and outcomes.”

Employees in every division across the organization should understand how the data platform will ultimately provide value to them. That’s the initial job of the data team: to explain and showcase that value, and to establish a method of measuring success even as the company scales. The Toast data team began by understanding the business problems affecting their colleagues, then positioned the data team as purveyors of potential solutions.

“Our director gets our team involved early into these problems and helps us understand how we’re going to solve it, how we’re going to measure that we’re solving it correctly, and how we’re going to start to track that data—not just now but also in the future,” says Noah.

Over time, Toast removed bottlenecks by building a self-serve analytics model to serve the broader company.

“[Our process was originally] super centralized, and we owned the entire stack,” explains Noah. “As the company started to grow, it got overwhelming. We pivoted to a self-service model, and we became the team that you would consult with as you were building these dashboards and owning the data.”

Consideration 2: Who owns what in the data stack?

To be used most effectively, data should be viewed as a shared resource across the organization. Various teams take ownership of the company’s data at various points in its lifecycle: the data engineering team may own the raw data, for example, before they hand it off to the analytics engineering team for analysis and insights, which can then be parsed and applied by the business intelligence team.

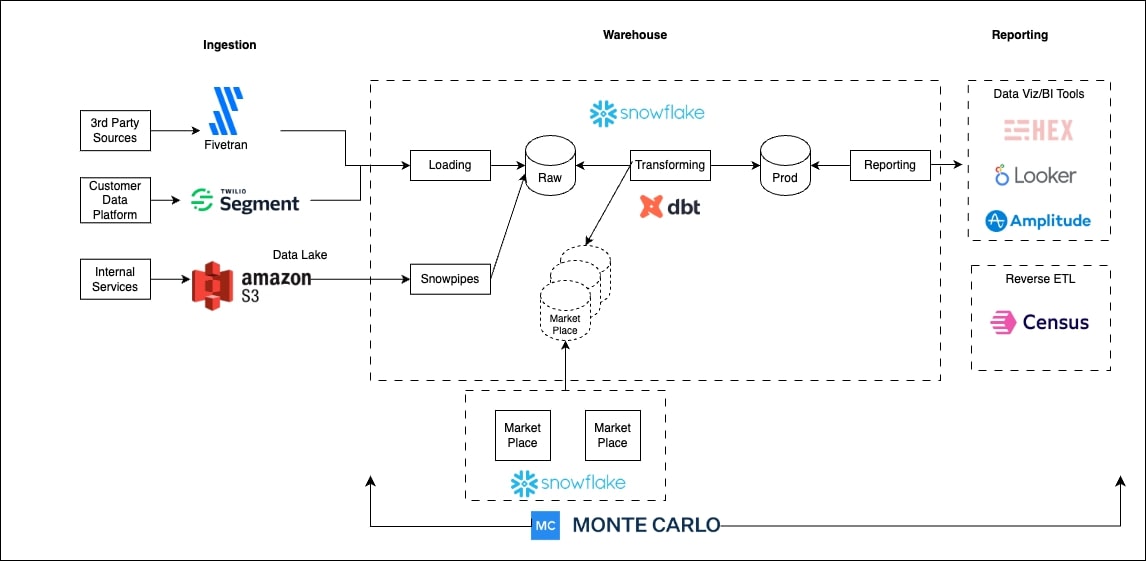

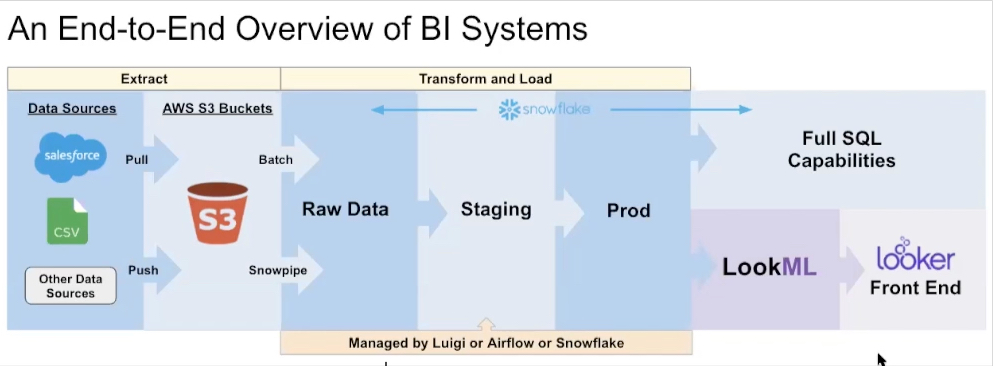

The end-to-end data stack comprises multiple tools and technologies that support each of these teams. Toast derives data from sources including Salesforce, NetSuite, Workday, and Toast itself. That data flows into S3, the team’s data lake, and is then copied into Snowflake, its cloud data warehouse. The team uses Looker as its front-end tool, and all jobs are orchestrated via Airflow.
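To make the flow above concrete, here is a minimal sketch of the stage ordering as a dependency graph, using only the Python standard library. The stage names are hypothetical labels chosen for illustration. This is not Toast's actual Airflow configuration, which the conversation does not describe.

```python
from graphlib import TopologicalSorter

# Each stage maps to the set of stages it depends on.
# Stage names are illustrative assumptions, not Toast's real job names.
PIPELINE = {
    "extract_sources": set(),                 # Salesforce, NetSuite, Workday, Toast
    "land_in_s3": {"extract_sources"},        # data lake
    "copy_to_snowflake": {"land_in_s3"},      # cloud data warehouse
    "refresh_looker": {"copy_to_snowflake"},  # front-end BI layer
}


def run_order(pipeline):
    """Return the stages in an order that respects every dependency."""
    return list(TopologicalSorter(pipeline).static_order())


print(run_order(PIPELINE))
# ['extract_sources', 'land_in_s3', 'copy_to_snowflake', 'refresh_looker']
```

An orchestrator such as Airflow plays the role of `run_order` here: it decides when each stage may start based on what has already finished.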

At Toast, the data platform team owns the company’s external-facing data insights and analytics.

“One of our big value adds [as an organization] is giving business insights to our customers: restaurants,” says Noah. “How did they do over time? How much were their sales yesterday? Who is their top customer? It’s the data platform team’s job to engage with our restaurant customers.”

Noah’s team, in contrast, is largely internal.

“We say our customers are all Toast employees,” he says. “We try to enable all of them with as much data as possible. Our team services all internal data requests from product to go-to-market to customer support to hardware operations.” It’s thus Noah’s team’s job to build out data flows into overarching systems and help stakeholders across the organization derive insights from tools including Snowflake and Looker.

Consideration 3: How will you measure success?

If you can’t measure, you can’t manage—and that truism applies when it comes to assessing the impact of data on the business. When building a data platform, it’s important both to measure how stakeholders can leverage data to support business needs and to ascertain the quality and efficiency of the data team’s performance.

“We really listen to what the business needs,” says Noah regarding how his team thinks about measuring data-related KPIs. “At the top level, they come up with a couple different objectives to hit on: e.g., growing customers, growing revenue, cutting costs in some spend area.”

Noah and his team then take these high-level business objectives and use them to build out Objectives and Key Results (OKRs).

“We’re able to do that in a few ways,” says Noah. “If you think about growing the customer base, for example, we ask, ‘how do we enable people with data to make more decisions?’ If somebody has a new product idea, how do we play with that and let them put it out there and then measure it?”

Additionally, the team focuses on measuring the scalability of its processes. “Not only do we listen to the business needs and obviously support them, but we also look internally and address scalability,” says Angie. “If a job used to take one hour, and now it takes three hours, we always need to go back and look at those instances, so that shapes our OKRs as well.”
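Angie's example of a one-hour job growing to three hours can be expressed as a simple ratio check. The sketch below is an illustration only: the function name, the job names, and the 2x threshold are all assumptions, not something the Toast team described.

```python
def slowed_jobs(baseline_minutes, latest_minutes, max_ratio=2.0):
    """Return {job: ratio} for jobs whose latest run exceeds max_ratio x baseline."""
    flagged = {}
    for job, baseline in baseline_minutes.items():
        latest = latest_minutes.get(job)
        if latest is None or baseline <= 0:
            continue  # no recent run, or no usable baseline to compare against
        ratio = latest / baseline
        if ratio > max_ratio:
            flagged[job] = round(ratio, 2)
    return flagged


# Hypothetical runtimes in minutes.
baseline = {"load_orders": 60, "load_payments": 30}
latest = {"load_orders": 180, "load_payments": 33}

print(slowed_jobs(baseline, latest))
# {'load_orders': 3.0}
```

A report like this could feed an OKR such as "no scheduled job runs more than twice as long as its baseline," though the right threshold would depend on the team.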

Consideration 4: Will you centralize or decentralize your org structure?

Every data team is different, and each team’s needs will change over time. Should your company pursue a centralized organizational structure for your data team? Will centralization impose too many bottlenecks? Will a decentralized approach lead to duplication and complexity? Understanding what each option looks like—and choosing the model that’s best for your business at a given point in time—is an important consideration as you build your data platform.

Over the years, Toast’s own structure has run the gamut: from centralized to decentralized to hybrid. Originally, all requests flowed through the data team. “And that worked,” says Noah. “But as the company started to grow, it got overwhelming.”

Toast pivoted to a decentralized model that emphasized self-service for analysts and positioned the data team in a more consultative role. This change arose from several byproducts of rapid growth, including a huge volume of incoming data, an increased number of people relying on that data, and the limited resources of the growing data engineering team.

As Toast grew, the company knew it would need to leverage data in new ways to support growth.

“We needed to think about how to enable all of these new business lines that were being spun up to have the same level of insights that our go-to-market team had,” says Noah. “We have this high expectation of data usage, and we wanted to enable all the people [across the organization] to have that same access that we’ve built out for go-to-market and sales.”

That led to questions of prioritization for a stretched-thin data engineering team with limited bandwidth. “Our most current evolution of our team really addresses those types of [business] needs,” says Noah. “In our current setup, a super new paradigm that we’re living in is data engineering, analytics engineering, and then visualizations on top of it. We are now owning efficiently getting data into Snowflake and with all of our many different sources.”

In turn, the analytics engineering team is focused on creating a data model that can service the various ways different Toast constituents think about data and the business.

“I think the new analytics engineering team—and we’re really excited about it—is going to zoom out a little bit and understand all the problems and see how we can build a data model to service that,” says Noah. Toast’s current hybrid organizational structure works best for its current needs—and the team is always willing to reevaluate and pivot should its needs and circumstances change.

Consideration 5: How will you tackle data reliability and trust?

As volumes of data continue to increase in lockstep with the willingness of various business units to leverage them, data reliability—that is, an organization’s ability to deliver high data availability and health throughout the entire data lifecycle—becomes increasingly important. Whether you choose to build your own data reliability tool or buy one, it will become an essential part of a functional data platform.

As the Toast team began working with ever-growing volumes of data, ensuring data reliability became mission-critical. “There’s a lot of moving parts,” says Noah of the data stack. “There’s a lot of logic in the staging areas and lots of things that happen. So that kind of begs the question, how do we observe all of this data? And how do we make sure when data gets to production and Looker that it is what we want it to be, and it’s accurate, and it’s timely, and all of those fun things that we actually care about?”

Originally, Noah and two other engineers spent a day building a data freshness tool they called Breadbox. The tool could conduct basic data observability tasks including storing raw counts, storing percent nulls, ensuring data would land in the data lake when expected, and more.
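Breadbox's code is not shown in the conversation, so the following is only a rough sketch of the kinds of checks described above: a raw row count, a percent-null measure, and a freshness test. The function names, the sample rows, and the six-hour freshness window are all assumptions made for illustration.

```python
from datetime import datetime, timedelta, timezone


def profile_column(rows, column):
    """Return the raw row count and the percentage of nulls in one column."""
    total = len(rows)
    nulls = sum(1 for row in rows if row.get(column) is None)
    percent_null = 100.0 * nulls / total if total else 0.0
    return {"row_count": total, "percent_null": percent_null}


def is_fresh(last_loaded_at, max_age, now=None):
    """Return True if the most recent load landed within the allowed window."""
    now = now or datetime.now(timezone.utc)
    return now - last_loaded_at <= max_age


# Hypothetical sample data.
rows = [
    {"order_id": 1, "tip": 2.5},
    {"order_id": 2, "tip": None},
    {"order_id": 3, "tip": 1.0},
    {"order_id": 4, "tip": None},
]
stats = profile_column(rows, "tip")
print(stats)  # {'row_count': 4, 'percent_null': 50.0}

checked_at = datetime(2024, 1, 2, 6, 0, tzinfo=timezone.utc)
last_load = datetime(2024, 1, 2, 1, 0, tzinfo=timezone.utc)
print(is_fresh(last_load, timedelta(hours=6), now=checked_at))  # True
```

Checks like these are quick to write for one table. The maintenance cost comes from wiring them up to every new source.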

“That was really cool,” notes Noah, “but as our data grew, we didn’t keep up. As all of these new sources came in and demanded a different type of observation, we were spending time building the integration into the tool and not as much time in building out the new test for that tool.”

Once the team reached that pivotal level of growth, it was time to consider purchasing a data observability platform rather than pouring time and resources into perfecting its own.

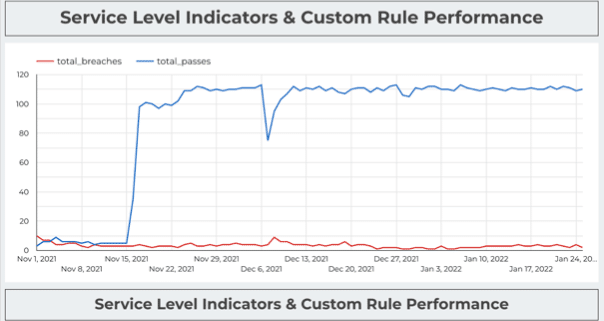

“With Monte Carlo, we got the thing up and running within a few hours and then let it go,” says Noah. “We’ve been comparing our custom tool to Monte Carlo in this implementation process. We didn’t write any code. We didn’t do anything except click a few buttons. And it’s giving us insights that we spent time writing and building or maintaining.”

Ultimately, it was a no-brainer for Toast to purchase the tool it needed while allowing the data engineering team to focus on adding value to the business.

When it comes to building or buying, Angie says, the right choice will differ for any given company.

“I think that our build worked very well for what it was,” she says. “But when you talk about scalability, we’re growing so rapidly and onboarding people, and it’s going to be tough to teach them how to use this tool. And if something breaks, it’s tough to [fix].”

In the long run, she says, “I want our team focusing on enriching data and enabling Toast. And if there is a best-in-class tool that does this, we are very willing to pay for that and enable our expertise to do what we do best and enable business users to do their thing with the data.”

Since implementing Monte Carlo, Toast’s data engineering team can do just that. What’s more, Monte Carlo offers additional value to the company beyond what a bespoke tool could provide.

“There are a few things [about Monte Carlo] that I think are extremely valuable,” says Angie. “Machine learning, being able to go in and say ‘this is expected,’ or ‘this is fixed.’ Another thing is the record of the incidents and how they happened. I think it’s extremely valuable to be able to go back and have that record.”

Monte Carlo’s simplicity also helps the team avoid alert fatigue, she suggests.

“It just cuts down on time,” she says. “You’re directed exactly toward what the problem could be, and from there, you can expand.”

The best data platform for your team

Each data platform at each company will look a little different—and it should. When it comes to creating the best data platform for your team and organization, it’s important to ask yourself nuanced questions about your company’s culture, business objectives, structure, and more.

Interested in learning more about how to build reliable data platforms? Book a time to speak with us in the form below.
