5 Skills Data Engineers Should Master to Keep Pace with GenAI

If you’re a data engineer experiencing GenAI-induced whiplash, you’re not alone. On one hand, everyone’s talking about whether GenAI’s not-insignificant data engineering skills are going to automate away their jobs.

On the other, business leaders are realizing that slapping a quick ChatGPT integration into their product isn’t going to cut it — and they’re looking to data engineers for a better solution.

Organizations need to connect LLMs with their proprietary data and business context to actually create value for their customers and employees. They need robust data pipelines, high-quality data, well-guarded privacy, and cost-effective scalability.

Who can deliver? Data engineers. Or rather, data engineers who aren’t just keeping pace with GenAI — they’re mastering the must-have skills to become the architects of their organizations’ AI applications.

The truth is, GenAI isn’t going anywhere. Ambitious CEOs and boards are looking for data teams who can use this technology to drive the business forward. Our own research shows that nearly 50% of data teams surveyed are already feeling significant pressure from their CEOs to invest in GenAI at the expense of potentially higher-returning investments.

Data engineers, take note. We’re at the precipice of a sea change: automating away some of the more mundane tasks of data engineering, while ushering in the potential to build enormously effective applications for your organization. Adopting these five skills will help you stay ahead of the AI curve, and become downright indispensable.

Table of Contents

1) Retrieval-augmented generation (RAG)

Mastering retrieval-augmented generation (RAG) is our number-one recommendation for data engineers who want to start building GenAI applications that drive meaningful value for the business. Right now, RAG is the essential technique to make GenAI models useful by giving an LLM access to an integrated, dynamic dataset while responding to prompts.

For example, customer service chatbots can use RAG to offer personalized assistance. With RAG, when a customer makes an inquiry about an order, the system can retrieve their specific details from the database and generate a response with relevant follow-up options, like tracking a shipment or managing returns.

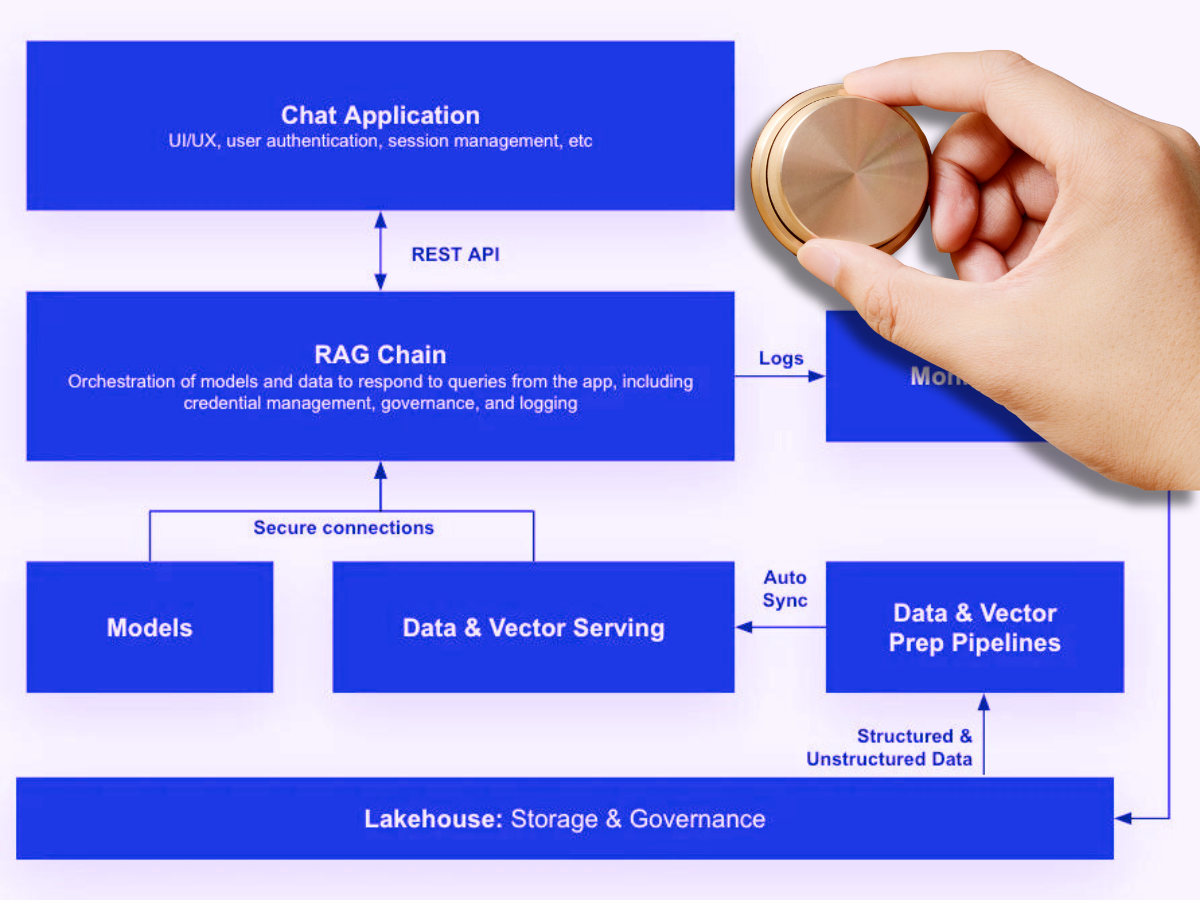

RAG effectively bridges the gap between a query and the relevant data needed to answer it, following a structured approach:

- RAG architecture combines data retrieval with a text generator model.

- The RAG chain starts with a user query, which triggers the system to fetch relevant data from the database. This data, alongside the query, is then passed to the LLM to generate a specific, accurate answer.

- The success of a RAG system hinges on the quality of the underlying data. The database must be a reliable source of current and accurate information, continually updated through a robust data pipeline.

Unlike fine-tuning LLMs (more on that in a minute), RAG doesn’t require extensive computing resources and improves transparency for data teams, who can trace what data the model used to generate its responses.

One note: mastering RAG typically requires implementing a vector database to efficiently manage the queryable data. More on that in a minute, too.

While the AI landscape is evolving quickly, as of today, RAG is widely considered the most efficient way for organizations to integrate real-world data into their GenAI-powered applications. In our book, that makes it a must-have skill for forward-thinking data engineers.

2) Fine-tuning LLMs

Like RAG, the practice of fine-tuning LLMs is a path toward driving business value from GenAI. But instead of integrating a dynamic database to an existing LLM, fine-tuning involves training an LLM on a smaller, task-specific, and labeled dataset. By adjusting the model’s parameters and embeddings based on your curated dataset, you can improve information retrieval and meet nuanced, domain-specific requirements.

Fine-tuning shines in domain-specific areas where you need precision, deep nuance, and expert-level AI responses, such as drafting legal documents or handling highly technical customer support questions. This approach can help you overcome information bias and other limitations like language repetitions or inconsistencies.

But it has a few drawbacks, including eating up a lot of computing power and time. And if your fine-tuned model starts making mistakes or hallucinating, you don’t have much visibility into its black-box environment of reasoning and response generation. It’s hard to pinpoint why something’s gone wrong.

If you’re debating whether to dive deep into fine-tuning or RAG, the latter is generally a more versatile and impactful skill. However, combining fine-tuning with RAG can offer the best of both worlds, giving you the enviable ability to build highly accurate and context-aware AI applications.

3) Vector databases

As we mentioned earlier, vector databases are a newly crucial component of the data/AI stack — and data engineers who want to stay ahead of GenAI need to get comfortable working with them. These databases are not just a trend, but a fundamental shift in how data is managed, accessed, and utilized in real-time AI applications.

A vector database is a specialized type of database designed to store, manage, and retrieve complex, multi-dimensional data vectors. These vectors are often derived from complex data types like images, text, or audio, transformed through processes like embedding or feature extraction to represent the data in a form that’s computationally efficient for analysis. This representation allows AI models to understand and process the nuances of data beyond traditional relational databases that handle discrete, structured data. Vector databases are engineered to perform fast and efficient searches across these high-dimensional spaces, identifying data points (vectors) that are most similar to a query vector — crucial for applications like recommendation systems, where you need to quickly find items similar to a user’s interests, or in natural language processing tasks, where the goal is to find text with meaning similar to a query.

For a data engineer, working with a vector database involves not just managing the storage and retrieval of vector data but also ensuring the database’s architecture is optimized for the specific access patterns and performance requirements of AI applications. This includes understanding indexing mechanisms that support efficient high-dimensional searches, scalability to handle growing data volumes, and integration with data pipelines for continuous updating and processing of vector data.

Data engineers also need to proactively monitor the health and quality of real-time data as it moves through a vector database and the associated pipelines, so they can intervene and prevent any data degradation or anomalies that could compromise the performance of their AI applications. This means leveraging data observability tooling like Monte Carlo — the first solution to monitor vector database pipelines, including Pinecone, as part of our commitment to facilitate the adoption of reliable GenAI.

Given the central role of vector databases in many GenAI-powered applications, managing and optimizing this new layer of the data stack — including maintaining data quality — will be an invaluable skill for data engineers going forward.

4) Business knowledge

Even the most finely tuned models or sophisticated vector databases won’t make an impact if your data product isn’t solving a meaningful problem. So don’t neglect the most fundamental data engineering skill that AI will never replace: business knowledge and stakeholder engagement.

As a data engineer, you can’t build and operationalize market-ready AI until you understand what problems your stakeholders are facing, and how GenAI can solve them. That requires building relationships with your business users — sitting shoulder-to-shoulder to understand the nuances of their problems, and the role data can play in achieving their goals. You need to go beyond technical conversations and requirement-gathering to truly get close to the business.

GenAI can handle a lot of data engineering tasks, including writing passable Python scripts, assisting with data integrations, and performing basic ETL processes. But what it can’t do is replicate human insight, collaboration, and creativity around solving problems. To stay irreplaceable, data engineers need to become business-minded strategists and see opportunities to develop GenAI applications that will directly contribute to the organization’s success.

5) Prompt engineering

Finally, one of the most important skills when it comes to integrating and leveraging GenAI is learning how to speak AI. That’s where prompt engineering comes in.

Prompt engineering refers to the skillful crafting of inputs (or “prompts”) to guide AI models in generating specific, relevant, and accurate outputs. This process is crucial in applications of GenAI, such as natural language processing, content creation, and data analysis tasks, where the quality of the output heavily depends on how well the input prompt is designed.

For data engineers, prompt engineering extends beyond formulating queries. It involves a deep understanding of the model’s architecture and capabilities, the nuances of language, and the specific requirements of the task at hand. The goal is to construct prompts that efficiently leverage the AI’s understanding and generation capabilities to produce desired results, whether that’s answering a complex query, generating insightful analyses, or creating content that aligns with certain criteria.

You also may need to install guardrails to prevent your end users’ prompts from abusing your AI applications — such as your chatbot happily selling brand new cars for a dollar.

Mastering prompt engineering usually involves a lot of experimentation, staying on top of the latest AI models and best practices, and incorporating feedback loops into your approach. By honing your prompt engineering skills, you can help your organization develop GenAI applications that deliver meaningful value and positive experiences to your end users.

The neverending story: data quality

Mastering these new methodologies and technologies will keep data engineers on the cutting edge of GenAI development. But you still need the age-old requirement to create value: high-quality data.

So along with these emerging skills, keep investing in your ability to monitor, trace, resolve, and prevent data downtime. Data observability tooling like Monte Carlo will help you ensure that data is accurate and reliable along every stage of its lifecycle, including within GenAI applications.

Our promise: we will show you the product.

Product demo.

Product demo.  What is data observability?

What is data observability?  What is a data mesh--and how not to mesh it up

What is a data mesh--and how not to mesh it up  The ULTIMATE Guide To Data Lineage

The ULTIMATE Guide To Data Lineage