The 6 Data Quality Dimensions with Examples

It’s clear that data quality is becoming more of a focus for more data teams. So why are there still so many questions like these:

A quick search of subreddits for data engineers, data analysts, data scientists, and more turns up plenty of users seeking data quality advice. And while the comment below may seem like the accepted way of doing data quality management…

… there’s actually a much better way.

Building and adhering to data quality dimensions can enable data teams to improve and maintain the reliability of their data. In this article, we’ll dive into the six commonly accepted data quality dimensions with examples, how they’re measured, and how they can better equip data teams to manage data quality effectively.


What are Data Quality Dimensions?

Data quality dimensions are a framework for effective data quality management. Defining the dimensions of data quality helps you understand and measure the current quality of your organization’s data, and it also helps you set realistic goals and KPIs for data quality measurement.

The six dimensions of data quality are accuracy, completeness, integrity, validity, timeliness, and uniqueness.

By ensuring these data quality dimensions are met, data teams can better support downstream business intelligence use cases, building data trust.

Let’s break down each of the six data quality dimensions with examples to understand how they contribute to reliable data.

What are the 6 Data Quality Dimensions?

Data Accuracy

Data accuracy is the degree to which data correctly represents the real-world events or objects it is intended to describe. Destinations on a map that correspond to real physical locations are an example of accurate data; a more common counterexample is erroneous values in a spreadsheet.

Data teams can measure data accuracy by:

- Precision: The ratio of relevant data to retrieved data

- Recall: Measures sensitivity and refers to the ratio of relevant data that was retrieved to all relevant data in the dataset

- F1 score: The harmonic mean of precision and recall, which combines both into a single score for a model or check across an entire dataset
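As a rough illustration of these three metrics, the sketch below scores a hypothetical accuracy check that flags record IDs as erroneous. The function name `precision_recall_f1` and the sample IDs are assumptions made for this example, and `truly_bad` stands in for whatever trusted reference a team has available.

```python
def precision_recall_f1(flagged, truly_bad):
    """Score a check that flags erroneous records.

    flagged:   IDs the (hypothetical) check marked as erroneous
    truly_bad: IDs confirmed erroneous against a trusted reference
    """
    flagged, truly_bad = set(flagged), set(truly_bad)
    true_positives = len(flagged & truly_bad)

    # Precision: of everything flagged, how much was really erroneous?
    precision = true_positives / len(flagged) if flagged else 0.0
    # Recall: of everything really erroneous, how much was flagged?
    recall = true_positives / len(truly_bad) if truly_bad else 0.0

    if precision + recall == 0:
        return precision, recall, 0.0
    # F1: harmonic mean of precision and recall
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


p, r, f1 = precision_recall_f1(flagged=[1, 2, 3, 4], truly_bad=[2, 3, 4, 5, 6])
print(f"precision={p:.2f} recall={r:.2f} f1={f1:.2f}")
# precision=0.75 recall=0.60 f1=0.67
```

Here three of the four flagged records are truly erroneous (precision 0.75), but only three of the five erroneous records were caught (recall 0.60).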

Data teams can also determine data accuracy through:

- Statistical analysis: A comprehensive review of data patterns and trends

- Sampling techniques: Inferences made about an overarching dataset based on a sample of that dataset

- Automated validation processes: Leveraging technology to automatically ensure the correctness and applicability of data

Data Completeness

Data completeness describes whether the data you’ve collected reasonably covers the full scope of the question you’re trying to answer, and if there are any gaps, missing values, or biases introduced that will impact your results.

For example, missing transactions can lead to under-reported revenue. Gaps in customer data can hurt a marketing team’s ability to personalize and target campaigns. And any statistical analysis based on a data set with missing values could be biased.

The first step to determining data completeness is outlining what data is most essential and what can be unavailable without compromising the usefulness and trustworthiness of your data set.

There are a few different ways to quantitatively assess the completeness of the most important tables or fields within your assets, including:

- Attribute-level approach: Evaluate how many individual attributes or fields you are missing within a data set

- Record-level approach: Evaluate the completeness of entire records or entries in a data set

- Data sampling: Systematically sample your data set to estimate completeness

- Data profiling: Use a tool or programming language to surface metadata about your data
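The attribute-level and record-level approaches can be sketched in a few lines of plain Python. This is a minimal example under stated assumptions: the `customers` rows are invented, the helper names are hypothetical, and "missing" is taken to mean `None` or an empty string, which a real data set may define differently.

```python
MISSING = (None, "")  # assumption: what counts as a missing value


def is_present(value):
    return value not in MISSING


def attribute_completeness(records, fields):
    """Attribute-level: share of non-missing values for each field."""
    if not records:
        return {field: 0.0 for field in fields}
    return {
        field: sum(is_present(r.get(field)) for r in records) / len(records)
        for field in fields
    }


def record_completeness(records, required_fields):
    """Record-level: share of records with every required field present."""
    if not records:
        return 0.0
    complete = sum(
        all(is_present(r.get(f)) for f in required_fields) for r in records
    )
    return complete / len(records)


customers = [
    {"id": 1, "email": "a@example.com", "country": "US"},
    {"id": 2, "email": None, "country": "DE"},
    {"id": 3, "email": "c@example.com", "country": ""},
    {"id": 4, "email": "d@example.com", "country": "FR"},
]
fields = ["id", "email", "country"]

by_field = attribute_completeness(customers, fields)
by_record = record_completeness(customers, fields)
print(by_field)   # {'id': 1.0, 'email': 0.75, 'country': 0.75}
print(by_record)  # 0.5
```

The two views can disagree: each field here is at least 75% complete, yet only half of the records are fully usable.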

Data Timeliness

Data timeliness is the degree to which data is up-to-date and available at the required time for its intended use. This is important for enabling businesses to make quick and accurate decisions based on the most current information available.

Data timeliness affects data quality because stale or late-arriving data makes the company’s information less reliable and less useful, even when every individual value is correct.

Timeliness of data can be measured using key metrics such as:

- Data freshness: Age of data and refresh frequency

- Data latency: Delay between data generation and availability

- Data accessibility: Ease of retrieval and use

- Time-to-insight: Total time from data generation to actionable insights
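Freshness and latency reduce to simple timestamp arithmetic. The sketch below assumes each table exposes a last-loaded timestamp and each event carries the time it was generated; the function names, the sample timestamps, and the one-hour threshold are all illustrative rather than taken from any particular tool.

```python
from datetime import datetime, timedelta, timezone


def freshness(last_loaded_at, now):
    """Data freshness: age of the most recent load."""
    return now - last_loaded_at


def latency(event_time, available_time):
    """Data latency: delay between generation and availability."""
    return available_time - event_time


def is_stale(last_loaded_at, now, max_age):
    """True when the table is older than the agreed threshold."""
    return freshness(last_loaded_at, now) > max_age


# Fixed timestamps keep the example deterministic.
now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
last_loaded_at = datetime(2024, 1, 1, 9, 30, tzinfo=timezone.utc)
event_time = datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)

age = freshness(last_loaded_at, now)
delay = latency(event_time, last_loaded_at)
stale = is_stale(last_loaded_at, now, max_age=timedelta(hours=1))

print(age)    # 2:30:00
print(delay)  # 0:30:00
print(stale)  # True
```

The threshold passed as `max_age` is where the "required time for its intended use" gets encoded, so it should come from the consumers of the data rather than from the pipeline.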

Data Uniqueness

Data uniqueness means that no record in your database has been copied, in whole or in part, into another record.

Without proper data quality testing, duplicate data can wreak all kinds of havoc—from spamming leads and degrading personalization programs to needlessly driving up database costs and causing reputational damage (for instance, duplicate social security numbers or other user IDs).

Data teams can use uniqueness tests to measure their data uniqueness. Uniqueness tests enable data teams to programmatically identify duplicate records so they can clean and normalize raw data before it enters the production warehouse.
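A uniqueness test can be as small as counting normalized key values. The following sketch assumes an `email` key and a simple normalization (trim and lowercase); both are illustrative choices, and the `leads` rows are made up.

```python
from collections import Counter


def normalize(value):
    """Assumed normalization: ignore case and surrounding whitespace."""
    return value.strip().lower()


def find_duplicates(records, key):
    """Return each normalized key value that appears more than once."""
    counts = Counter(normalize(r[key]) for r in records)
    return {value: n for value, n in counts.items() if n > 1}


def uniqueness_ratio(records, key):
    """Distinct key values divided by total records (1.0 means no duplicates)."""
    if not records:
        return 1.0
    distinct = {normalize(r[key]) for r in records}
    return len(distinct) / len(records)


leads = [
    {"email": "ana@example.com"},
    {"email": "Ana@Example.com "},  # same lead, different formatting
    {"email": "bo@example.com"},
    {"email": "cy@example.com"},
]

duplicates = find_duplicates(leads, "email")
ratio = uniqueness_ratio(leads, "email")
print(duplicates)  # {'ana@example.com': 2}
print(ratio)       # 0.75
```

Normalizing before counting matters: without it, the two spellings of the same address would pass as distinct records.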

Data Validity

Data validity simply means how well data meets certain criteria. These criteria often evolve from analysis of prior data, as relationships and issues are revealed.

Data teams can develop data validity rules or data validation tests after profiling the data and seeing where it breaks. These rules might include:

- Valid values in a column

- Column conforms to a specific format

- Primary key integrity

- Nulls in a column

- Valid value combination

- Computational rules

- Chronological rules

- Conditional rules
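Several of these rule types can be expressed as small predicates over a record. The sketch below is one possible shape, not a prescribed one: the order schema, the allowed statuses, the simplified email pattern, and the rule names are all assumptions made for illustration.

```python
import re
from datetime import date

ALLOWED_STATUSES = {"pending", "shipped", "delivered"}  # hypothetical values
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # deliberately simple

# Each rule returns True when the order is valid for that rule.
RULES = {
    # Valid values in a column
    "valid_status": lambda o: o["status"] in ALLOWED_STATUSES,
    # Column conforms to a specific format
    "email_format": lambda o: bool(EMAIL_PATTERN.match(o["email"])),
    # Nulls in a column
    "id_not_null": lambda o: o["order_id"] is not None,
    # Computational rule (integer cents avoid float rounding)
    "total_matches": lambda o: o["total_cents"] == o["quantity"] * o["unit_price_cents"],
    # Chronological rule
    "ship_after_order": lambda o: o["shipped_on"] is None or o["shipped_on"] >= o["ordered_on"],
    # Conditional rule: anything past "pending" must have a ship date
    "shipped_has_date": lambda o: o["status"] == "pending" or o["shipped_on"] is not None,
}


def failed_rules(order):
    """Return the names of all rules the order violates."""
    return [name for name, rule in RULES.items() if not rule(order)]


good = {
    "order_id": 1, "status": "shipped", "email": "a@example.com",
    "quantity": 2, "unit_price_cents": 500, "total_cents": 1000,
    "ordered_on": date(2024, 1, 1), "shipped_on": date(2024, 1, 3),
}
bad = {
    "order_id": None, "status": "lost", "email": "not-an-email",
    "quantity": 2, "unit_price_cents": 500, "total_cents": 900,
    "ordered_on": date(2024, 1, 5), "shipped_on": date(2024, 1, 3),
}

print(failed_rules(good))  # []
print(failed_rules(bad))
# ['valid_status', 'email_format', 'id_not_null', 'total_matches', 'ship_after_order']
```

Keeping rules in a named mapping makes it easy to add new ones as profiling reveals where the data breaks, and to report failures by rule name.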

Data Integrity

Data integrity refers to the accuracy and consistency of data over its lifecycle. When data has integrity, it means it wasn’t altered during storage, retrieval, or processing without authorization. It’s like making sure a letter gets from point A to point B without any of its content being changed.

Data integrity is crucial in fields where the preservation of original data is paramount. Consider the healthcare industry, where patient records must remain unaltered to ensure correct diagnoses and treatments. Even if an incorrect diagnosis was made, you would still want it to remain on the record (along with the corrected diagnosis) so a medical professional could understand the full history and context of a patient’s journey.

Maintaining data integrity involves a mix of physical security measures, user access controls, and system checks:

- Physical security can involve storing data in secure environments to prevent unauthorized access.

- User access controls ensure only authorized personnel can modify data.

- System checks can involve backup systems and error-checking processes to identify and correct any accidental alterations.

- Version control and audit trails can be used to keep track of all changes made to the data, ensuring its integrity over time.
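One simple form of error-checking is to store a fingerprint of each record and compare it later. The sketch below uses SHA-256 over a canonical JSON form; the record layout and helper names are hypothetical, and this only detects alterations. It does not say who made the change or whether it was authorized, which is what access controls and audit trails are for.

```python
import hashlib
import json


def fingerprint(record):
    """SHA-256 of a canonical JSON form, so key order does not matter."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def changed_ids(stored_fingerprints, current_records):
    """Return IDs whose current content no longer matches the stored fingerprint."""
    return [
        record_id
        for record_id, record in current_records.items()
        if fingerprint(record) != stored_fingerprints.get(record_id)
    ]


# Hypothetical patient records, keyed by ID.
records = {
    "p1": {"patient": "p1", "diagnosis": "A"},
    "p2": {"patient": "p2", "diagnosis": "B"},
}

# Take a baseline when the data is known to be good.
baseline = {record_id: fingerprint(rec) for record_id, rec in records.items()}

# Simulate an alteration made outside the normal update path.
records["p2"]["diagnosis"] = "C"

altered = changed_ids(baseline, records)
print(altered)  # ['p2']
```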

Monitor your Data Quality with Monte Carlo

With key measurements around these data quality dimensions in place, data teams can more effectively operationalize their data quality management process. But monitoring all of these data quality dimensions manually demands significant time and resources.

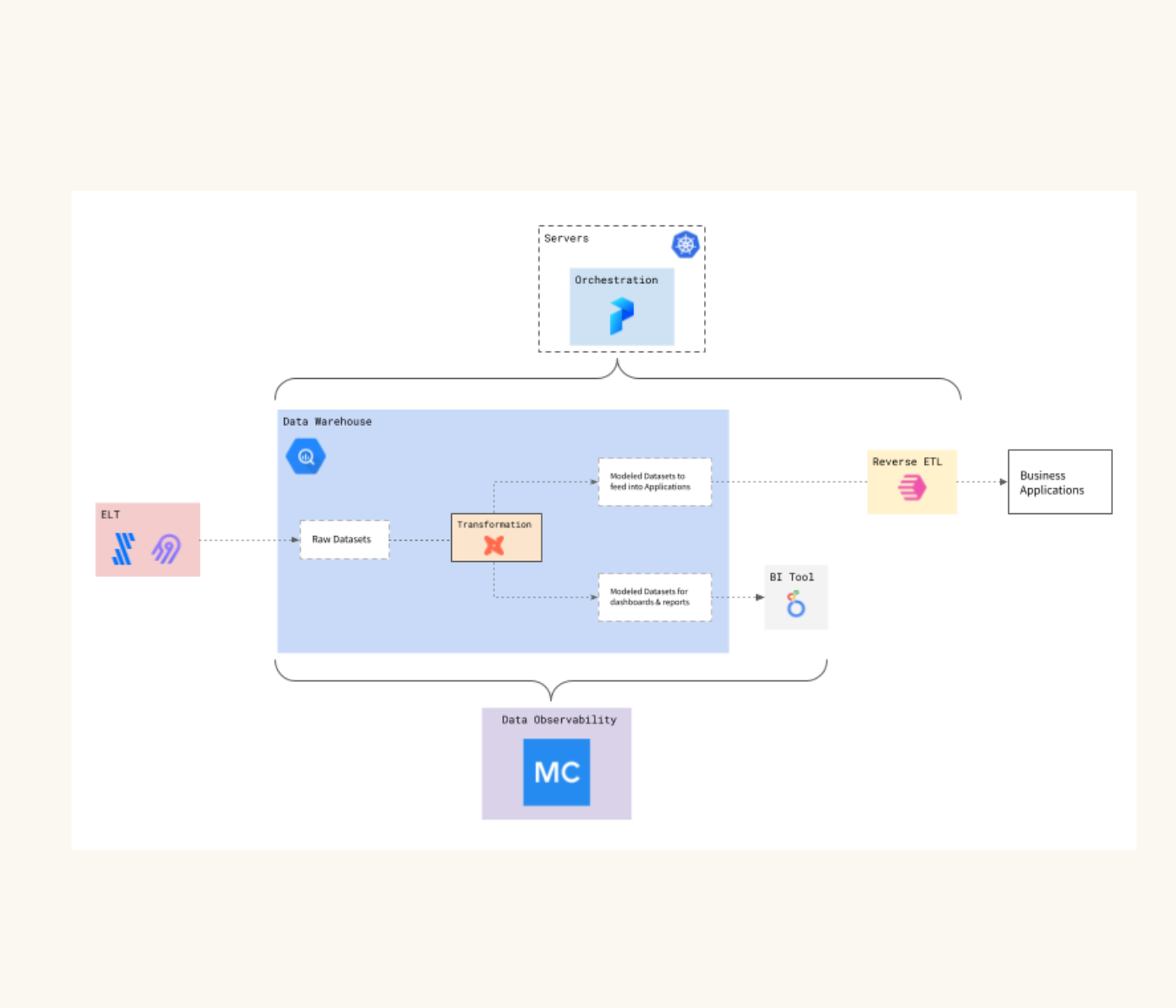

Data observability can help.

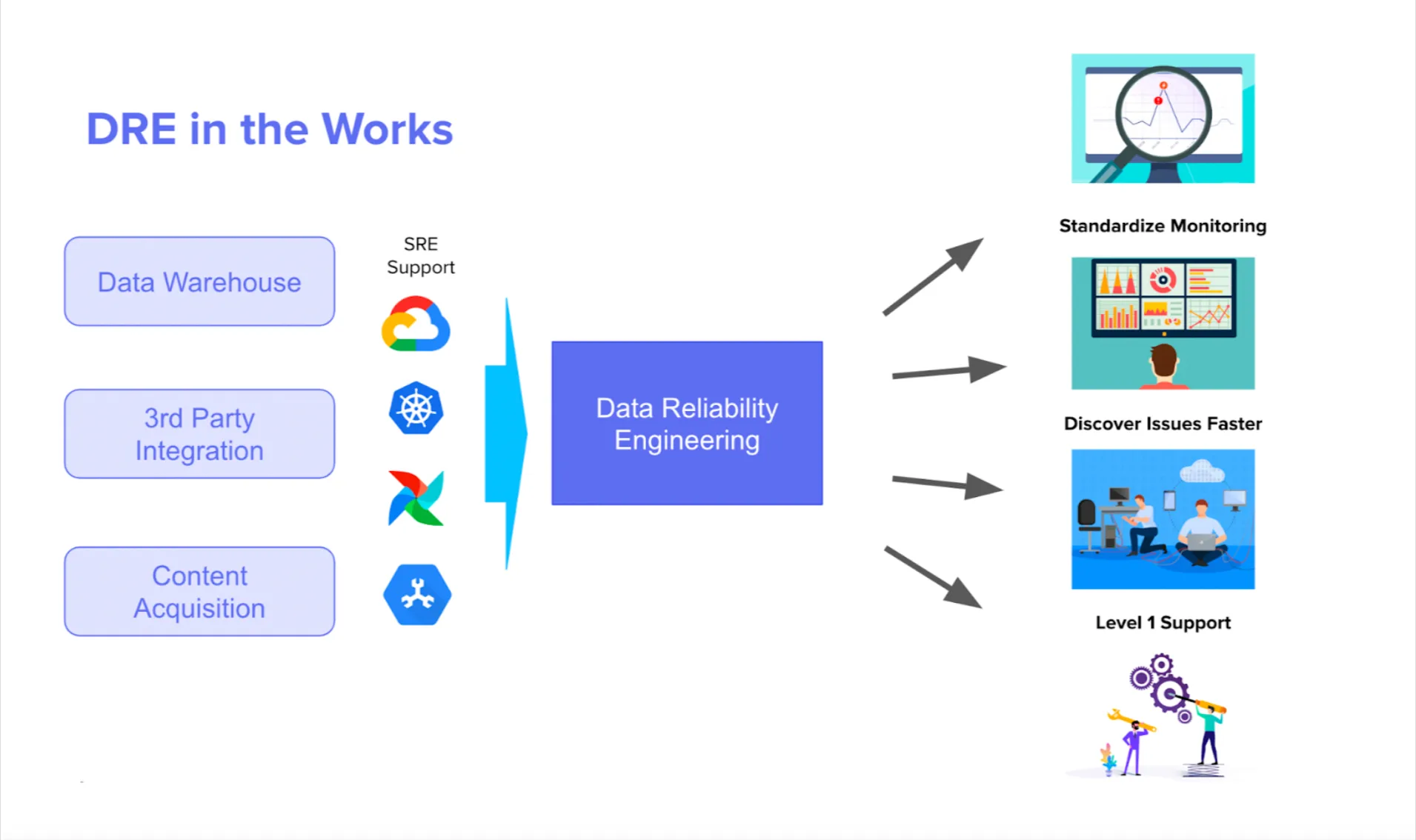

Automated tools, like Monte Carlo’s data observability, can be a far more effective way to ensure that each of these data quality dimensions is met. When anomalies or data issues do occur, a data observability solution can alert stakeholders to the exact root cause of the issue – wherever and whatever it is – so teams can detect and resolve it quickly.

To learn more about how data observability can better enable teams to meet the data quality dimensions that build reliable data, speak to our team.
