Iceberg, Right Ahead! 7 Apache Iceberg Best Practices for Smooth Data Sailing

If you don’t know Apache Iceberg, you might find yourself skating on thin ice.

Initially developed by Netflix and later donated to the Apache Software Foundation, Apache Iceberg is an open-source table format for large analytic datasets. It’s designed to address the performance and usability problems of older table formats such as the Apache Hive table format, while still storing data in file formats such as Apache Parquet.

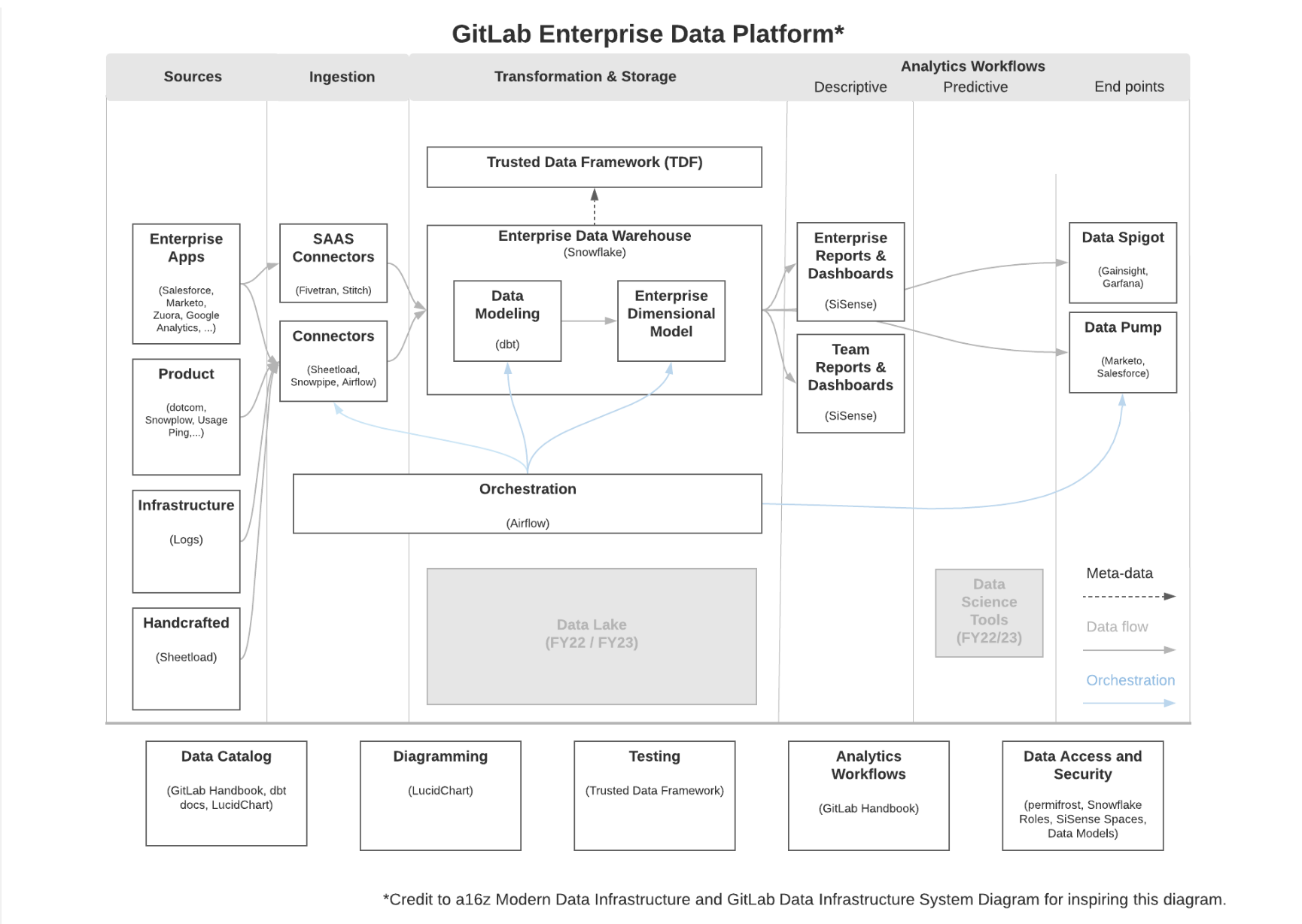

What makes Iceberg tables so appealing is that they can store raw data at scale to support typical data lake use cases, while also offering data lakehouse properties such as well-organized metadata, ACID transactions, and time travel. Iceberg tables are also highly extensible: they support multiple file formats (Parquet, Avro, ORC) and are compatible with multiple query engines (Snowflake, Trino, Spark, Flink).

They can be used as external tables to meet data residency requirements within a modern data stack as Snowflake recommends, or they can be the foundation of an organization’s data platform, either self-managed or by using a platform such as Tabular.

As more organizations recognize the value of Iceberg and adopt it for their data management needs, it’s crucial to stay ahead of the curve and master the best practices for harnessing its full potential.

1. Choose the right partitioning strategy

Partitioning can significantly improve query performance by reducing the amount of data scanned. Partition your tables based on the access patterns and query requirements of your data.

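Columns that your queries filter on frequently, and whose values are fairly evenly distributed, are often good candidates for partitioning. Very high-cardinality columns (such as IDs) create too many small partitions when used directly, so they are usually better handled with a transform such as bucketing. Conversely, columns with very low cardinality or a heavily skewed distribution can pile most of the data into a few oversized partitions.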
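A quick way to sanity-check a candidate partition column is to profile a sample of its values: the number of distinct values approximates how many partitions an identity partition would create, and the ratio of the largest partition to the average one shows how skewed the layout would be. The sketch below runs over an in-memory sample; the function name and the metrics it returns are our own illustration, not part of any Iceberg API.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence


def partition_profile(values: Sequence[Hashable]) -> Dict[str, float]:
    """Profile a candidate partition column from a sample of its values.

    Returns the number of partitions an identity partition would create,
    the row count of the largest one, and a skew ratio
    (largest partition / average partition; 1.0 means perfectly even).
    """
    if not values:
        raise ValueError("need at least one sampled value")
    counts = Counter(values)
    partitions = len(counts)
    largest = max(counts.values())
    average = len(values) / partitions
    return {"partitions": partitions, "largest": largest, "skew": largest / average}


# Example: a region column where one value dominates the sample.
sample = ["us"] * 8 + ["eu", "apac"]
print(partition_profile(sample))
```

A very large partition count or a skew ratio far above 1 is a hint to use a coarser transform, for example a day instead of a raw timestamp, or a bucket instead of a raw ID.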

In cases where a single partition column does not adequately distribute the data, consider combining it with bucketing. In Iceberg, bucketing is itself a partition transform: bucket(N, column) hashes a column’s values into N buckets, spreading rows evenly across files within each partition and improving query performance and storage efficiency.
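To see why bucketing spreads data evenly, here is an illustrative re-implementation of the bucket transform as we understand the Iceberg table spec to describe it: hash the value with 32-bit Murmur3 (ints and longs as 8-byte little-endian longs, strings as UTF-8 bytes), clear the sign bit, and take the result modulo N. Treat this as a sketch and cross-check it against the test values in the spec’s appendix before relying on it; in practice you simply declare something like bucket(16, customer_id) in the partition spec and Iceberg computes buckets for you.

```python
import struct
from collections import Counter

MASK32 = 0xFFFFFFFF


def murmur3_32(data: bytes, seed: int = 0) -> int:
    """32-bit MurmurHash3 (x86 variant), returned as an unsigned int."""
    c1, c2 = 0xCC9E2D51, 0x1B873593

    def mix_k(k: int) -> int:
        k = (k * c1) & MASK32
        k = ((k << 15) | (k >> 17)) & MASK32  # rotate left by 15
        return (k * c2) & MASK32

    h = seed & MASK32
    n_blocks = len(data) // 4
    # Body: mix in each 4-byte little-endian block.
    for i in range(n_blocks):
        h ^= mix_k(int.from_bytes(data[4 * i : 4 * i + 4], "little"))
        h = ((h << 13) | (h >> 19)) & MASK32  # rotate left by 13
        h = (h * 5 + 0xE6546B64) & MASK32

    # Tail: up to 3 leftover bytes, assembled little-endian.
    tail = data[4 * n_blocks :]
    if tail:
        k = 0
        for j, byte in enumerate(tail):
            k |= byte << (8 * j)
        h ^= mix_k(k)

    # Finalization: fold in the length and avalanche the bits.
    h ^= len(data)
    h ^= h >> 16
    h = (h * 0x85EBCA6B) & MASK32
    h ^= h >> 13
    h = (h * 0xC2B2AE35) & MASK32
    h ^= h >> 16
    return h


def bucket_long(value: int, num_buckets: int) -> int:
    """Bucket an int/long: hash its 8-byte little-endian form, drop the sign bit, mod N."""
    return (murmur3_32(struct.pack("<q", value)) & 0x7FFFFFFF) % num_buckets


def bucket_string(value: str, num_buckets: int) -> int:
    """Bucket a string: hash its UTF-8 bytes, drop the sign bit, mod N."""
    return (murmur3_32(value.encode("utf-8")) & 0x7FFFFFFF) % num_buckets


# Sequential IDs land in roughly equal-sized buckets.
sizes = Counter(bucket_long(customer_id, 16) for customer_id in range(10_000))
print(sorted(sizes.items()))
```

Because the hash scrambles neighbouring values, even monotonically increasing IDs are spread across all buckets rather than clustering in one.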

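Iceberg also supports hidden partitioning, which keeps the partitioning scheme abstracted away from users. Iceberg derives partition values from source columns using transforms (for example, the day of a timestamp), so users filter on the original column and Iceberg prunes partitions automatically. Users don’t need to know the partitioning scheme to write efficient queries, which reduces the risk of errors such as filtering on the wrong column and scanning the entire table.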
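The core idea behind hidden partitioning can be sketched in a few lines: a day-style transform maps each timestamp to days since the Unix epoch, and a range filter on the timestamp is converted into a range of partition values used to skip files. This is a simplified model, not Iceberg’s query planner; the file list and its partition_day field are hypothetical stand-ins for manifest entries, and the functions expect timezone-aware datetimes.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def day_transform(ts: datetime) -> int:
    """Days since 1970-01-01 UTC, the value a day-partitioned file would record.

    timedelta.days floors, so timestamps before the epoch map to negative days.
    """
    return (ts - EPOCH).days


def prune_files(files: List[Dict], start: datetime, end: datetime) -> List[str]:
    """Keep only files whose partition day could contain rows with start <= ts < end.

    The caller only supplies a timestamp range; the partition range is derived
    here, so the user never has to reference the partition layout.
    """
    first_day = day_transform(start)
    # end is exclusive; step back one microsecond (timestamp precision) first.
    last_day = day_transform(end - timedelta(microseconds=1))
    return [f["path"] for f in files if first_day <= f["partition_day"] <= last_day]


utc = timezone.utc
files = [
    {"path": f"data/file-{d}.parquet", "partition_day": day_transform(datetime(2024, 3, d, tzinfo=utc))}
    for d in (1, 2, 3)
]
# Query: 2024-03-02 10:00 <= ts < 2024-03-03 00:00
print(prune_files(files, datetime(2024, 3, 2, 10, tzinfo=utc), datetime(2024, 3, 3, tzinfo=utc)))
# ['data/file-2.parquet']
```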

Remember to regularly monitor the performance of your partitioning strategy and adjust it as needed.

2. Leverage schema evolution

One of Iceberg’s strengths is that it handles schema changes more seamlessly than older table formats. Use this to add, drop, rename, reorder, or widen the types of columns as needed, without rewriting existing data files or performing manual data migrations.

Here’s an example to illustrate this concept:

Suppose you have a table called “sales” with the following schema:

1: order_id (long)
2: customer_id (long)
3: order_date (date)
4: product_id (long)
5: quantity (int)

Each column in the schema has a unique ID (1 to 5). Now, let’s say you want to update the schema by renaming the “customer_id” column to “client_id” and moving it to the last position. The new schema would look like this:

1: order_id (long)
3: order_date (date)
4: product_id (long)
5: quantity (int)
2: client_id (long)

Notice that even though the column’s name and position have changed, its unique ID (2) remains the same. Because Iceberg tracks columns by ID rather than by name or position, existing data files don’t need to be rewritten, and values written under the old name are still read as “client_id”. Schema changes can still create challenges downstream in the data pipeline, however, and may require data observability or other data monitoring to catch data quality issues.
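The toy model below shows why ID-based tracking makes the rename and reorder free: stored rows are keyed by field ID, so reading them through a newer schema simply maps IDs to the current names, and a newly added column (with a fresh ID) reads as null for old rows. The Field class and helper functions are hypothetical illustrations, not Iceberg’s API. With Iceberg’s Spark SQL support, the same change would be written as ALTER TABLE sales RENAME COLUMN customer_id TO client_id followed by ALTER TABLE sales ALTER COLUMN client_id AFTER quantity.

```python
from dataclasses import dataclass
from typing import Any, Dict, List


@dataclass(frozen=True)
class Field:
    field_id: int
    name: str
    type: str


SALES_V1 = [
    Field(1, "order_id", "long"),
    Field(2, "customer_id", "long"),
    Field(3, "order_date", "date"),
    Field(4, "product_id", "long"),
    Field(5, "quantity", "int"),
]


def rename_and_move_last(schema: List[Field], old_name: str, new_name: str) -> List[Field]:
    """Rename a column and move it to the end, keeping its field ID unchanged."""
    matches = [f for f in schema if f.name == old_name]
    if not matches:
        raise KeyError(old_name)
    target = matches[0]
    others = [f for f in schema if f.field_id != target.field_id]
    return others + [Field(target.field_id, new_name, target.type)]


def read_row(stored: Dict[int, Any], schema: List[Field]) -> Dict[str, Any]:
    """Project a stored row (keyed by field ID) onto a schema.

    Columns the row was written without come back as None.
    """
    return {f.name: stored.get(f.field_id) for f in schema}


SALES_V2 = rename_and_move_last(SALES_V1, "customer_id", "client_id")
SALES_V3 = SALES_V2 + [Field(6, "discount", "double")]  # new column gets a fresh ID

# A row written under the original schema, stored by field ID.
old_row = {1: 1001, 2: 42, 3: "2023-05-01", 4: 7, 5: 3}
print(read_row(old_row, SALES_V3))
# {'order_id': 1001, 'order_date': '2023-05-01', 'product_id': 7, 'quantity': 3, 'client_id': 42, 'discount': None}
```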

3. Optimize file sizes

Aim for a balance between too many small files and too few very large files. Many small files add metadata and per-file overhead to every query plan and scan, while very large files reduce read parallelism and make file-level pruning less effective. Iceberg supports data compaction, which merges small files into larger ones and helps keep file sizes in a healthy range.
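In Spark, compaction is typically run with Iceberg’s rewrite_data_files procedure, whose default bin-pack strategy groups small files toward a target size (taken from the write.target-file-size-bytes table property, 512 MB by default). The sketch below is a simplified first-fit-decreasing planner that illustrates the bin-packing idea; it is not Iceberg’s actual implementation, and the 384 MB small-file threshold is an arbitrary assumption.

```python
from typing import List

MB = 1024 * 1024


def plan_compaction(file_sizes: List[int], target_size: int, small_threshold: int) -> List[List[int]]:
    """Group small files into rewrite groups of at most target_size bytes.

    First-fit decreasing: sort small files largest-first and place each in the
    first group with room, opening a new group when none fits. Groups holding a
    single file are dropped, since rewriting one file alone merges nothing.
    """
    small = sorted((s for s in file_sizes if s < small_threshold), reverse=True)
    groups: List[List[int]] = []
    totals: List[int] = []
    for size in small:
        for i, total in enumerate(totals):
            if total + size <= target_size:
                groups[i].append(size)
                totals[i] += size
                break
        else:
            groups.append([size])
            totals.append(size)
    return [g for g in groups if len(g) > 1]


sizes = [100 * MB, 200 * MB, 300 * MB, 50 * MB, 600 * MB]
plan = plan_compaction(sizes, target_size=512 * MB, small_threshold=384 * MB)
print([[s // MB for s in group] for group in plan])
# [[300, 200], [100, 50]]
```

The 600 MB file is already above the threshold and is left alone, while the four small files collapse into two rewrites.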

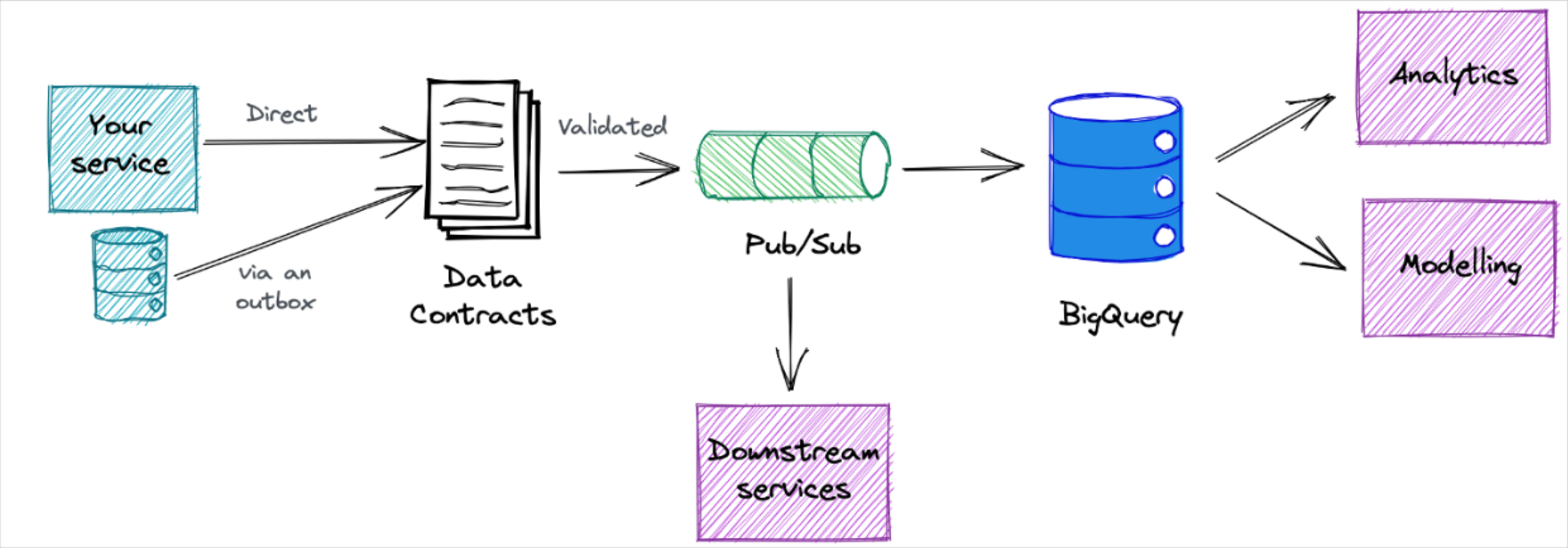

4. Use incremental processing

Iceberg supports incremental processing, in other words reading only the data that has changed between two snapshots. Take advantage of this feature for more efficient data processing, especially for streaming data. See the docs page Structured Streaming for how to do incremental processing with Spark or this Alibaba guide on how to do the same with Flink.
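Incremental reads work because each Iceberg snapshot records its parent and the files it added. For batch jobs, Spark exposes this through the start-snapshot-id (exclusive) and end-snapshot-id (inclusive) read options. The sketch below models that walk over a hypothetical in-memory snapshot history; it only collects files from append snapshots and ignores every other operation, so it covers append-only tables and is not a substitute for Iceberg’s own implementation.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Snapshot:
    snapshot_id: int
    parent_id: Optional[int]
    operation: str  # e.g. "append", "replace", "overwrite", "delete"
    added_files: List[str] = field(default_factory=list)


def appended_files_between(snapshots: Dict[int, Snapshot], start_id: int, end_id: int) -> List[str]:
    """Files appended after start_id (exclusive) up to end_id (inclusive), oldest first.

    Walks parent pointers back from end_id. This sketch keeps only "append"
    snapshots; a compaction ("replace") adds no new rows, and overwrites or
    deletes are not handled at all.
    """
    collected: List[List[str]] = []
    current: Optional[int] = end_id
    while current != start_id:
        if current is None:
            raise ValueError(f"snapshot {start_id} is not an ancestor of {end_id}")
        snap = snapshots[current]
        if snap.operation == "append":
            collected.append(snap.added_files)
        current = snap.parent_id
    # Collected newest-first during the walk; flip to chronological order.
    return [path for files in reversed(collected) for path in files]


history = {
    1: Snapshot(1, None, "append", ["a.parquet"]),
    2: Snapshot(2, 1, "append", ["b.parquet"]),
    3: Snapshot(3, 2, "replace", ["ab-compacted.parquet"]),
    4: Snapshot(4, 3, "append", ["c.parquet"]),
}
print(appended_files_between(history, start_id=1, end_id=4))
# ['b.parquet', 'c.parquet']
```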

5. Integrate with compatible query engines and other solutions

Query engines enable you to process and analyze data stored in Iceberg tables. They translate queries into operations that can be performed on the data managed by Iceberg. Popular query engines include Apache Spark, Snowflake, and Trino.
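Engines reach Iceberg tables through a catalog. As one example, the helper below builds the Spark properties that register a file-system (Hadoop) Iceberg catalog and enable Iceberg’s SQL extensions, then renders them as --conf flags. The catalog name demo and the warehouse path are hypothetical, other catalog types (Hive, REST, and so on) need different properties, and you would also need the iceberg-spark-runtime package matching your Spark and Scala versions on the classpath.

```python
from typing import Dict, List


def iceberg_spark_conf(catalog: str, warehouse: str) -> Dict[str, str]:
    """Spark properties that register an Iceberg catalog named `catalog`.

    Uses the Hadoop (file-system) catalog type for simplicity.
    """
    prefix = f"spark.sql.catalog.{catalog}"
    return {
        # Enables Iceberg's SQL extensions, such as CALL procedures.
        "spark.sql.extensions": "org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions",
        prefix: "org.apache.iceberg.spark.SparkCatalog",
        f"{prefix}.type": "hadoop",
        f"{prefix}.warehouse": warehouse,
    }


def to_spark_submit_args(conf: Dict[str, str]) -> List[str]:
    """Render properties as --conf flags for spark-submit or spark-sql."""
    args: List[str] = []
    for key, value in conf.items():
        args += ["--conf", f"{key}={value}"]
    return args


conf = iceberg_spark_conf("demo", "/tmp/iceberg-warehouse")
print(" ".join(to_spark_submit_args(conf)))
```

Tables registered this way are then addressed as demo.db.table_name in Spark SQL.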

You will also want to investigate supporting capabilities as you build out your modern data stack. There may be integration challenges given that Apache Iceberg is a relatively new format and your storage and compute will be less tightly coupled. For example, Monte Carlo can monitor Apache Iceberg tables for data quality incidents, whereas other data observability platforms may be more limited.

6. Monitor and maintain table metadata

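Keep an eye on table metadata growth, especially for tables with frequent updates or schema changes. Every commit writes a new metadata file and every data change adds a snapshot, so regularly clean up by expiring old snapshots, removing orphan files, and rewriting manifests. Keep in mind that expiring a snapshot also removes the ability to time travel to it, so set retention to match how far back you need to query.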
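Iceberg’s Spark expire_snapshots procedure takes, among other arguments, an older_than timestamp and a retain_last count. The sketch below models how those two knobs interact on a simple linear history: a snapshot is removed only if it is older than the cutoff and not among the most recent retain_last snapshots. It is a hypothetical illustration; the real procedure also accounts for branches and tags and deletes the data and metadata files that become unreachable. Old metadata.json files are handled separately, via table properties such as write.metadata.delete-after-commit.enabled and write.metadata.previous-versions-max.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List


@dataclass(frozen=True)
class SnapshotInfo:
    snapshot_id: int
    committed_at: datetime


def snapshots_to_expire(snapshots: List[SnapshotInfo], older_than: datetime, retain_last: int = 1) -> List[int]:
    """IDs of snapshots an expiration run would remove, oldest first.

    A snapshot is expired only if it was committed before `older_than` AND it
    is not among the `retain_last` most recent snapshots. Expired snapshots can
    no longer be used for time travel.
    """
    if retain_last < 1:
        raise ValueError("retain_last must be at least 1 so the current snapshot survives")
    newest_first = sorted(snapshots, key=lambda s: s.committed_at, reverse=True)
    protected = {s.snapshot_id for s in newest_first[:retain_last]}
    return [
        s.snapshot_id
        for s in reversed(newest_first)
        if s.committed_at < older_than and s.snapshot_id not in protected
    ]


start = datetime(2024, 1, 1, tzinfo=timezone.utc)
history = [SnapshotInfo(i, start + timedelta(days=i - 1)) for i in range(1, 6)]  # one per day
print(snapshots_to_expire(history, older_than=datetime(2024, 1, 4, tzinfo=timezone.utc), retain_last=3))
# [1, 2]
```

Snapshot 3 is older than the cutoff but survives because retain_last=3 protects the three newest snapshots.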

7. Stay up-to-date with Iceberg releases

As an actively maintained project, Iceberg continues to evolve and improve. Keep up with new releases and incorporate the latest features, bug fixes, and performance improvements in your deployment.

With these seven best practices up your sleeve, your team will see you as smooth as ice and as cool as the Arctic.

Want to know more about Monte Carlo’s support for Apache Iceberg? Talk to us.
