5 Examples of Bad Data Quality in Business — And How to Avoid Them

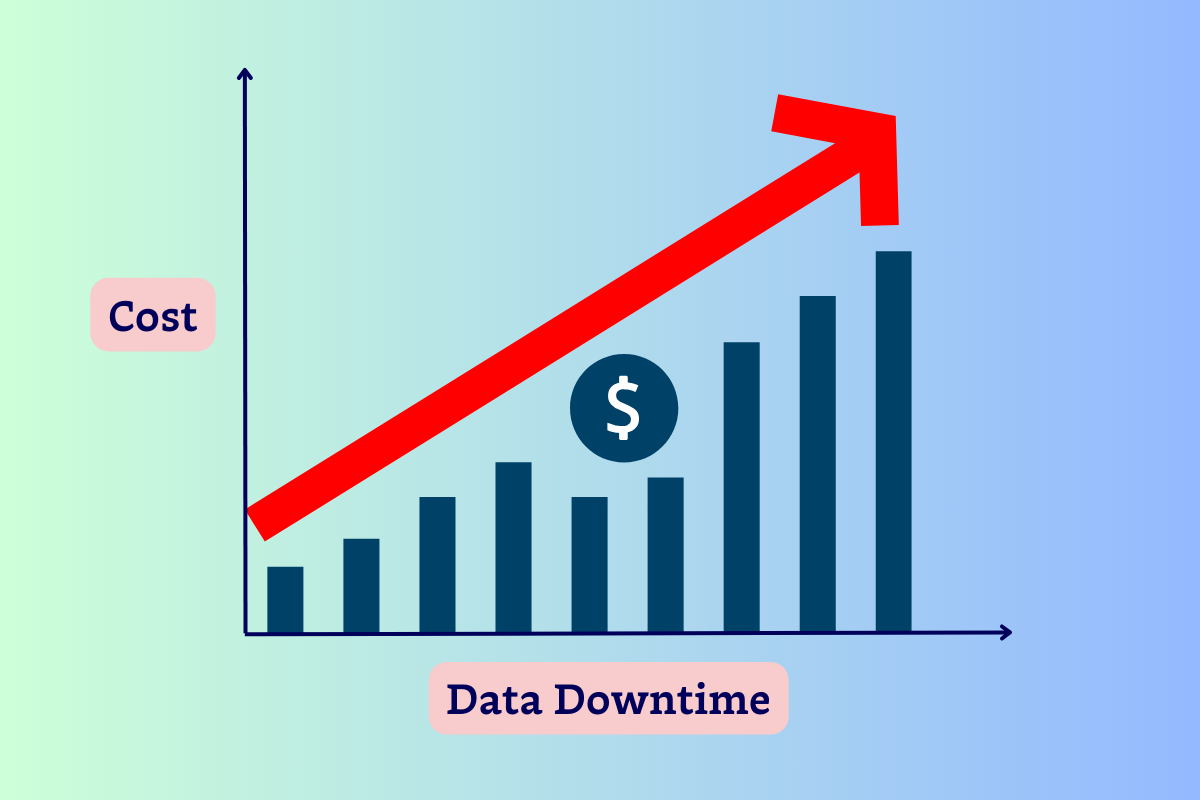

Bad data quality refers to moments when data is inaccurate, missing, or otherwise unreliable, leading to what is termed as data downtime, which can adversely impact business operations and decision-making.

According to our annual survey, incidents of data downtime nearly doubled year over year. This is likely driven by the finding that time-to-resolution for data quality issues increased by 166%.

But when data downtime occurs, what does that actually mean for organizations? What does a data quality incident look like, and what are the business outcomes?

We’re glad you asked. We’ve compiled five of the most egregious examples of bad data quality in recent years: covering what happened, what the business impacts were, and how leaders responded.

And, since we’re optimistic about the potential for heading off data issues before they cause this kind of headline-generating damage, we’ve also provided three best practices to help your team improve data quality across the organization.

But first, the schadenfreude.

Table of Contents

1. Unity Technologies’ $110M Ad Targeting Error

Unity Technologies, notable for its popular real-time 3-D content platform, experienced a significant data quality incident in Q1 2022. Their Audience Pinpoint tool, designed to aid game developers in targeted player acquisition and advertising, ingested bad data from a large customer — causing major inaccuracies in the training sets for its predictive ML algorithms, and a subsequent dip in performance.

As a result, Unity’s revenue-sharing model took a direct hit, leading to a loss of approximately $110 million. According to CEO John Riccitello, that figure included:

- The direct impact on revenue

- Costs associated with recovery to rebuild and retrain models

- Delayed launches of new revenue-driving features due to the prioritization of fixing the data quality issues

Ultimately, Unity shares dropped by 37% and the company saw press coverage about investors “losing faith” in the company’s strategy (and the CEO). Riccitello pledged to repair the situation, telling shareholders, “We are deploying monitoring, alerting and recovery systems and processes to promptly mitigate future complex data issues.”

In other words, Unity’s leadership understood they needed to level up their focus on data quality. Especially when advertising campaigns are involved, it’s crucial for organizations to have trustworthy, reliable data to drive successful bidding strategies and performance over time.

2. Equifax’s Ongoing Inaccurate Credit Score Fiasco

Between March 17 and April 6, 2022, Equifax issued inaccurate credit scores for millions of consumers applying for auto loans, mortgages, and credit cards to major lenders like JPMorgan Chase and Wells Fargo. For over 300,000 individuals, the credit scores were off by 20 points or more — enough to affect interest rates or lead to rejected loan applications.

Equifax leaders blamed the inaccurate reporting on a “coding issue” within a legacy on-premises server. One source told NationalMortgageProfessional.com that certain attribute values, such as the “number of inquiries within one month” or the “age of the oldest tradeline”, were incorrect.

In the aftermath of an exposé by the Wall Street Journal, Equifax stock prices fell by about 5%. Days later, Equifax was hit with a class-action lawsuit, led by a Florida resident who was denied an auto loan after Equifax told the lender her credit score was 130 points lower than they should have.

And Equifax didn’t exactly have public trust or goodwill to spare. Just in 2017, Equifax had agreed to a $700 million settlement after being charged with negligent security practices that led to a massive data breach leaking the birth dates, addresses, and Social Security numbers of nearly 150 million consumers — at the time, the largest settlement ever paid for a data breach.

The company’s public statement detailed their response: “…we are accelerating the migration of this environment to the Equifax Cloud, which will provide additional controls and monitoring that will help to detect and prevent similar issues in the future.”

Data quality is important for every business — but when financial decisions are being made, it’s absolutely vital for information to be reliable, accurate, and secure. We’ll have to wait until the class action lawsuit is settled to learn how much three weeks of data downtime really cost Equifax.

3. Uber’s $45M Driver Payment Miscalculation

In 2017, reports emerged that Uber had been miscalculating its commission and costing New York drivers a percentage of their rightful earnings. Instead of calculating its commission based on its net fare, minus sales tax and other fees, Uber took their cut based on the gross fare. This meant that for two and a half years, the company took 2.6% more from drivers than its own terms and conditions allowed.

Uber leaders reported they were paying back those earnings, plus 9% annual interest, to every driver impacted — an average payment of around $900 each. Given that “tens of thousands” of drivers were underpaid, the Wall Street Journal estimated the cost would be at least $45 million.

Similar to Equifax, Uber was already dealing with dissatisfied drivers at the time this incident became public. In 2017 alone, they settled for $20 million with the FTC for overinflating estimated driver earnings, and their then-CEO was caught on a dashcam video in an unflattering argument with his own Uber driver about decreasing earnings.

In this particular case, Uber’s data wasn’t inaccurate in and of itself, but the company was basing its calculations on the wrong numbers altogether. It’s crucial for all teams to maintain transparency and visibility into data in order to maintain fair business practices — and avoid costly, public embarrassments.

4. Samsung’s $105B “Fat Finger” Data Entry Error

In April 2018, Samsung Securities (the Samsung corporation’s stock trading arm), accidentally distributed shares worth around $105 billion to employees — 30 times more shares than the actual number of total, outstanding shares.

The mishap occurred when an employee made a simple mistake: instead of paying out a dividend worth 2.8 billion won (around $2.1 million USD), they mixed up their keystrokes and entered “shares” instead of “won” into a computer. As a result, 2.8 billion shares (worth $105 billion USD) were issued to employees in a stock ownership plan.

It took 37 minutes for the firm to realize what happened and stop those employees from selling off that mistaken “ghost stock”. But during that window, 16 employees sold five million shares worth around $187 million, just after receiving them.

There are a lot of big numbers here, but the real business costs for Samsung Securities included:

- Stock shares dropped nearly 12%, wiping out around $300 million of its market value

- Lost relationships with major customers, including South Korea’s largest pension fund, due to “concerns of poor safety measures”

- Barred by financial regulators from taking new clients for six months

- Co-CEO Koo Sung-hoon resigned

Human errors will happen. But especially in the financial sector, when investor and partner confidence is at stake, organizations need to have measures in place to detect and mitigate large-scale mistakes in real-time.

5. Public Health England’s Unreported COVID-19 Cases

In the fall of 2020, the UK was experiencing a surge of COVID-19 cases and relying on contact tracers to notify individuals who had been exposed to the virus. But between September 25th and October 2nd, 15,841 positive cases were missing altogether from the daily reports compiled by Public Health England.

It happened due to a “technical error” — essentially, PHE developers were using the outdated XLS file format for their Excel files, instead of the updated XLSX version. Given that old format, the report templates compiled by PHE were only capable of holding around 65,000 rows of data — or around 1,400 cases. But the CSV files that other firms were submitting to PHE for consolidation and reporting included far more data. That meant that thousands of covid cases were missing altogether from the reports PHE compiled, published as the official case counts, and shared with contact tracers.

The ultimate cost of this missing data? More than 50,000 potentially infectious people may have gone about their daily lives, overlooked by contact tracers and totally unaware they had been exposed to COVID-19. During this period of time, prior to vaccines and effective treatments, that’s a potentially deadly mistake.

At the time of the incident, PHE already had plans underway to replace the “legacy software” and upgrade to data analytics tools that were better suited for the job than Excel. But this very unfortunate case study in missing data demonstrates how critically important it is for data to be accurate in healthcare decision-making and crisis management.

How businesses can prevent data downtime

Millions of dollars, leadership positions, public trust, lives and livelihoods: these bad data quality examples just go to show all the ways that data downtime can impact the overall health of your business.

The good news is that organizations can take a proactive approach to minimize data quality issues — and react much more quickly when they do occur. Here are a few best practices your data team can begin to implement to get ahead of data downtime.

Testing for data quality

Data testing helps teams validate assumptions about data, systematically verifying data accuracy, completeness, and consistency. While data testing won’t cover every scenario, it will help you catch specific, known problems that can occur in your data pipelines.

A few common types of data quality testing include:

- Unit testing: Automated tests that can detect whether the data coming through your pipeline looks as expected. Unit tests can evaluate data freshness, detect null values, and check that all values in a column fall within a certain range.

- Integration testing: A testing technique that verifies data from diverse sources is loaded, integrated, and transformed according to set business rules.

Testing your data early and often helps detect common quality issues before they have the chance to impact downstream data consumers or products.

Data governance for structured data management

Data governance frameworks help teams standardize and document policies around how data should be managed, used, and protected within an organization. By outlining important practices like data ownership and accountability, data governance answers questions around who can access data, where it’s stored, how it’s collected and processed, and when it should be retired.

Data governance can also include data quality standards that define benchmarks for accuracy, completeness, and consistency. By aligning all teams to agree on what data quality looks like, you can continue working to ensure all data meets those standards.

Data observability to ensure real-time reliability

Data testing and data governance are important, proactive measures organizations should take to minimize the impact of data downtime for known, pre-defined data quality issues. But to gain visibility into the end-to-end health of your data pipelines for both known and unknown issues, data observability provides the most comprehensive and scalable solution.

Data observability offers automated monitoring and tracking of data quality across the entire data stack, alerting teams when issues occur — such as data that’s inaccurate, erroneous, stale, or missing altogether. Good observability tooling also includes data lineage that helps teams troubleshoot and respond to incidents faster, pinpointing exactly what went wrong and which downstream data assets may be compromised.

By identifying when anomalies and deviations from expected data patterns occur in real-time, businesses can avoid the potentially enormous costs of data quality incidents that would otherwise go undetected for days, weeks, or even months.

Prioritizing data quality for the success of your business

Organizations today rely on data for decision-making more than ever before. We’ve just described how bad data quality around advertising campaigns, financial products, user payments, employee stocks, and public health reporting cost businesses millions of dollars and damaged professional and personal reputations for years to come.

But disastrous data quality issues are not unavoidable. Forward-thinking teams have adopted data testing, data governance, and data observability solutions to help prevent data downtime and improve trust in data throughout their organizations.

Ready to get started on your data quality journey? See exactly how observability comes to life with a free walkthrough of the Monte Carlo platform.

Our promise: we will show you the product.

Product demo.

Product demo.  What is data observability?

What is data observability?  What is a data mesh--and how not to mesh it up

What is a data mesh--and how not to mesh it up  The ULTIMATE Guide To Data Lineage

The ULTIMATE Guide To Data Lineage