Data Optimization Tips From 7 Experienced Data Leaders

It’s not enough for data teams to be magicians transforming raw data into business value; they need to be responsible (and cost-conscious) stewards as well. Unfortunately, data optimization is far from an exact science.

As with much of the B2B SaaS universe, data platform costs are based on usage. However, infrastructure, and particularly data infrastructure, can be highly elastic, meaning costs can scale down and up (and let’s face it, it’s mostly up) dramatically if not carefully monitored.

While the main drivers of cost are understood (compute, storage, I/O), many best practices are still being formed. We have already predicted that data teams would spend 30 percent more time on data platform cost optimization initiatives in 2023 and provided thoughts on 3 Snowflake cost optimization strategies that aren’t too crazy, but wanted to get additional insights across multiple platforms from some of data’s top leaders. We got some great answers from:

- Tom Milner, Data Engineering Manager, Tenable

- Joel Tone, Data Engineer, Auvik Networks

- Matheus Espanhol, Data Engineering Manager, BairesDev

- Mitchell Posluns, Analytics Manager, Assurance IQ

- Scott Croushore, Data Governance Manager, BestEgg

- Tushar Bhasin, Senior Data Engineer, BlaBlaCar

- Stijn Zanders, Manager Data Engineering, Aiven

- Barr Moses, co-founder and CEO, Monte Carlo

From there we extracted, JOINed, and will now present these data optimization insights for you below.

Cut costs, not business value

The most frequently shared insight across our expert panel was that data teams need to be careful not to cut costs just for the sake of cutting costs. These data optimization initiatives need to be executed with an understanding of the overall business strategy.

“Cutting costs for the sake of cutting costs I believe is the wrong approach. Each cost needs to be understood,” said Scott at BestEgg. “Our approach is to ask: why do we spend this? Is it necessary? What are the benefits? Eliminating wasteful spend is more important than just blanket ‘cutting cost’ directives. Optimization should be the focus.”

One way to properly contextualize a data platform cost optimization initiative is to consider and weigh the potential tradeoffs based on your SLAs.

“[Cost cutting] is always a consideration, but it is a matter of business need and what they are willing to pay for. For example, if we said we could cut costs 50% but it would also mean our dashboards would be 10x slower, there is a good chance they would say forget it and look for cost cutting measures elsewhere. It’s like choosing flying over driving – sometimes it just makes sense to pay more to get to your destination faster,” said Mitchell at Assurance.

But perhaps the best way to understand the business context of different data platform costs is to talk to the business. That’s because business value isn’t entirely an objective measure, but rather more of a subjective execution of strategy based on what the business values.

Joel at Auvik cited the line from The Art of Business Value by Mark Schwartz, “…Understand that there is no magic formula for business value that only the business people know. Ultimately, it turns out that business value is what the business values, and that is that.”

For example, is the business in a mature market and optimizing for profitability or is it seeking to expand its market share with aggressive growth? Nothing is free, and data optimization initiatives come with an opportunity cost.

“Depending on where the organization is at, sometimes cutting costs is top priority. Other times getting results is [much] more important than [cutting] costs,” said Joel.

“The importance of cutting costs is totally dependent [on] the business strategy,” said Matheus at BairesDev. “…The data infrastructure team needs to be aware if it’s time to scale the business as fast as possible…or if it’s time to optimize costs. Optimizing cloud processes takes time from the team and the company needs to find the balance.”

There is more low hanging fruit than you think

The good news is that most data teams won’t typically have to make a devil’s bargain between cost and value. According to our panel, there is more than enough fat to be cut when optimizing data platform costs. That’s partially because good data platform architecture tends to be a cost optimized architecture.

“Costs and architecture often run in parallel,” said Tom at Tenable. “A good architecture will be cost-efficient and keeping an eye on costs can improve performance and latency of data.”

Most data optimization strategies will not be cutting entire workloads that could have an important business use case, but rather creating a better structure to what is already running.

“We’ve been able to cut costs by reducing the test scope on big tables, materializing certain dashboard queries, adding clustering/partitioning, and adding incremental loads to tables. It’s quite easy to fix it once you know the problem,” said Stijn.

Of course, that caveat of “once you know the problem” is key, but more on that later.

Get granular

Once you’ve decided that you should optimize your data platform costs and that it’s possible to do so without impacting business value, the next question is where to get started. The answer, according to our expert panel, is to start breaking down and categorizing your costs.

“The first thing in cutting costs is figuring out where the costs are coming from. The level of granularity depends on what’s needed, but even a rough breakdown by system is a starting point,” said Joel at Auvik.

Typically, the data warehouse, lake, or lakehouse will be a good place to start.

“The majority of our costs come from BigQuery and we’ve split up those costs into different use cases and get a daily report on them,” said Stijn.
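A breakdown like the one Stijn describes can start very simply. The sketch below is a minimal illustration, not Aiven's actual setup: the `billing_rows` data, the use-case names, and the `daily_cost_by_use_case` helper are all hypothetical, and in practice the rows would come from your platform's billing export or query history.

```python
from collections import defaultdict

# Hypothetical billing rows: (date, use_case, cost_usd).
# Real rows would come from a billing export or query history table.
billing_rows = [
    ("2023-03-01", "dashboards", 40.0),
    ("2023-03-01", "dashboards", 2.5),
    ("2023-03-01", "dbt_models", 118.75),
    ("2023-03-01", "ad_hoc", 9.5),
    ("2023-03-02", "dashboards", 41.0),
    ("2023-03-02", "dbt_models", 131.25),
]


def daily_cost_by_use_case(rows):
    """Sum cost per (date, use_case) pair."""
    totals = defaultdict(float)
    for day, use_case, cost in rows:
        totals[(day, use_case)] += cost
    return dict(totals)


report = daily_cost_by_use_case(billing_rows)

# Print one line per day and use case, sorted for a stable daily report.
for (day, use_case), cost in sorted(report.items()):
    print(f"{day}  {use_case:<12} ${cost:,.2f}")
```

Even a rough grouping like this makes it obvious which use case to investigate first.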

Of course, improving visibility into costs often requires a level of discipline and rigor across the data engineering team.

“The approach we followed was first to fix the tracking,” said Tushar at BlaBlaCar. “For every asset…either a table or a report, it is to be tagged and assigned to a team. The communication of the costs are then done [by] each team [at the] stakeholder level.”

This is an excellent transition to our next best practice, which is to empower the teams closest to the workloads and make them accountable for costs.

Incentivize those closest to the costs

If the next question is, “who is responsible for cost optimization,” the answer according to our expert panel is those closest to the workloads driving those costs. These engineers and development teams will be the most knowledgeable and empowered.

Making this a regular process can also encourage these teams to start incorporating many of these best practices as they develop the pipelines and architecture rather than after the fact.

“One good approach is to make development teams responsible for their own costs,” said Tom at Tenable. “It shouldn’t be the responsibility of one person in a CCOE (cloud center of excellence) or in finance. They have no agency to make changes to improve costs.”

One effective way to incentivize these teams and create an esprit de corps can be to make it a friendly competition.

“We ran a successful cost cutter campaign where we made it a competition for all engineering teams to identify areas of improvement, implement solutions, and report on the estimated cost savings,” said Mitchell at Assurance. “It was a big shared effort and prizes were awarded to the teams that had the largest relative impact. Some teams naturally have larger services and costs so it was about percentage improvements not necessarily total gross savings, but you could make multiple categories!”
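To make the "relative impact" idea concrete, here is a small sketch of how such a leaderboard could be scored by percentage improvement rather than gross savings. The team names, the dollar figures, and the `rank_by_relative_savings` helper are hypothetical and are not taken from Assurance's campaign.

```python
def rank_by_relative_savings(teams):
    """Rank teams by percentage cost reduction, largest first.

    Each entry is (team, monthly_cost_before_usd, monthly_cost_after_usd).
    Returns tuples of (team, percent_saved, gross_saved_usd).
    """
    ranked = []
    for name, before, after in teams:
        percent_saved = round((before - after) / before * 100, 1)
        ranked.append((name, percent_saved, before - after))
    return sorted(ranked, key=lambda row: row[1], reverse=True)


# Hypothetical before/after monthly costs per team.
teams = [
    ("platform", 20000.0, 17000.0),
    ("growth", 2000.0, 1200.0),
    ("finance", 500.0, 450.0),
]

ranking = rank_by_relative_savings(teams)
for name, percent_saved, gross_saved in ranking:
    print(f"{name:<10} {percent_saved:>5.1f}%  ${gross_saved:,.0f}")
```

In this made-up data the smaller team wins on percentage even though the largest team saved the most in absolute terms, which is the reason to consider multiple prize categories.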

Make data optimization a continuous process

Just like shipping new features, data optimization is best executed as a continuous process rather than a series of one-time campaigns.

“[Cutting] overall costs of the warehouse and lake…is quite complicated to do in one shot,” said Tushar at BlaBlaCar.

Not only is it complex, but costs are dynamic and constantly changing.

“The rise of consumption based pricing makes this even more critical as the release of a new feature could potentially cause costs to rise exponentially,” said Tom at Tenable. “As the manager of my team, I check our Snowflake costs daily and will make any spike a priority in our backlog.”

Creating resource alerts can be a helpful tool in this regard. Matheus at BairesDev advised teams to “define alerts in all the services with thresholds that [make] you review the costs as some significant variations [occur].”

Stijn at Aiven has found it helpful to even set up alerts on each query.

“We’ve got alerts set up when someone queries anything that would cost us more than $1. This is quite a low threshold, but we’ve found that it doesn’t need to cost more than that. We found this to be a good feedback loop. [When this alert occurs] it’s often someone forgetting a filter on a partitioned or clustered column and they can learn quickly.”
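A per-query alert of this kind could be sketched as follows. This is an assumption-laden illustration, not Aiven's implementation: it assumes cost is proportional to bytes scanned, the `price_per_tib_usd` value is a placeholder rather than a real list price, and the query records and helper names are hypothetical. Only the $1 threshold comes from the quote above.

```python
BYTES_PER_TIB = 2**40


def estimated_cost_usd(bytes_scanned, price_per_tib_usd):
    """Estimate query cost, assuming pricing is proportional to bytes scanned."""
    return bytes_scanned / BYTES_PER_TIB * price_per_tib_usd


def queries_over_threshold(queries, price_per_tib_usd, threshold_usd=1.0):
    """Return (user, query_id, cost) for every query costing more than the threshold."""
    flagged = []
    for query in queries:
        cost = estimated_cost_usd(query["bytes_scanned"], price_per_tib_usd)
        if cost > threshold_usd:
            flagged.append((query["user"], query["query_id"], round(cost, 2)))
    return flagged


# Hypothetical query log entries.
queries = [
    {"query_id": "q1", "user": "ana", "bytes_scanned": 50 * 2**30},  # 50 GiB
    {"query_id": "q2", "user": "raj", "bytes_scanned": 2 * 2**40},   # 2 TiB
]

# 5.0 is a placeholder price; substitute your platform's actual rate.
flagged = queries_over_threshold(queries, price_per_tib_usd=5.0)
for user, query_id, cost in flagged:
    print(f"ALERT: {user} ran {query_id}, estimated cost ${cost:.2f}")
```

Routing each alert to the person who ran the query is what creates the feedback loop described above.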

He has also found it helpful to look at costs from an annual perspective.

“I found it’s very helpful to translate the daily cost of a table into how much it would cost in a year ($27/day vs $10K/year). It helps making the trade-off of whether it’s worth it to fix it,” he said.
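The arithmetic behind that comparison is simple enough to script. In the sketch below, `annualized_cost` reproduces the $27/day figure from the quote, while `first_year_net_savings`, the 50% expected reduction, and the $800 fix cost are hypothetical additions meant only to illustrate the trade-off.

```python
def annualized_cost(daily_cost_usd, days_per_year=365):
    """Translate a daily cost into a yearly one."""
    return daily_cost_usd * days_per_year


def first_year_net_savings(daily_cost_usd, expected_reduction, fix_cost_usd):
    """Yearly savings from a fix, minus the one-time cost of doing the fix.

    expected_reduction is a fraction between 0 and 1 (hypothetical estimate).
    """
    return annualized_cost(daily_cost_usd) * expected_reduction - fix_cost_usd


yearly = annualized_cost(27)
print(f"$27/day is ${yearly:,} per year")  # roughly $10K

# Hypothetical: halving the cost takes about $800 of engineering time.
net = first_year_net_savings(27, expected_reduction=0.5, fix_cost_usd=800)
print(f"Net first-year savings: ${net:,.2f}")
```

A positive net figure suggests the fix pays for itself within a year under those assumptions.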

Understanding dependencies

It’s time to revisit the earlier caveat: “once you know the problem, it’s easy to fix.” This is not only true, but a helpful illustration of the current challenge around cost optimization: root cause analysis.

Optimizing queries isn’t hard but understanding what to optimize can be difficult. There are many reasons a query could have a long runtime:

- It’s returning a lot of rows;

- It’s not optimally written (perhaps a SELECT * where a few columns would do, or there is an exploding JOIN);

- The underlying table structure isn’t ideal (partitions, indexes, etc.);

- The execution is quick but it’s queued because of other dependencies or waiting on other heavy queries to run;

- Data warehouse size (or compute resource size) isn’t optimal; or

- All of the above!

Some of these reasons will be legitimate and unavoidable, while others can be easily remedied with some re-engineering.
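The reasons listed above can be turned into a rough first-pass triage. The sketch below assumes a hypothetical query-metadata record; the field names, the thresholds, and the `likely_runtime_drivers` helper are illustrative assumptions, not any platform's actual schema. The string check for SELECT * is a crude heuristic and will miss or mislabel some queries.

```python
def likely_runtime_drivers(query, *, queue_share=0.5, row_limit=1_000_000):
    """Return a list of plausible reasons a query is slow, based on its metadata."""
    drivers = []

    # Mostly waiting rather than executing.
    total_seconds = query["queued_seconds"] + query["execution_seconds"]
    if total_seconds > 0 and query["queued_seconds"] / total_seconds >= queue_share:
        drivers.append("queued behind other work")

    # Returning a very large result set.
    if query["rows_returned"] >= row_limit:
        drivers.append("returns many rows")

    # Every partition scanned suggests a missing filter on the partition column.
    if query["partitions_total"] > 1 and query["partitions_scanned"] == query["partitions_total"]:
        drivers.append("no partition pruning")

    # Crude text check after collapsing whitespace.
    normalized_sql = " ".join(query["sql"].lower().split())
    if "select *" in normalized_sql:
        drivers.append("SELECT *")

    return drivers


# Hypothetical query record.
slow_query = {
    "sql": "SELECT *\nFROM events",
    "queued_seconds": 90,
    "execution_seconds": 30,
    "rows_returned": 10,
    "partitions_scanned": 365,
    "partitions_total": 365,
}

drivers = likely_runtime_drivers(slow_query)
print(drivers)
```

A query can match several drivers at once, which mirrors the "all of the above" case in the list.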

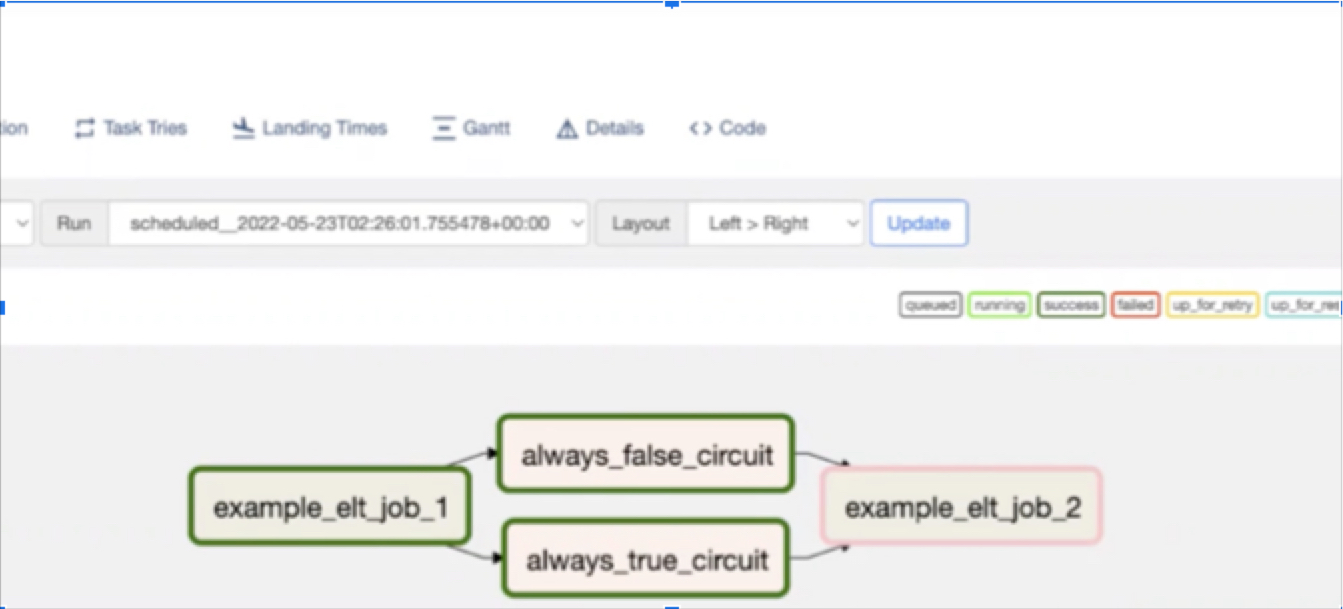

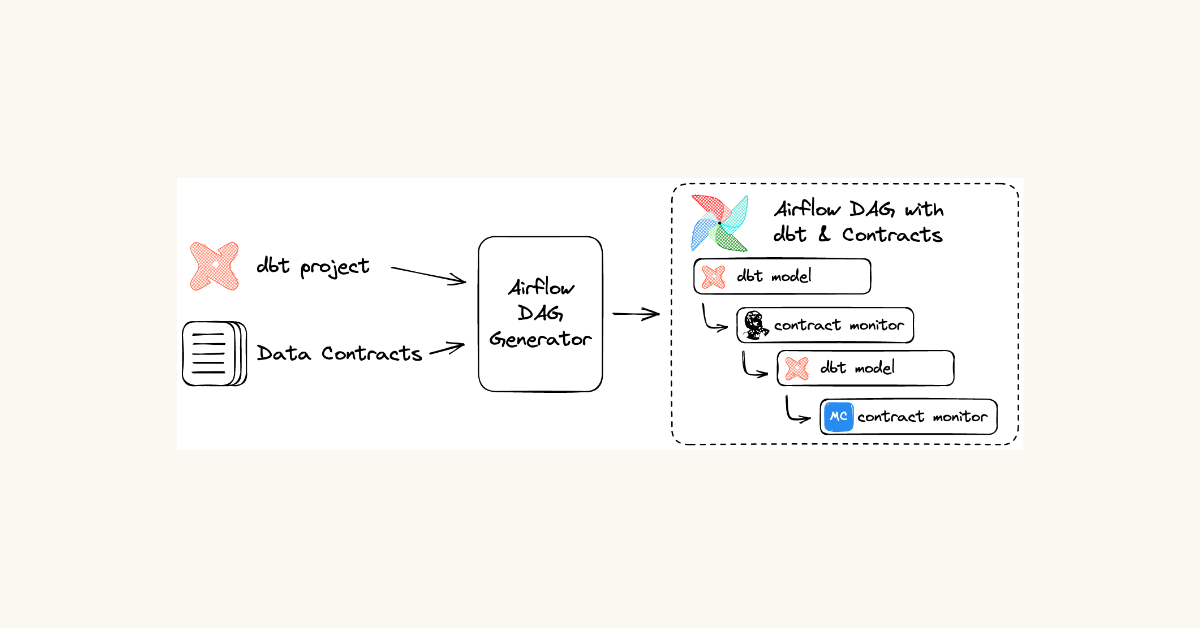

Most data platforms have excellent resource monitoring capabilities as well as the ability to identify long running or heavy queries. However, filtering and grouping queries by warehouse, schema, destination table, dbt model, or Airflow job is typically not as easy. This is where there is an opportunity for a third-party tool to provide additional value (you may want to go ahead and bookmark our announcements page).

“It’s difficult to optimize queries without having the context of their role and dependencies across the data platform,” said Barr Moses, co-founder and CEO of Monte Carlo. “Data teams need to be able to get granular and understand which queries correspond to which data assets and whether their runtime is driven by volume, wait times, compute allocation, or something else entirely.”

A penny saved is a penny earned

Data leaders are tasked with adding value to the business, and avoiding unnecessary costs absolutely falls within that purview. Optimized data platforms are performant, as are the teams that make it a habit to design and redesign for cost.

Just remember to not get carried away. Cutting too deep can create downtime. It is also potentially an opportunity cost for a team you want primarily focused on business value versus maintaining architecture.

That being said, if you aren’t thinking about data platform cost optimization today, consider adding it to your team’s scope in the near-term. As Tom points out, it signals to the business that your team is trustworthy.

“For most companies keeping a handle on costs is important… As a team lead or manager, being in control of your costs signals to the higher ups that you’re in control of things and are a safe pair of hands for further investment.”

Interested in learning how data observability and data optimization go hand-in-hand? Talk to us!

Our promise: we will show you the product.

Product demo.

Product demo.  3 Steps to AI-Ready Data

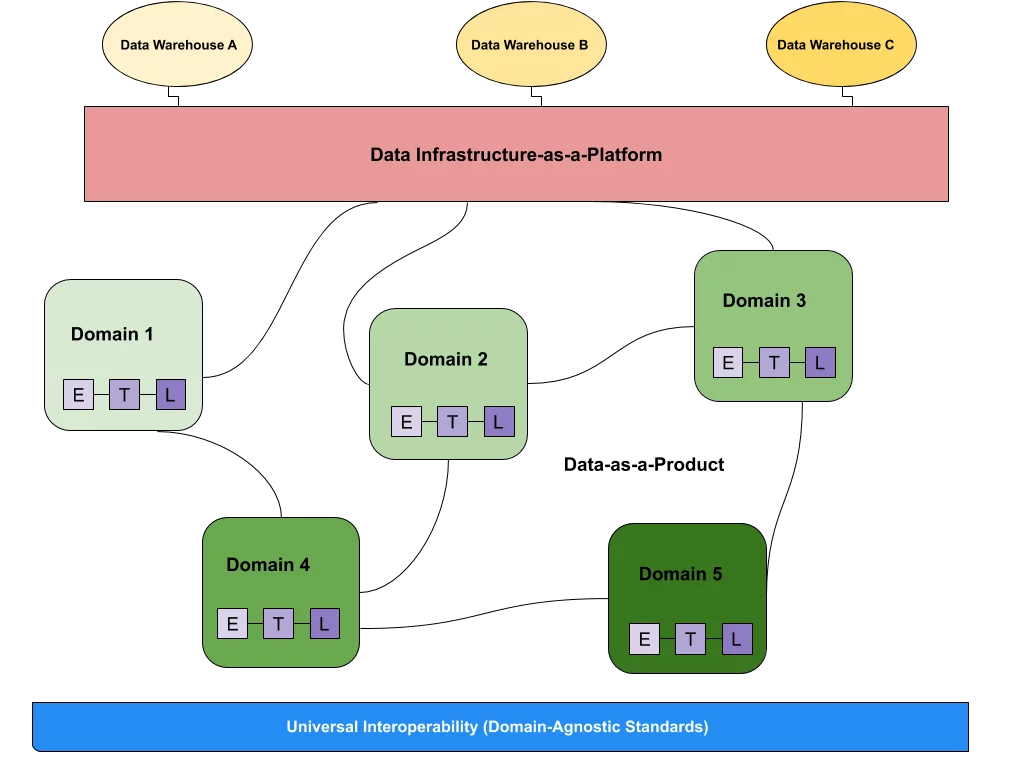

3 Steps to AI-Ready Data  What is a data mesh--and how not to mesh it up

What is a data mesh--and how not to mesh it up  The ULTIMATE Guide To Data Lineage

The ULTIMATE Guide To Data Lineage