4 Ways to Tackle Data Pipeline Optimization

Just as a watchmaker meticulously adjusts every tiny gear and spring in harmonious synchrony for flawless performance, modern data pipeline optimization requires a similar level of finesse and attention to detail.

Sure, a modern data stack can simplify or automate many tasks, but understanding your tools’ capabilities and how they can best be utilized is what really unlocks cost savings and efficiency in your data pipeline.

Learn how cost, processing speed, resilience, and data quality all contribute to effective data pipeline optimization.

Data Pipeline Optimization: Cost

The goal here is to balance robust data processing capabilities with cost control. You do that by leveraging the flexibility and scalability of your cloud environment. Here’s how to get the most bang for your buck:

- Use cloud spot instances: Identify non-critical, interruptible tasks in your pipeline, such as batch processing or ad hoc analytics jobs. Allocate these tasks to cloud spot instances, which are typically much cheaper than on-demand instances because the provider can reclaim them on short notice. This shift can yield significant savings without impacting critical operations, provided the tasks can tolerate being interrupted and restarted.

- Transition data between storage tiers: Analyze your data access patterns and migrate frequently accessed data to high-availability storage and infrequently accessed data to more cost-effective storage solutions. Implement automatic data lifecycle policies to transition data between storage tiers based on age or access patterns.

- Integrate data deduplication tools into your pipeline: These tools scan your datasets and identify and eliminate duplicate data, which saves storage space and reduces processing load. Schedule deduplication runs regularly to keep your data storage lean and efficient. If you use AWS, check out Lake Formation FindMatches, a machine learning (ML) transform that enables you to match records across different datasets as well as identify and remove duplicate records.

- Monitor resource utilization: Set up monitoring systems to track resource usage and performance. Use this data to identify underutilized resources and potential areas for cost reduction. Deploy analytics to predict future resource needs, enabling more informed and cost-effective resource allocation.

- Implement auto-scaling features to adjust resources in response to real-time demand: Configure your systems to automatically scale down or shut off during low-usage periods or non-peak hours. This ensures you’re only paying for what you use.
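To make the deduplication idea above concrete, here is a minimal sketch. It assumes records arrive as Python dictionaries and that duplicates can be found by exact match on a few normalized key fields; the function names and the `key_fields` parameter are hypothetical. It is not how FindMatches works, which uses ML-based matching to catch records that are similar but not identical.

```python
import hashlib
import json


def record_fingerprint(record: dict, key_fields: list) -> str:
    """Hash the normalized key fields so equivalent records get the same fingerprint."""
    # Normalization here is an assumption: trim whitespace and ignore case.
    normalized = [str(record.get(field, "")).strip().lower() for field in key_fields]
    payload = json.dumps(normalized, ensure_ascii=False)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def deduplicate(records: list, key_fields: list) -> list:
    """Keep the first record seen for each fingerprint, preserving input order."""
    seen = set()
    unique = []
    for record in records:
        fingerprint = record_fingerprint(record, key_fields)
        if fingerprint not in seen:
            seen.add(fingerprint)
            unique.append(record)
    return unique


customers = [
    {"email": "Ann@example.com ", "name": "Ann"},
    {"email": "ann@example.com", "name": "Ann"},
    {"email": "bob@example.com", "name": "Bob"},
]
unique_customers = deduplicate(customers, key_fields=["email"])
```

Keeping only fingerprints in memory, rather than whole records, is what lets a check like this run as a scheduled step without holding the full dataset twice.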

Data Pipeline Optimization: Processing Speed

Pipelines generally don’t start out slow, but evolving data demands and tech advancements mean they inevitably slow down. Here are some strategies to keep things firing on all cylinders:

- Parallelize data processing: Divide large data tasks into smaller, manageable chunks that can be processed concurrently. Identify parts of your data workflow that can be parallelized and adjust your pipeline configuration accordingly.

- Optimize data formats and structures: Evaluate and choose data formats and structures that are inherently faster to process. For instance, columnar storage formats like Parquet or ORC are often more efficient for analytics workloads. Convert your data into these formats and restructure your databases to capitalize on their speedy processing characteristics.

- Use in-memory processing technologies: These technologies store data in RAM, reducing the time taken for disk I/O operations. Identify parts of your pipeline where in-memory processing can be applied, such as real-time analytics, to boost processing speed.

- Regularly review and optimize your database queries: Use query optimization techniques like indexing, partitioning, and choosing the right join types. This reduces the execution time of queries, leading to faster data retrieval and processing.

- Use stream processing for real-time data: Stream processing frameworks handle data as it’s generated rather than waiting for a scheduled batch, providing quicker insights. Identify use cases in your pipeline, like fraud detection or live dashboards, where stream processing can be advantageous.
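As a rough illustration of the parallelization strategy in the list above, the sketch below splits a dataset into chunks and processes them concurrently with Python's standard `concurrent.futures` module. The `transform_chunk` function, the chunk size, and the worker count are placeholder assumptions, not recommendations.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(items: list, size: int):
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def transform_chunk(chunk: list) -> list:
    """Hypothetical per-chunk work; replace with a real transformation."""
    return [value * 2 for value in chunk]


def process_in_parallel(items: list, chunk_size: int = 1000, max_workers: int = 4) -> list:
    """Process chunks concurrently and return results in the original order."""
    chunks = list(chunked(items, chunk_size))
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Executor.map returns results in input order, even if chunks finish out of order.
        chunk_results = pool.map(transform_chunk, chunks)
        return [row for result in chunk_results for row in result]


doubled = process_in_parallel(list(range(10)), chunk_size=3, max_workers=2)
```

Threads suit I/O-bound steps such as API calls or file reads. For CPU-bound transformations in Python, `ProcessPoolExecutor` from the same module is usually the better fit, since threads share one interpreter lock.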

Data Pipeline Optimization: Resilience

As data volumes and processing demands grow, your data pipeline needs to stay robust and reliable. Here are some strategies to help improve the resilience of your pipelines:

- Design for fault tolerance and redundancy: Use multiple instances of critical components and distribute them across different physical locations (availability zones). This minimizes the impact of component failures and ensures continuous operation.

- Conduct regular stress tests and resilience drills: Simulate different scenarios, including high data loads and component failures, to ensure your pipeline can scale and recover smoothly under various conditions. For example, AWS offers Fault Injection Simulator, a chaos engineering service that injects simulated real-world failures so you can validate that your application recovers within its defined resilience targets.

- Establish comprehensive data backup and disaster recovery plans: Regularly back up your data and configurations, and test recovery processes to ensure you can quickly restore operations in the event of a system failure or data loss.
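The redundancy idea from the list above can be sketched in a few lines. This example assumes each redundant instance is reachable through a callable, and tries them in order until one succeeds; the replica names, the `call_with_failover` helper, and the `AllReplicasFailed` exception are all hypothetical. A production setup would typically rely on a load balancer or managed failover rather than application code like this.

```python
class AllReplicasFailed(Exception):
    """Raised when every redundant instance has failed for a request."""


def call_with_failover(replicas: list, request: str):
    """Try each (name, handler) pair in order and return the first success."""
    errors = []
    for name, handler in replicas:
        try:
            return name, handler(request)
        except Exception as exc:  # broad on purpose: any failure triggers failover
            errors.append(f"{name}: {exc}")
    raise AllReplicasFailed("; ".join(errors))


def unavailable_zone(request: str) -> str:
    """Simulates an instance in a zone that is currently down."""
    raise ConnectionError("zone unavailable")


def healthy_zone(request: str) -> str:
    """Simulates a working instance in another zone."""
    return f"processed {request}"


replicas = [("zone-a", unavailable_zone), ("zone-b", healthy_zone)]
served_by, result = call_with_failover(replicas, "batch-42")
```

Collecting the error from each failed replica, instead of discarding it, keeps the evidence you need later when testing recovery processes or reviewing an incident.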

Data Pipeline Optimization: Data Quality

No matter how fast, efficient and resilient your data pipeline becomes, if the data flowing through it isn’t high-quality and reliable, stakeholders won’t rely upon it and your whole handling of the data pipeline will be called into question.

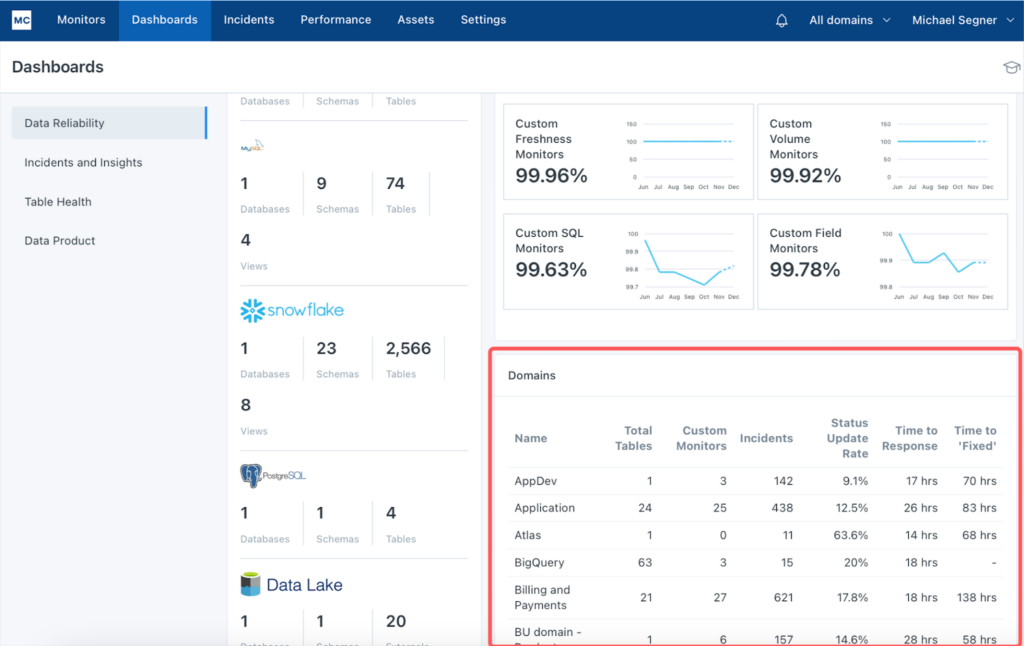

What you need is a data observability tool to detect, triage, and resolve data quality issues before those issues become the talk of the company. That’s where Monte Carlo comes in, offering end-to-end visibility into your data pipelines with features like automated anomaly detection, lineage tracking, and error root cause analysis.

Don’t let data anomalies, schema changes, and pipeline failures get you down. Talk to us today to see how Monte Carlo can proactively manage data quality issues before they escalate.
