Data Quality Anomaly Detection: Everything You Need to Know

I bet you’re tired of hearing it at this point: garbage in, garbage out. It’s the mantra for data teams, and it underlines the importance of data quality anomaly detection for any organization. The quality of the input affects the quality of the output – and in order for data teams to produce high-quality data products, they need high-quality data from the very start.

But, in reality, a data pipeline is more nuanced than just the quality of your internal or external data sources. Data engineers also need to take into consideration the quality of their systems and code, because anomalies can occur across the pipeline – and no matter where they occur, it’s essential to be able to detect and resolve them fast.

What do data anomalies look like across a data pipeline? Well…

- Data anomalies in your data sources might look like a bunch of NULL values from the very start. That’s “garbage” going in.

- Anomalies in your system might look like some sort of break in your pipeline, like a failed Airflow job (we’ve all been there).

- And, anomalies in your transformation code might occur as your data is being shaped for consumption. This could be a schema change, a bad JOIN… the list goes on.

These types of data anomalies commonly occur across pipelines. That’s why fast data anomaly detection is so essential. And, the most effective anomaly detection processes correlate these instances of bad data with the root cause across each of these three buckets – your data, systems, and code.

Let’s dive into the fundamentals of anomaly detection, best practices for establishing an anomaly detection framework, and key techniques for maintaining high-quality data.

What is Data Quality Anomaly Detection?

Simply put, data quality can be defined as a dataset’s suitability for a defined purpose. Anomaly detection refers to the identification of events or observations that deviate from the norm.

Data quality can be measured in six dimensions:

- Data accuracy: Does the data represent agreed-upon sources or events?

- Data completeness: What is the percentage of data populated vs. the potential for complete fulfillment?

- Data integrity: Is the data maintaining its consistency, accuracy, and trustworthiness throughout its lifecycle?

- Data validity: Is the data correct and relevant?

- Data timeliness: What is the lag between the actual event time and the time the event was captured in the system to be used?

- Data uniqueness: How many times is an object or event recorded in the dataset?
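Several of these dimensions reduce to simple ratios or differences. As a minimal sketch, assuming rows arrive as plain Python dictionaries, the snippet below measures completeness, uniqueness, and timeliness. The `orders` records and field names (`order_id`, `email`, `event_at`, `loaded_at`) are hypothetical, chosen only for illustration.

```python
from datetime import datetime, timedelta


def completeness(rows, field):
    """Share of rows where `field` is populated (not None)."""
    if not rows:
        return 0.0
    populated = sum(1 for row in rows if row.get(field) is not None)
    return populated / len(rows)


def duplicate_count(rows, key):
    """Number of rows whose `key` value repeats an earlier row."""
    seen = set()
    duplicates = 0
    for row in rows:
        value = row.get(key)
        if value in seen:
            duplicates += 1
        else:
            seen.add(value)
    return duplicates


def max_lag(rows, event_field, captured_field):
    """Largest gap between when an event happened and when it was captured."""
    return max(row[captured_field] - row[event_field] for row in rows)


# Hypothetical sample records, for illustration only.
t0 = datetime(2024, 1, 1, 12, 0)
orders = [
    {"order_id": 1, "email": "a@example.com", "event_at": t0, "loaded_at": t0 + timedelta(minutes=5)},
    {"order_id": 2, "email": None, "event_at": t0, "loaded_at": t0 + timedelta(minutes=45)},
    {"order_id": 2, "email": "b@example.com", "event_at": t0, "loaded_at": t0 + timedelta(minutes=10)},
    {"order_id": 3, "email": "c@example.com", "event_at": t0, "loaded_at": t0 + timedelta(minutes=7)},
]

email_completeness = completeness(orders, "email")      # 3 of 4 rows populated
dup_orders = duplicate_count(orders, "order_id")        # order_id 2 appears twice
worst_lag = max_lag(orders, "event_at", "loaded_at")    # slowest capture
```

Accuracy, integrity, and validity usually depend on business rules or a trusted reference source, so they are harder to reduce to a generic function like these.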

There are also multiple types of data anomalies that can occur to impact these dimensions of data quality, including data outliers, errors, and inconsistencies. The 8 most common anomalies that occur are:

- NULL values

- Schema change

- Volume issues

- Distribution errors

- Duplicate data

- Relational issues

- Typing errors

- Late data

The ability to detect these issues fast enables teams to decrease their time to resolution, foster trust, and bolster their data governance strategy for less data downtime and better data reliability.
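To make one of these concrete, a schema change can be caught by comparing the columns a table is expected to have against what is actually observed. The sketch below assumes schemas are available as simple column-to-type mappings; the `schema_diff` helper and the column names are hypothetical, not part of any particular tool.

```python
def schema_diff(expected, observed):
    """Compare two {column: type} mappings and report what changed."""
    expected_cols = set(expected)
    observed_cols = set(observed)
    shared = expected_cols & observed_cols
    return {
        "added": sorted(observed_cols - expected_cols),
        "removed": sorted(expected_cols - observed_cols),
        "retyped": sorted(col for col in shared if expected[col] != observed[col]),
    }


# Hypothetical schemas, for illustration only.
expected_schema = {"id": "integer", "amount": "numeric", "created_at": "timestamp"}
observed_schema = {"id": "string", "amount": "numeric", "source": "string"}

changes = schema_diff(expected_schema, observed_schema)
has_schema_change = any(changes.values())
```

Here `changes` reports `source` as added, `created_at` as removed, and `id` as retyped, which covers both the schema change and typing error cases from the list above.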

Key Techniques for Data Quality Anomaly Detection

A number of techniques, algorithms, and frameworks exist and are used (and developed) by industry giants like Meta, Google, Uber, and others. For a technical deep dive, head here.

Up until recently, anomaly detection was considered a nice-to-have—not a need-to-have—for many data teams. Now, as data systems become increasingly complex and companies empower employees across functions to use data, it’s imperative that teams take both proactive and reactive approaches to solving for data quality.

There are several key methods for data quality anomaly detection, including statistical methods and machine learning approaches. Statistical methods include Z-Score, IQR, and others.

Statistical methods of data quality anomaly detection:

- Z-Score: A Z-score is measured in terms of standard deviations from the mean. For example, if a Z-score is 0, then the data point’s score is identical to the mean score. If a Z-score is 1.0, then the value is one standard deviation from the mean. A positive Z-score means the score is above the mean, and a negative Z-score is below the mean.

- IQR: IQR, or interquartile range, gives you the range spanned by the second and third quartiles, that is, the middle half of a dataset. It can be calculated with the formula: IQR = Q3 - Q1 (Q3 is the 75th percentile and Q1 is the 25th percentile). A common convention flags values that fall more than 1.5 × IQR below Q1 or above Q3 as outliers.
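Both statistical methods can be sketched in a few lines of standard-library Python. The example below scores a new observation against a history of known-good values, which is an assumption about how you would apply them; the row counts are made up, and the thresholds (3 standard deviations, 1.5 × IQR) are common conventions rather than fixed rules.

```python
import statistics


def z_score(value, history):
    """Standard deviations between `value` and the mean of `history`."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)  # sample standard deviation
    if stdev == 0:
        if value == mean:
            return 0.0
        return float("inf") if value > mean else float("-inf")
    return (value - mean) / stdev


def iqr_fences(history, k=1.5):
    """Lower and upper bounds: Q1 - k * IQR and Q3 + k * IQR."""
    q1, _, q3 = statistics.quantiles(history, n=4)
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def is_anomalous(value, history, z_threshold=3.0, k=1.5):
    """Flag `value` if either method considers it an outlier."""
    lower, upper = iqr_fences(history, k)
    return abs(z_score(value, history)) > z_threshold or not (lower <= value <= upper)


# Hypothetical daily row counts for a table, for illustration only.
daily_row_counts = [1000, 1020, 980, 1010, 990, 1005, 995, 1015, 985]

normal_day = is_anomalous(1012, daily_row_counts)   # within both bounds
spike_day = is_anomalous(5000, daily_row_counts)    # far outside both bounds
```

Scoring new values against a clean history, rather than including them in the mean and standard deviation, avoids a known weakness of the Z-score: a single extreme value in a small sample inflates the standard deviation enough to hide itself.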

Machine learning approaches to data quality anomaly detection might involve supervised vs. unsupervised learning. Supervised learning uses labeled training data, while an unsupervised machine learning algorithm does not. Unsupervised machine learning algorithms independently learn the data’s structure without guidance.

Some data teams may also employ hybrid models that combine statistical methods of data quality anomaly detection with machine learning techniques. All of these methods enable data teams to understand and define what high-quality data should look like for their organization, so when a deviation occurs, they can catch it quickly.

Best Practices in Data Quality Anomaly Detection

It is essential to establish a data quality management framework to set benchmarks your team can actively improve against.

There are several ways to establish an effective data quality management framework, but we typically recommend focusing on the following 6 steps:

- Baseline your current data quality levels

- Rally and align the organization on the importance of data quality

- Implement broad-level data quality monitoring

- Optimize your incident resolution process

- Create custom data quality monitors that suit your critical data products

- Consider incident prevention

Data observability solutions, like Monte Carlo, make it easy to implement a data quality management process by automating the process of anomaly detection and correlating it to the root cause.

Other data quality tools can also help improve data quality with the foundation that data observability provides, including:

- Data governance: Defines data standards and processes

- Data testing: Covers important known data issues

- Data discovery: Makes data more accessible and useful

- Data contracts: Helps keep data consistent

But data observability is the best data quality tool for programmatically building and scaling your data quality into your data, systems, and code.

Challenges in Data Quality Anomaly Detection

Even with the right data quality management framework in place, data quality anomaly detection isn’t always a walk in the park. There’s no one single culprit when it comes to data quality issues.

Certain datasets or processes can bring their own challenges, such as dealing with high-dimensional data, balancing sensitivity and specificity when it comes to highly regulated data, and adapting to evolving data patterns over time.

Monte Carlo monitors for four key categories, and then allows for custom monitors as well, to help prevent data quality anomalies of all sizes. These monitors include:

- Pipeline observability monitors: These monitors learn the normal patterns of updates, size changes, and growth for any given table, and alert if those patterns are violated. Ideal for detecting breakages and stoppages in the flow of data.

- Metrics monitors: These monitors learn the statistical profile of data within the fields of a table, and alert if those patterns are violated. Ideal for detecting breakages, outliers, and “unknown unknowns” in data.

- Validations monitors: Users can choose from templates or write their own SQL to check specific qualities of the data. Ideal for row-by-row validations, business-specific logic, or comparing metrics across multiple sources.

- Performance observability monitors: Users can track runtime of queries and be alerted to outliers and queries that aren’t scaling well. Ideal for ensuring that pipelines remain reliable and that costs aren’t spiraling out of control.
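To illustrate the general idea of learning a table's normal update pattern, here is a deliberately simple freshness check. It is a sketch under stated assumptions, not a description of how Monte Carlo's monitors are implemented: the `is_stale` helper, the median-gap heuristic, and the `tolerance` multiplier are all hypothetical choices.

```python
import statistics
from datetime import datetime, timedelta


def is_stale(update_times, now, tolerance=3.0):
    """True when the time since the last update exceeds `tolerance`
    times the median gap between past updates."""
    if len(update_times) < 3:
        return False  # too little history to learn a pattern
    ordered = sorted(update_times)
    gaps = [
        (later - earlier).total_seconds()
        for earlier, later in zip(ordered, ordered[1:])
    ]
    typical_gap = statistics.median(gaps)
    since_last = (now - ordered[-1]).total_seconds()
    return since_last > tolerance * typical_gap


# Hypothetical table that normally updates once an hour.
start = datetime(2024, 1, 1, 0, 0)
updates = [start + timedelta(hours=h) for h in range(6)]
last_update = updates[-1]

slightly_late = is_stale(updates, last_update + timedelta(minutes=90))
stopped = is_stale(updates, last_update + timedelta(hours=5))
```

A 90-minute gap stays within three times the typical hourly cadence, while a five-hour gap does not. Using the median keeps one unusually long historical gap from stretching the baseline.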

How to Solve Data Anomalies

It’s important to understand how to prioritize data quality issues – and this means your data teams need to understand your data pipelines and how the data products they build are being used.

When anomalies occur in several places across pipelines, it can be hard to know where to start – but knowing the data lineage and prioritizing effectively are the best ways to reduce data downtime on data assets with higher criticality.

Consider asking your team the following questions to inform your triage process:

- What tables were impacted?

- How many downstream users were impacted?

- How critical is the pipeline or table to a given stakeholder?

- When will the impacted tables be needed next?
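The first two questions are essentially a walk over data lineage. As a rough sketch, assuming lineage is available as a mapping from each asset to its direct downstream dependents, a breadth-first traversal lists everything affected by a broken table. The asset names and `criticality` scores below are hypothetical.

```python
from collections import deque


def downstream_assets(lineage, start):
    """All assets reachable downstream of `start`, in breadth-first order."""
    seen = {start}
    queue = deque([start])
    impacted = []
    while queue:
        node = queue.popleft()
        for child in lineage.get(node, []):
            if child not in seen:
                seen.add(child)
                impacted.append(child)
                queue.append(child)
    return impacted


# Hypothetical lineage graph: asset -> direct downstream dependents.
lineage = {
    "raw.events": ["staging.events"],
    "staging.events": ["marts.campaign_results", "ml.bid_features"],
    "marts.campaign_results": ["dashboard.client_report"],
    "ml.bid_features": [],
}

# Hypothetical criticality scores agreed with stakeholders (higher = more critical).
criticality = {
    "staging.events": 1,
    "marts.campaign_results": 2,
    "ml.bid_features": 3,
    "dashboard.client_report": 3,
}

impacted = downstream_assets(lineage, "raw.events")
triage_order = sorted(impacted, key=lambda asset: -criticality.get(asset, 0))
```

Sorting the impacted assets by criticality gives a first-pass triage order; how soon each table is needed next could be added as a second sort key.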

Case study: Kargo Prevents Six-Figure Data Quality Issue with Anomaly Detection

Let’s consider a data quality anomaly in action. Data is crucial to omnichannel advertising company Kargo’s business model. They need to report the results of the campaigns they execute for their clients with a high degree of accuracy, consistency, and reliability. They also leverage data to inform the ML models that help direct their bids to acquire advertising inventory across platforms. This type of digital auction and transaction data operates at an extreme scale.

“Media auctions – a process that consists of advertisers bidding on ads inventory to be displayed by specific users for a particular site – can consist of billions of transactions an hour. Sampling 1% of that traffic is a terabyte today, so we can’t ingest all the data we transact on,” said Andy Owens, VP of Analytics at Kargo. At this scale and velocity, data quality issues can be costly. One such issue in 2021 was the impetus for the team to adopt Monte Carlo to improve their data quality anomaly detection.

“One of our main pipelines failed because an external partner didn’t feed us the right information to process the data. That was a three day issue that became a $500,000 problem. We’ve been growing at double digits every year since then so if that incident happened today [the impact] would be much larger,” explained Owens.

Now, Monte Carlo’s data observability platform scales end-to-end across Kargo’s modern data stack to automatically apply data freshness, volume, and schema anomaly alerts without the need for any threshold setting. The Kargo team also sets custom monitors and rules relevant to their specific use case.

“[For the custom monitors] the production apps QA engineer would look at [the data] and create a rule to say we expect the ID to be this many digits long or the cardinality to be this based on the product,” said Owens. “Our machine learning models are built on small cardinality of data and not a lot of columns. If there is a deviation the model will become biased and make poor decisions so the match rate must be tight with the data streams coming in. Pointing Monte Carlo [to monitor that] wasn’t difficult.”

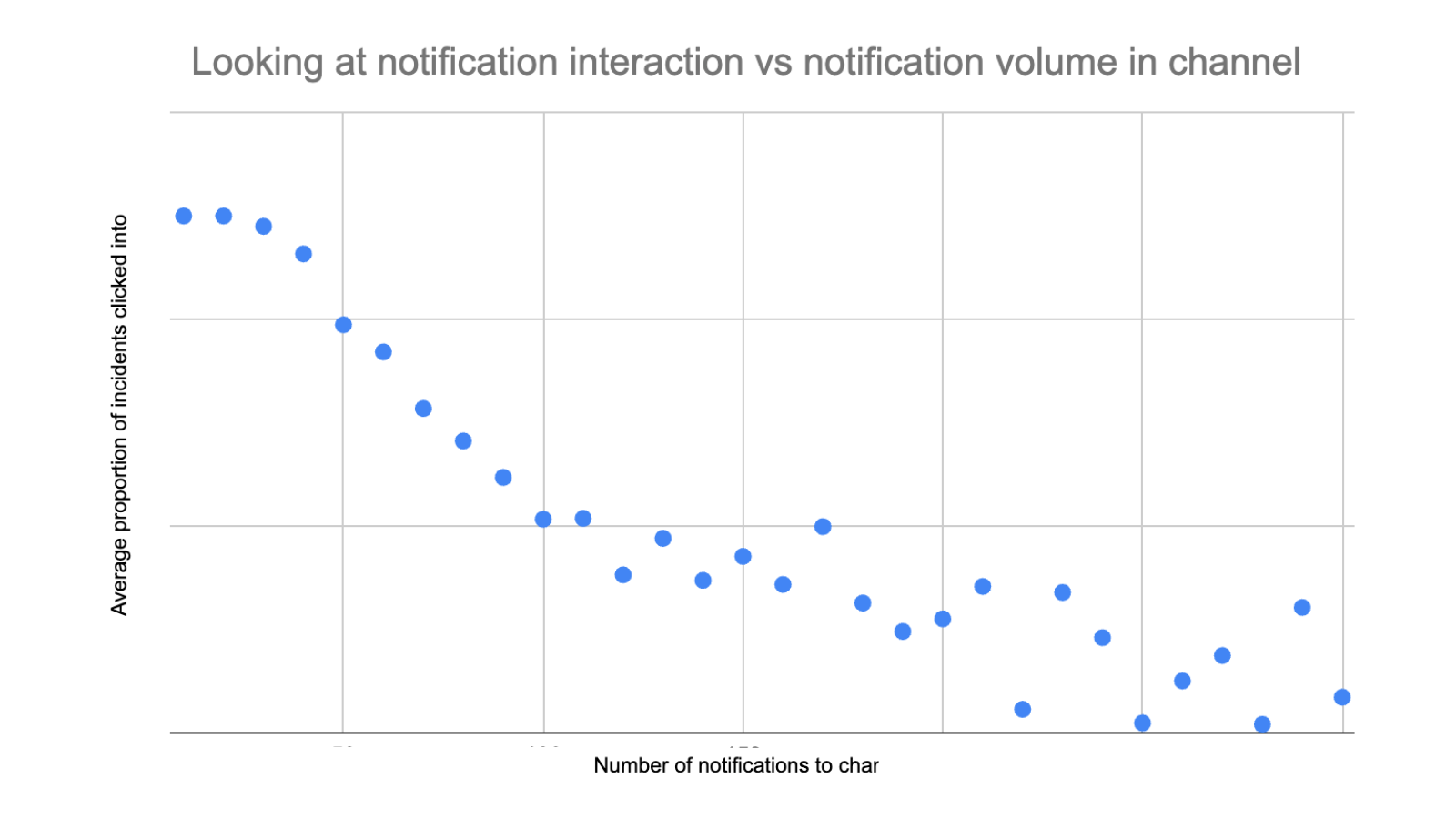

Once the issue is detected, an alert goes to a Slack channel with the relevant developers and business users. Their team can then leverage Monte Carlo root cause analysis functionality – including data lineage, query change detection, correlation analysis, and more – to pinpoint and quickly fix any issues.

“In 2021, we were flying blind. Our developers didn’t know where to investigate and data engineering teams were trying to humpty dumpty fix dashboards. That was a huge waste of time,” said Owens. “Data quality can be death by 1,000 cuts, but with Monte Carlo we have meaningfully increased our reliability levels in a way that has a real impact on the business.”

You can read Kargo’s full story here.

Identify Data Quality Anomalies with Monte Carlo

One thing is clear: no matter where data anomalies occur in your pipelines, data quality anomaly detection is a must-have for high-functioning data teams.

High-quality data is the foundation of sound decision-making for an enterprise business. With a data observability tool like Monte Carlo, data teams can detect, triage, and resolve data anomalies quicker to reduce data downtime and increase data trust. Request a demo from the team to see it in action.

Frequently Asked Questions

What is an example of anomaly detection?

An example of anomaly detection is identifying a sudden spike in the number of NULL values in a dataset that typically has very few NULL values. This deviation from the norm indicates a potential issue with data quality that needs to be investigated and resolved.

What are the types of data anomalies?

The types of data anomalies include NULL values, schema changes, volume issues, distribution errors, duplicate data, relational issues, typing errors, and late data. These anomalies can impact the accuracy, consistency, and reliability of the data.

What are the best metrics for anomaly detection?

The best metrics for anomaly detection include Z-Score and IQR (Interquartile Range). Z-Score measures how many standard deviations a data point is from the mean, helping identify outliers. IQR gives the range of the middle half of a dataset, which helps detect anomalies in the distribution of data. Additionally, data quality can be measured in dimensions such as accuracy, completeness, integrity, validity, timeliness, and uniqueness.

How much data is needed for anomaly detection?

The amount of data needed for anomaly detection varies based on the specific use case and the method being used. However, sufficient historical data is generally required to establish a baseline for normal behavior. This baseline allows for the identification of deviations and anomalies. For statistical methods like Z-Score and IQR, a representative sample size is needed. Machine learning approaches may require more data to train models effectively, especially for supervised learning, which uses labeled training data.
