5 Data Quality Tools—What They Are & When You Need Them

It’s rare to find a data leader, engineer, scientist, or analyst — or business stakeholder — who doesn’t believe data quality is important. When your company relies on data to power products or decision-making processes, everyone agrees that data needs to be accurate and trustworthy.

So why does the term data quality tools cause so much confusion?

We think it’s because software vendors and well-meaning thought leaders want to align their solutions and frameworks with something that everyone believes in. But when these loud voices select or redefine terms to expand the market of their products or ideas, data professionals end up conflating tools that support data quality with tools that actually resolve data quality. And there’s a huge difference.

One thing is certain: you need both technologies and processes to ensure the quality of your data. But which ones? And when?

Let’s dig in and explore the landscape of the top so-called “data quality tools” — what they are, what they’re not, and whether they’re the right first step towards more reliable data.

Table of Contents

Data governance: important for data quality, but not a data quality tool

What data governance is

Data governance is the decision-making process organizations use to determine how to keep their data available, usable, and secure. When done right, a good data governance program will help organizations answer questions around how data is collected and processed, who should have permission to access it, where it’s stored, and when it’s retired.

Governance helps companies set important standards and achieve higher levels of data security, data accessibility, and data quality. But…

What data governance isn’t

…Data governance isn’t a data quality tool, because it’s not a tool at all. Full stop.

Governance is people, processes, and principles. According to best practices, data governance includes a cross-functional governance team, a steering committee, and data stewards. Those individuals develop shared processes and organizational principles that help teams “decide how to decide” about data.

Data quality and data governance are deeply intertwined. And certain technologies like data discovery tools and data observability (more on those in a moment) can help organizations execute their governance strategy to improve data quality — but governance itself is not a tool.

When to implement data governance

Data governance is now considered a must-have for most organizations because it helps improve compliance with GDPR and other measures, safeguard proprietary data against bad actors, support a distributed data architecture, and maintain data quality. However, it also takes a lot of stakeholder alignment and organizational investment to make data governance happen — it’s not an easy lift.

But if your industry or security requirements are strict, data governance might be a helpful lens to start viewing your MVP approach to data quality. It won’t include everything you need to confront the challenges of bad data, but it will be time well spent in the long run.

Data testing: a tool to measure data quality, but not at scale

What data testing is

Data testing is the process of anticipating specific, known problems in a dataset, and writing logic to proactively detect if those issues occur. Data engineers can use essential data quality tests like NULL value tests, volume tests, freshness checks, and numeric distribution tests, to look for common quality issues like data that’s missing, stale, or falls outside the expected range.

Data testing is an important safeguard against specific, known data quality issues. With testing, you can discover those problems before they enter your production data pipelines and prevent downstream impacts. This helps improve your data health, but…

What data testing isn’t

…Data testing isn’t a scalable or comprehensive solution for data quality.

By its nature, testing involves looking for known unknowns — data quality issues you can predict, like the ones described above. But there’s a second type of data quality issue: unknown unknowns. These are all the ways and places data can break that you can’t predict or test against. Schema changes, API errors, unintended ETL changes, and data drift are just a few examples of unknown unknowns that data testing won’t detect. And these issues become increasingly important as your data scales and your pipelines become more complex.

Additionally, data testing requires data engineers to maintain both specialized domain knowledge and insight into how the data might break. And while data quality testing can signal when a problem has occurred, it doesn’t help facilitate incident resolution — you’ll still need to do a lot of legwork investigating the root cause, fixing the issue, and preventing repeat offenses.

When to implement data quality tests

When you only have a handful of tables to manage, and your current data quality pain is relatively small, then data tests can go a long way towards meeting your data quality needs. And even as you grow, specific data tests will always be useful to ensure your most valuable data assets receive multiple layers of data quality protection.

But as your datasets and pipelines grow, you’ll need a scalable data quality solution that delivers comprehensive coverage across the entire lifecycle — and detects both known unknowns and unknown unknowns to prevent data downtime.

Top data quality testing solutions

- Dbt, a SQL-based command-line tool with robust testing features

- Great Expectations, an open-source data testing framework that includes a library of common unit tests

Data discovery: useful tools, but don’t reflect data quality

What data discovery is

Data discovery tooling — historically known as “data catalogs” — help teams navigate and identify data sources within an organization. These tools are specialized software solutions that use metadata to visualize a picture of your data, the relationships between different assets, and the processing that occurs as data moves throughout your systems.

Data discovery tools help illuminate the dark corners of your data repositories, and enable data consumers and practitioners to access and use data more effectively. That makes data discovery tooling vital for data democratization and data governance, but…

What data discovery is not

…Data discovery solutions don’t tell you anything about data quality.

These tools help domain teams and stakeholders understand and self-serve the right data — but if the data itself has quality issues, they will only exacerbate the problem by making bad data more accessible. Data discovery tooling doesn’t include any information about whether or not the data is reliable, accurate, or trustworthy.

When to implement data discovery

If you don’t have confidence in your data quality, serving it up to more people across your organization probably isn’t the best move.

But once your data is reliable and trustworthy, data discovery solutions can play a big role in unlocking the value of that data — especially in a decentralized data architecture.

Top data discovery solutions

Data teams have no shortage of options when it comes to data discovery tooling. A few of the top performers include:

- Atlan, which supports different user personas while facilitating data governance, compliance, and natural language search

- Alation, which uses AI to determine and surface useful information

- Collibra, which offers a no-code policy builder and automated data lineage

Data contracts: helpful with data quality, but not exactly tools, and don’t detect or resolve issues

What data contracts are

Data contracts are a way of using process and tooling to enforce certain constraints that keep data consistent and usable for downstream users — and keep data producers and consumers on the same page.

For example, the team at Whatnot uses data contracts to help software engineers working across every application consistently interface with logging systems, use common schemas, and meet pre-specified metadata requirements. Data contracts like these keep teams aligned around vital areas like data extraction, ingestion, ownership, and access or anonymization requirements. Ideally, they help simplify decision-making and prevent unintended impacts on data quality, like schema changes that break downstream data pipelines or products.

Especially at later growth stages or in complex data organizations, data contracts can be incredibly useful to prevent data quality headaches as data sprawls. They’re great for setting standards and defining what constitutes bad data and how to allocate responsibility when an incident takes place. But…

What data contracts aren’t

…Data contracts won’t be any help in identifying when data breaks — and it will, no matter how ironclad your contracts.

Even the most robust set of data contracts can’t prevent all instances of broken data. That means teams need other data quality tools to detect incidents and facilitate swift resolution.

When to implement data contracts

Large organizations can benefit greatly from data contracts, but will need to implement contracts in tandem with a data quality monitoring and resolution solution to ensure their overall data health. And smaller companies with more centralized data control likely won’t realize the full value of a data contract — though it may make sense down the road as their data quality needs scale.

Top data contracts solutions

Due to their incredibly customized nature, right now, most teams build data contracts in-house using YAML or JSON formats.

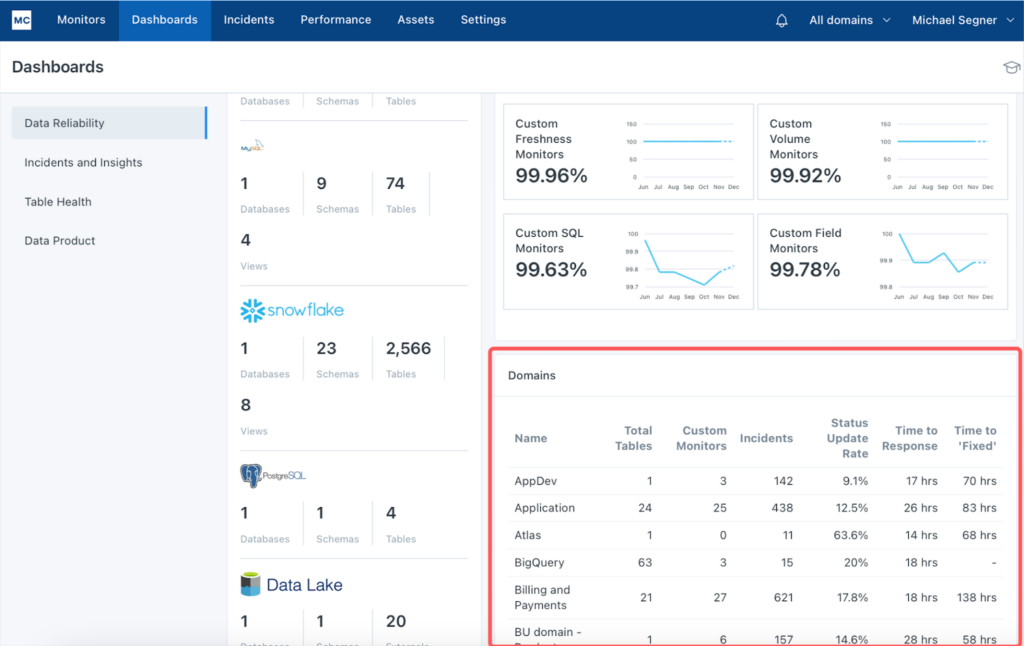

Data observability: the total package for data quality tools

What data observability is

Data observability solutions use automation to deliver comprehensive testing, monitoring and alerting, and lineage to facilitate swift resolution — in a single platform. When it comes to data quality tools, observability is the total package.

And observability addresses the shortcomings of traditional data quality practices in three key ways:

- Lower compute cost: Unlike traditional data quality monitoring, data observability reduces compute by ingesting and monitoring metadata, as opposed to querying the data directly.

- Faster time-to-value: Data observability automates implementation with machine learning-powered monitors that provide instant coverage out of the box, based on the historical patterns in your data — along with custom insights and simplified coding tools to make user-defined testing easier.

- Improved incident resolution: In addition to tagging and alerting, data observability expedites the root-cause process with automated, column-level lineage that lets teams see at a glance what’s been impacted, who needs to know, and where to go to fix it.

Ultimately, data observability is the only data quality tool that identifies issues directly and programmatically — and gives data teams the tools they need to resolve incidents quickly.

When to implement data observability

For most organizations, relying on data observability as your primary data quality tool will be the right path forward. But for very small organizations with limited resources and minimal data quality pain, you’ll probably want to build an MVP data quality practice around manual testing and monitoring until your data starts to scale.

But once you approach that threshold of needing an automated data quality solution, be proactive. Don’t wait until data quality gets out of hand!

Build a foundation of data quality

The other data quality-adjacent tools and frameworks we described above all have their place. Governance defines standards and processes. Testing covers important known issues. Discovery makes data more accessible and useful. And contracts help keep data consistent.

But without data observability, you won’t have the ability to programmatically build and scale your data quality into your data, systems, and code. These other processes and technologies need that solid foundation in order to realize their full value.

Ready to see how data observability can help improve your organization’s data quality? Check out the Monte Carlo platform in action, or contact our team to learn more.

Our promise: we will show you the product.

Frequently Asked Questions

What is a data quality tool?

A data quality tool is a technology or software solution designed to ensure the accuracy, reliability, and trustworthiness of data. Examples include data testing tools, data discovery tools, data contracts, and data observability solutions.

How do I choose a data quality tool?

Choose a data quality tool by assessing your organization’s specific needs, the scale of your data, and the types of data quality issues you need to address. Consider the benefits and limitations of data governance, data testing, data discovery, data contracts, and data observability. Data observability is often recommended for comprehensive and scalable data quality management.

Product demo.

Product demo.  What is data observability?

What is data observability?  What is a data mesh--and how not to mesh it up

What is a data mesh--and how not to mesh it up  The ULTIMATE Guide To Data Lineage

The ULTIMATE Guide To Data Lineage