The Complete Guide to Data Uniqueness

Remember the Healthcare.gov launch fiasco? Millions of Americans tried to sign up for health insurance—and couldn’t. The site crashed under heavy demand and even when people did manage to enroll, the system sometimes created multiple insurance plans for the same person.

Behind this chaos was an often-overlooked but critical aspect of data management: data uniqueness.

Think of data uniqueness as the “no doubles” rule – each piece of data should exist exactly once in your system. When you have duplicates floating around, it’s like having multiple versions of the same document – you never know which one is the one you should trust. Plus, getting rid of duplicates makes your systems run faster since there’s less redundant data to process.

All that Healthcare.gov’s developers had to do was let people pick their plan, allow only one plan per social security number, and you’re done. But by not enforcing data uniqueness they brought down a multi-million dollar government website. Now, how can you prevent something as basic as duplicate data from bringing down your systems?

Table of Contents

How to Measure Data Uniqueness

As one of the six data quality dimensions, data uniqueness is something you should be constantly measuring. There are two main KPIs for measuring it:

- Duplicate Count – The most obvious measure is to count the number of duplicate records in key fields (like customer IDs or email addresses). The lower the number, the better your data uniqueness.

- Uniqueness Ratio – To better track your data uniqueness over time, it is better to calculate the ratio of unique records to total records. For example, if your uniqueness ratio was 96% last month, and dropped to 93% this month, then you can clearly tell that more of your data has been taken over by duplicates.

But how do we actually do this in our database? And how can we try and prevent duplicates in the first place?

How to Enforce Data Uniqueness in PostgreSQL

If all of your important data is in a database (please tell me it’s in a database!), making sure that all data will be unique is straightforward.

Just add the UNIQUE keyword.Imagine we have a table called users, where we want to make sure each user’s email is unique. To enforce this, we can use the UNIQUE constraint like so:

CREATE TABLE users (

id SERIAL PRIMARY KEY,

firstname VARCHAR(50),

lastname VARCHAR(50),

email VARCHAR(100) UNIQUE

);That way when we start inserting users, and someone comes around with the same email:

-- Inserting the first user

INSERT INTO users (username, email) VALUES ('john_doe', 'john@example.com');

-- Inserting the second user with the same email as the first

INSERT INTO users (username, email) VALUES ('johnny', 'john@example.com');We will get this error message:

ERROR: duplicate key value violates unique constraint "users_email_key"

DETAIL: Key (email)=(john@example.com) already exists.How to Check for Duplicate Data

But what if we think there is already some sort of duplicate data in our database?To find any exact duplicates, we can use a query with GROUP BY and HAVING:

SELECT email, COUNT(*)

FROM users

GROUP BY email

HAVING COUNT(*) > 1;Where the output could look like this:

| count | |

|---|---|

| john@example.com | 2 |

| jane@example.com | 3 |

Meaning that the email john@example.com was found twice while jane@example.com was found three times.

Finding near duplicates is also possible. For example, if you want to consider “Avenue” and “Ave.” as duplicates. The best way to do this is with a trigram search, which we can enable with the pg_trgm extension:

CREATE EXTENSION pg_trgm;Then we can use this query to find any other addresses in the users table that are similar to the address 123 Avenue Street:

SELECT id, address, similarity(address, '123 Avenue Street') AS sim_score

FROM addresses

WHERE similarity(address, '123 Avenue Street') > 0.6

ORDER BY sim_score DESC;[similarity(address, ‘123 Avenue Street’)] is the key here. It calculates a similarity score for the address field based on 123 Avenue Street. Then the most similar addresses (those with a score higher than 0.6) are selected.

So the results might look something like this:

| id | address | sim_score |

|---|---|---|

| 1 | 123 Avenue Street | 1.0 |

| 2 | 123 Ave Street | 0.923 |

| 3 | 123 Avenue St | 0.762 |

| 4 | 123 Avenue St. | 0.675 |

And once we verify that these duplicates are not wanted, we can then pass them to a DELETE statement, cleaning up our database and improving our data uniqueness.

But there are even better ways to make sure that data is unique not only in your database, but also across your entire data infrastructure.

How Can You Make Sure Your Data is Unique?

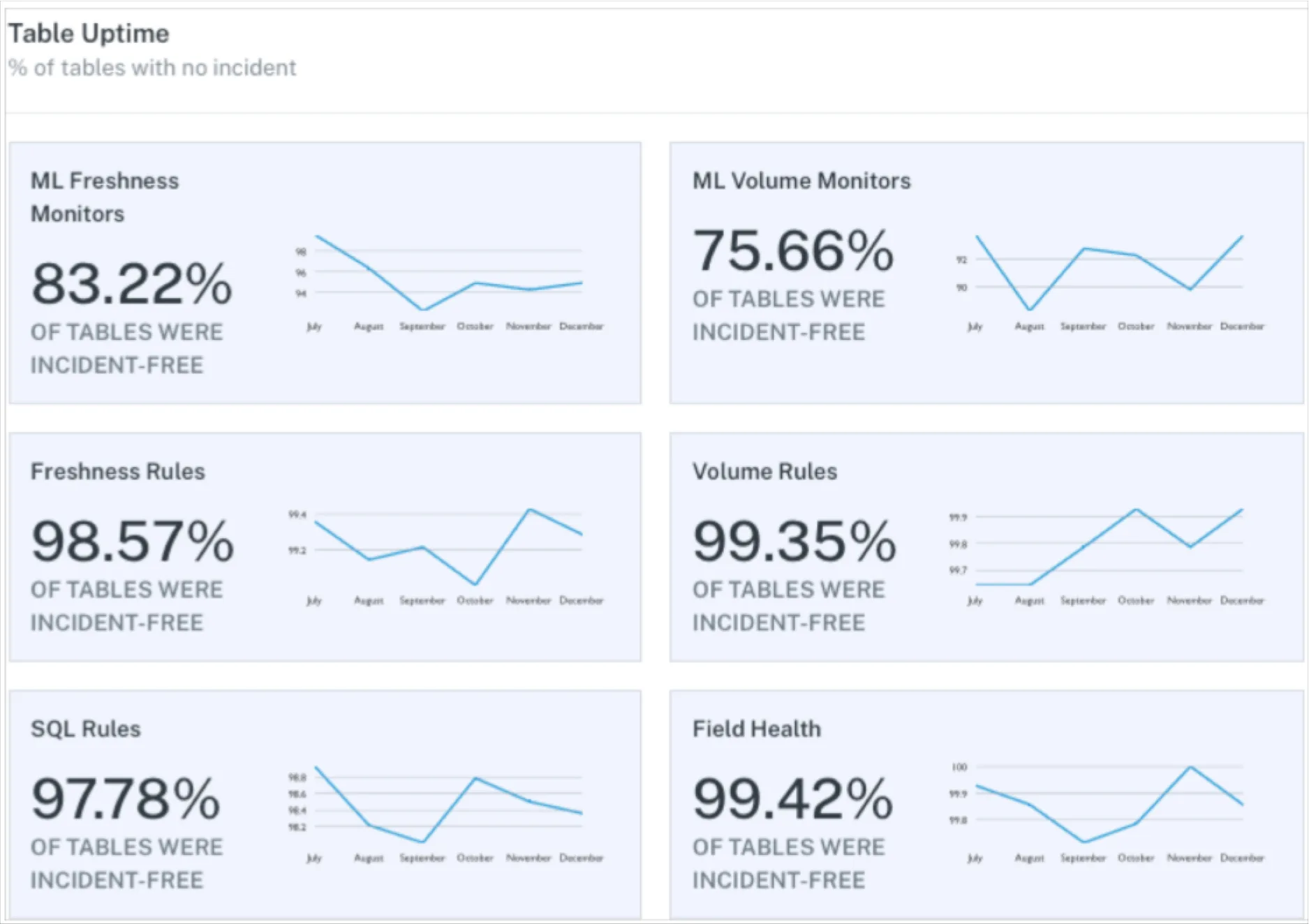

Even with all of the constraints and checks, duplicate data can still sneak its way into your systems. You should always use a data observability platform like Monte Carlo to check your data for duplicates in real-time. That way you can deal with them quickly before they become a bigger problem.

| METRIC | DESCRIPTION | COLUMN Types | MAC NAME |

|---|---|---|---|

| Unique (%) | Percentage of unique values across all rows | All | UNIQUE_RATE |

| Unique (count) | Count of unique values | All | UNIQUE_COUNT |

| Duplicate (count) | Count of duplicate values, calculated as the difference between the total number of rows with non-null values and total number of distinct non-null values | All | DUPLICATE_COUNT |

A data observability platform like Monte Carlo also lets you:

- Track your data lineage, automatically detecting any other anomalies hiding in your system.

- Monitor the quality of datasets to see how you improve over time.

- Find the root cause for data issues quickly by analyzing trends and patterns.

Enter your email below to learn more about how Monte Carlo can help clean up your duplicate data.

Our promise: we will show you the product.

Frequently Asked Questions

What is uniqueness in data?

Uniqueness in data ensures that each record exists only once in a dataset or system, eliminating duplicates. It helps maintain data integrity, improves system efficiency, and fosters trust in data quality.

How to ensure data uniqueness?

You can ensure data uniqueness by implementing constraints like the UNIQUE keyword in databases, running queries to detect and handle duplicates, using tools like trigram search for near duplicates, and leveraging data observability platforms to monitor and resolve duplicate data in real-time.

Product demo.

Product demo.  3 Steps to AI-Ready Data

3 Steps to AI-Ready Data  What is a data mesh--and how not to mesh it up

What is a data mesh--and how not to mesh it up  The ULTIMATE Guide To Data Lineage

The ULTIMATE Guide To Data Lineage