Generative AI and the Future of Data Engineering

Generative AI is taking the world by storm – here’s what it means for data engineering and why data observability is critical for this groundbreaking technology to succeed.

Maybe you’ve noticed the world has dumped the internet, mobile, social, cloud and even crypto in favor of an obsession with generative AI.

But is there more to generative AI than a fancy demo on Twitter? And how will it impact data?

Let’s assess.

How generative AI will disrupt data

With the advent of generative AI, large language models became much more useful to the vast majority of humans.

Need a drawing of a dinosaur riding a unicycle for your three-year-old’s birthday party? Done. How about a draft of an email to employees about your company’s new work-from-home policy? Easy as pie.

It’s inevitable that generative AI will disrupt data, too. After speaking with hundreds of data leaders across companies from Fortune 500s to startups, we came up with a few predictions:

Access to data will become much easier – and more ubiquitous.

Chat-like interfaces will allow users to ask questions about data in natural language. People who are not proficient in SQL and business intelligence will no longer need to ask an analyst or analytics engineer to create a dashboard for them. Simultaneously, those who are proficient will be able to answer their own questions and build data products more quickly and efficiently.

This will not displace SQL and business intelligence (or data professionals, for that matter), but it will lower the bar for data access and open it up to more stakeholders across more use cases. As a result, data will become more ubiquitous and more useful to organizations, with the opportunity to drive greater impact.

Simultaneously, data engineers will become more productive.

In the long term, bots may eat us (just kidding – mostly), but for the foreseeable future, generative AI won’t be able to replace data engineers, just make their lives easier – and that’s great. Check out what GitHub Copilot does if you need more evidence. While generative AI will relieve data professionals of some of their more ad hoc work, it will also give data people AI-assisted tools to more easily build, maintain, and optimize data pipelines. Generative AI models are already great at creating SQL and Python code, debugging it, and optimizing it, and they will only get better.

These enhancements may be baked into current staples of your data stack, or they may arrive as entirely new solutions engineered by a soon-to-be-launched seed-stage startup. Either way, the outcome will be more data pipelines and more data products to be consumed by end users.

Still, like any change, these advancements won’t be without their hurdles. Greater data access and greater productivity increase both the criticality of data and its complexity, making data harder to govern and trust. I don’t predict that bots shaped like Looker dashboards and Tableau reports will run amok, but I do foresee a world in which pipelines turn into figurative Frankenstein’s monsters and business users rely on data with little insight into where it came from or guidance on what to use. In this brave new world, data governance and reliability will become much more important.

Software engineering teams have long been practicing DevOps and automating their tooling to improve developer workflows, increase productivity, and build more useful products – all while ensuring the reliability of complex systems. Similarly, we are going to have to step up our game in the data space and become more operationally disciplined than ever before. Data observability will play a similar role for data teams, helping them manage the reliability of data – and data products – at scale, and it will only become more critical and powerful.

Building, tuning and leveraging LLMs

Last month, Datadog announced that they are integrating with ChatGPT to better manage the performance and reliability of OpenAI APIs by tracking usage patterns, cost and performance.

Monitoring the OpenAI API is massive, but what happens when data teams start using LLMs as part of their data processing pipelines? What happens when teams use their own datasets to fine-tune LLMs or even create them from scratch? Needless to say, broken pipelines and faulty data will severely impact the quality and reliability of the end product.
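To make that risk concrete, here is a minimal sketch of a quality gate a team might run on its own dataset before fine-tuning. Everything in it is an assumption for illustration: the `check_training_records` helper, the `prompt`/`completion` field names, and the 1% missing-value threshold are hypothetical and do not come from any specific vendor or library.

```python
from dataclasses import dataclass


@dataclass
class GateResult:
    passed: bool
    reasons: list[str]


def check_training_records(records, required_fields, max_null_rate=0.01):
    """Hypothetical gate: decide whether records look safe to fine-tune on.

    Assumes each record is a flat dict with hashable values (e.g. strings).
    """
    if not records:
        return GateResult(False, ["dataset is empty"])

    reasons = []

    # Missing-value check: flag fields that are empty too often.
    for field in required_fields:
        missing = sum(1 for r in records if r.get(field) in (None, ""))
        rate = missing / len(records)
        if rate > max_null_rate:
            reasons.append(f"{field}: {rate:.0%} of values missing")

    # Exact duplicates can over-weight a handful of examples during training.
    unique = {tuple(sorted(r.items())) for r in records}
    if len(unique) < len(records):
        reasons.append(f"{len(records) - len(unique)} duplicate records")

    return GateResult(not reasons, reasons)
```

A pipeline could call this right before the training step and refuse to continue when `passed` is false, logging `reasons` for whoever owns the upstream data.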

During Snowflake’s Q1 2023 earnings call, Frank Slootman, CEO of Snowflake, argued that “generative AI is powered by data. That’s how models train and become progressively more interesting and relevant… You cannot just indiscriminately let these [LLMs] loose on data that people don’t understand in terms of its quality and its definition and its lineage.”

We’ve already seen the implications of unreliable model training before the advent of LLMs. Just last year, Equifax, the global credit giant, shared that an ML model trained on bad data caused them to send lenders incorrect credit scores for millions of consumers. And not long before that, Unity Technologies reported a revenue loss of $110M due to bad ads data fueling its targeting algorithms.

According to Slootman (and likely execs at Equifax and Unity now, too), having AI simply isn’t enough to succeed with it – you need to manage its reliability, too. Not just that, but teams need an automated, scalable, end-to-end, and comprehensive approach to managing the detection, resolution, and ultimately, prevention of bad models powered by bad data.

Data observability will play a key role in bringing LLMs to production and making them reliable enough for companies and individuals to adopt in real-world use cases.

Data observability will make generative AI better – and vice versa

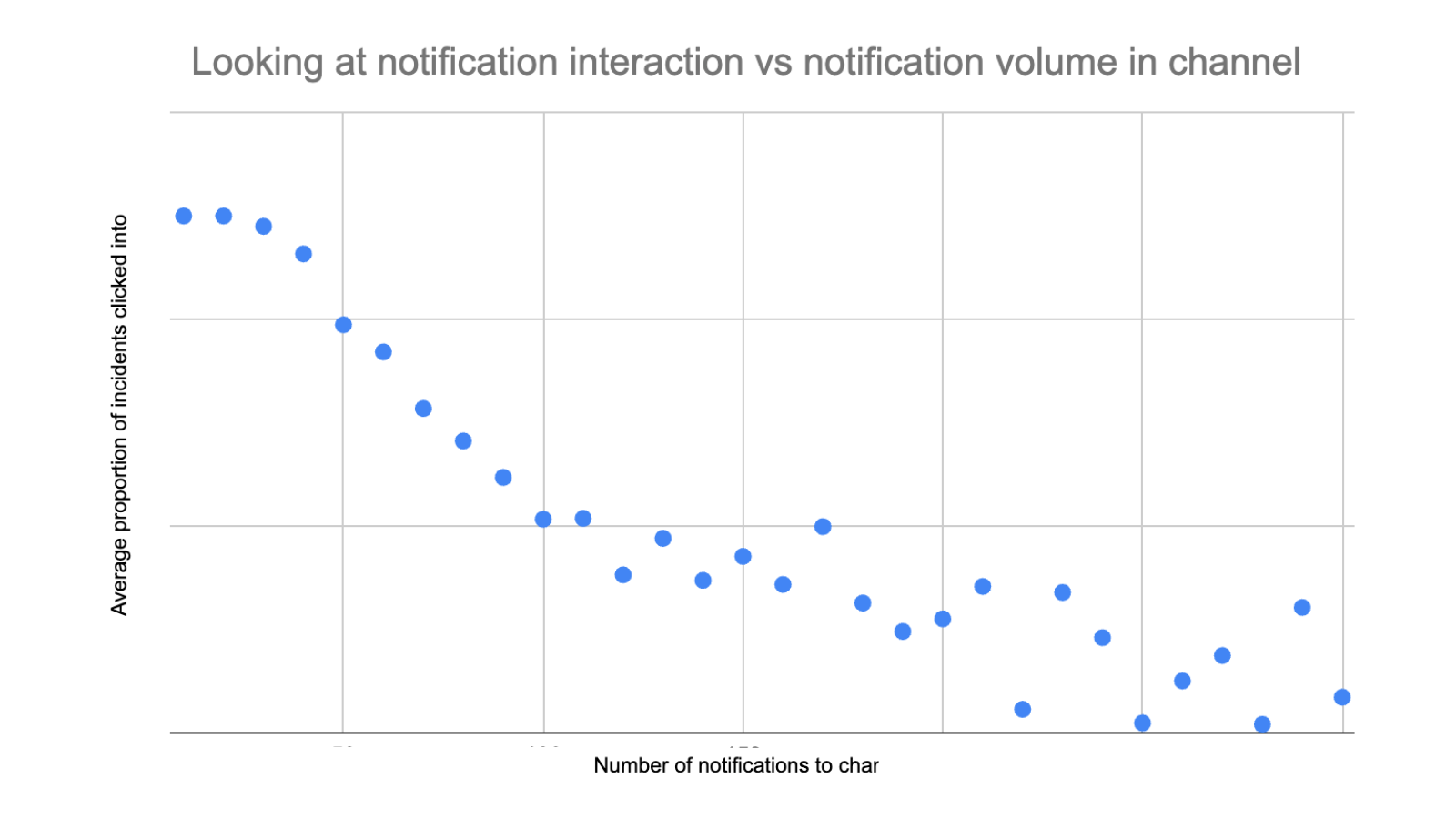

Data observability gives teams critical insights into the health of their data at each stage in the pipeline, automatically monitoring data and letting you know when systems break. Data observability also surfaces rich context with field-level lineage, logs, correlations and other insights that enable rapid triage, incident resolution, and effective communication with stakeholders impacted by data reliability issues – all critical for both trustworthy analytics and AI products.
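As a rough illustration of what "automatically monitoring data" can look like, the sketch below implements two of the simplest checks: freshness (has the table been updated recently?) and volume (is today's row count unusual?). The function names, the 24-hour window, and the z-score threshold are assumptions made for this example; a real observability platform would typically learn such thresholds rather than hard-code them.

```python
from datetime import datetime, timedelta, timezone
from statistics import mean, stdev


def is_stale(last_loaded_at, now, max_age=timedelta(hours=24)):
    """Freshness check: True when the table was not updated within max_age."""
    return now - last_loaded_at > max_age


def volume_anomaly(history, today, z_threshold=3.0):
    """Volume check: True when today's row count is far from the recent norm."""
    if len(history) < 2:
        return False  # not enough history to estimate a baseline
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return today != mu
    return abs(today - mu) / sigma > z_threshold


if __name__ == "__main__":
    now = datetime(2023, 6, 1, 12, 0, tzinfo=timezone.utc)
    last_load = datetime(2023, 5, 30, 12, 0, tzinfo=timezone.utc)  # 48 hours ago
    print(is_stale(last_load, now))  # True
    print(volume_anomaly([1000, 1020, 980, 1010, 990], today=120))  # True
```

Checks like these only tell you that something broke. The lineage and log context described above is what tells you where and why.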

Simultaneously, data observability workflows often involve correlating vast amounts of information and creating complex queries and configurations. These workflows lend themselves very well to the power of generative AI, and we have already identified several dozen opportunities to simplify, streamline and accelerate data observability using LLMs.

At Monte Carlo, we are hard at work making these a reality and helping our users get their work done faster. Not only do we see data observability as critical to the success of generative AI, but we’re dedicated to building the only solution that’s generative AI-first, complete with generative AI-enabled features.

In fact, we’re already integrating with OpenAI’s API to offer users troubleshooting advice when their SQL queries fail, which speeds up the creation and deployment of data monitoring rules. And in the coming months, we plan to extend our use of AI to help our users work more efficiently when monitoring their data environments and resolving data incidents.
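To give a feel for the general pattern, the sketch below shows one way a failed SQL query could be turned into a troubleshooting prompt for an LLM. It is not Monte Carlo's implementation: the `run_or_explain` helper and the prompt wording are hypothetical, SQLite stands in for a real warehouse, and the actual call to a model API is deliberately left out.

```python
import sqlite3


def run_or_explain(conn, sql):
    """Run sql; on failure, return a prompt an LLM could use to suggest a fix.

    Returns (rows, None) on success or (None, prompt) on failure.
    """
    try:
        return conn.execute(sql).fetchall(), None
    except sqlite3.Error as exc:
        # Gather table definitions so the model can see valid column names.
        tables = conn.execute(
            "SELECT name, sql FROM sqlite_master WHERE type = 'table'"
        ).fetchall()
        schema = "\n".join(ddl for _, ddl in tables)
        prompt = (
            "The following SQL query failed.\n"
            f"Query:\n{sql}\n\n"
            f"Error:\n{exc}\n\n"
            f"Schema:\n{schema}\n\n"
            "Explain the likely cause and suggest a corrected query."
        )
        return None, prompt


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
    rows, prompt = run_or_explain(conn, "SELECT amout FROM orders")  # typo on purpose
    print(prompt)
```

Including the error message and the schema alongside the query is the key design choice here: without them, a model can only guess at what went wrong.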

Data observability is critical to the future success of generative AI, and Monte Carlo is charting the path forward. Will you join us?

Learn how Monte Carlo and data observability are making generative AI reliable and successful at scale. Request a demo today.
