How Accolade Builds Trust in Data Decisions by Leveraging a Data Observability Framework

Our healthcare system is often fragmented and inefficient–but it doesn’t have to be. Accolade provides personalized care delivery, navigation, and advocacy services to create a more caring experience that saves our members’ time.

We close these healthcare gaps by using an approach that is personal, value based, and data driven. The last part is where our data infrastructure and engineering team comes in. We help stitch together the disconnected healthcare data that is foundational to great, personalized patient experiences.

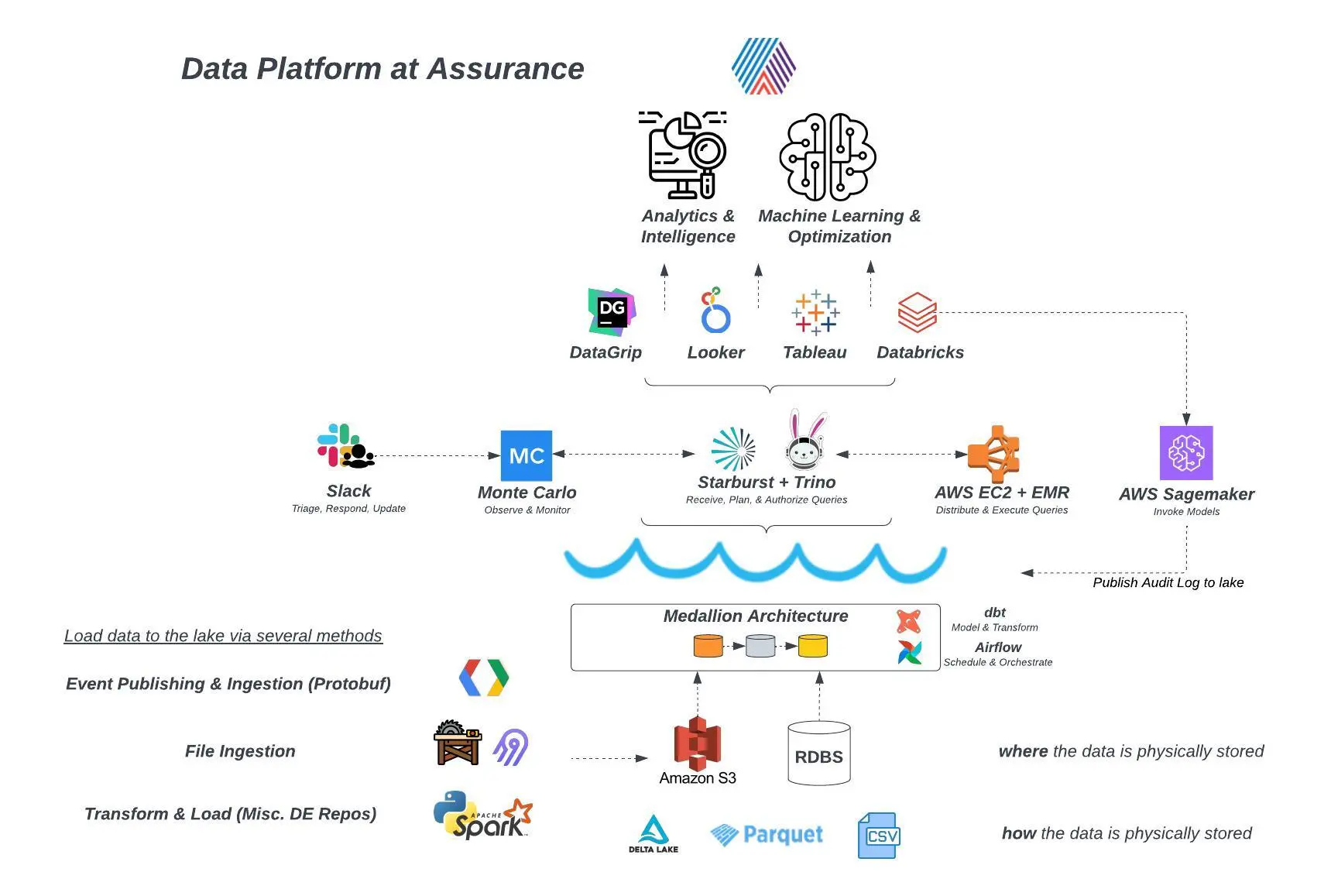

Powering all of this is a modern data stack is a platform built in AWS featuring S3, Kinesis Firehose, SNS, SQS, EMR, AWS Batch, Step Functions, and Redshift. Our environment ingests data from hundreds of data sources and from a sophisticated micro-service based architecture.

Just like our healthcare system, modern data pipelines consist of a series of complex, interdependent connections. When these connections break or are delayed, it causes disruption that ripples downstream, ultimately impacting the consumer or members of our platform.

What we needed was a solution to provide full visibility into systems so our data team could proactively detect and resolve these hiccups before they negatively impacted data quality and delivery for our customers. What we needed was data observability and Monte Carlo.

Table of Contents

Challenge: High standards for internal and external data sources

As a public company in the healthcare sector, there is a high bar for data quality at Accolade. Data quality can be an overloaded term, but we’ve invested considerable time and resources in building an understanding of how our internal and external data is leveraged throughout our entire platform. Our philosophy is that trust in our data is paramount. Data Quality is not a nice to have or an afterthought, it’s something that needs to be embedded in the heart of your data organization. We don’t take this tenet lightly because we understand the ramifications of using incorrect data to drive business decisions.

Internally, Accolade’s robust micro-service IT infrastructure supports unparalleled customer service, but frequent application/service changes can disrupt our data pipelines downstream. In most organizations a centralized data engineering team has less resources than a full micro-service organization, which means we need to find a way to scale our data platform architecture and provide the tools necessary to support proactive alerting.

When supporting customers and our members, Accolade leverages many data sources including demographic information, medical, pharmacy, and utilization management (UM) claims, and more. Ensuring all data arrives on time and volumes meet expectations is essential to ensuring trust. If issues do occur, we need to know as quickly as possible, so our team can proactively notify the provider or the customer before their care is consequently delayed.

Solution: Healthy pipelines, better outcomes

Monte Carlo has not only made our data quality processes more efficient and built trust internally – it is contributing to the customer experience. Here’s how.

ML-driven incident detection

Monte Carlo’s reliability monitors proactively notify us via Slack or Pager Duty when there are data freshness, volume, or schema anomalies. Without having to write a single test or set a manual threshold, we are alerted when the data has changed, it isn’t arriving on time, or when data volumes breach expectations.

Another important feature is the ability to create custom monitors on our data tables. For example, the monitor below alerted us to an instance where a third-party vendor accidentally sent millions of records instead of what should have only been a few hundred.

Monitors as code

All our custom data quality monitors are built via YAML config files and stored in our GitHub repository. As a new monitor gets approved via a pull request, the monitors are automatically deployed to Monte Carlo via our automated CI/CD process and cannot be changed directly in the UI.

Smart triaging and accelerated incident resolution

I can understand the impact of the incident, know exactly which reports were impacted, and prioritize it accordingly. Our internal data consumers can also see the current health status of the dataset through the integration with our data catalog in Atlan.

With the automated lineage generated from Monte Carlo, I can also trace the incident upstream to determine the source of this incident and even the owner of that source to expedite troubleshooting.

We estimate our team has saved around 40 hours a month by having data observability across just our financial domain alone.

A Favorable Diagnosis

Monte Carlo’s automated monitors have given us the scale and coverage we need to build trust with our internal and external data consumers, but we can also set custom, granular alerts to ensure service levels are being upheld.

Monte Carlo has made our data team more efficient and now operational stakeholders feel more confident using data to make decisions. We can proactively reach out to our customers to show them we’re driving efficiency for them, or proactively let them know that one of their providers isn’t sending data in a fresh or consistent manner, and it’s causing a discrepancy downstream. With Monte Carlo, we can track incidents at the customer level to track these highly important feeds.

As we continue to build our data platform, we are thinking of ways to create a live understanding of our data quality with a microservice health dashboard, giving our operational stakeholders a more holistic and transparent view of the data platform.

To learn more about how Monte Carlo can save your team 40 hours a week, speak to the team. Learn more about Monte Carlo’s integration with Atlan here.

Our promise: we will show you the product.

Product demo.

Product demo.  3 Steps to AI-Ready Data

3 Steps to AI-Ready Data  What is a data mesh--and how not to mesh it up

What is a data mesh--and how not to mesh it up  The ULTIMATE Guide To Data Lineage

The ULTIMATE Guide To Data Lineage