Supporting Global Expansion and Critical Data Products: How PrimaryBid Ensures Data Reliability at Scale

London-based PrimaryBid is on a mission: creating greater fairness, inclusion, and retail access to capital market transactions. Their fintech platform helps companies connect retail investors to capital market transactions like IPOs, follow-on fundraisings, and debt raises at the same time and price as institutional investors.

That requires operating in a regulated industry and ensuring real-time, secure access to sensitive financial transaction data. PrimaryBid’s team has delivered some of the largest retail IPOs in history, and they’ve been part of over 350 transactions in the UK and EU.

But things are about to get a lot more complicated. The company is preparing for a global expansion, moving beyond Europe to the US and the Middle East. They plan to massively increase the scale of their operations and continue delivering more external data products to their customers, which means significantly higher stakes around maintaining accurate, reliable data.

So we sat down with four of the company’s data leaders (Andy Turner, Director Data & AI; Ian Harris, Director of Data Engineering; Jonny Dungay, Analytics & BI Lead; and Rick Wang, Staff Data Engineer) to learn how their team is preparing for the future of data quality at PrimaryBid.


PrimaryBid’s new normal: external data products

Initially, PrimaryBid’s data team focused on internal empowerment — giving teams across the organization the data and analytics they needed to keep the business running as efficiently as possible. But over the last few years, the company has expanded its scope to provide data products to its external partners and end users.

That means providing CEOs, CFOs, and other key stakeholders with live dashboards to monitor real-time data around transactions, such as how many people are requesting shares for an IPO. PrimaryBid also builds proprietary data products that help organizations flexibly allocate shares to retail investors in real time.

The data team takes this responsibility very seriously. “Often the founders of a company have spent years growing their business through several fundraising rounds, and then the IPO — a culmination of years and years of hard work — might only be a six-day process,” says Andy.

An IPO isn’t a recurring event for any business; it’s a one-time, highly important milestone in a company’s lifecycle. And companies rely on PrimaryBid to give them a live view of how that transaction is performing.

“It’s absolutely crucial that we deliver a high quality experience, every time,” says Andy.

This is a different reality from their previous business model, in which PrimaryBid teams ran and operated deals internally. Accurate, reliable information mattered then too. “When you share things externally, the stakes are a lot higher,” says Andy. “It’s a pivot from doing this ourselves to offering it as a service to other businesses.”

To ensure they could meet these new demands and standards, PrimaryBid undertook a major initiative towards the end of 2022: rebuilding their data stack.

Why PrimaryBid rebuilt their data stack – and evolved beyond data quality testing and monitoring

In PrimaryBid’s early days, the focus was on growth. That meant managing data in a spreadsheet, then spinning up a database in AWS. But as the business began its pivot to global scale with external data products, things needed to change: the organization had decided that data would be a first-class citizen in how it ran the business.

As the data team began to prepare for its next chapter, they knew that ensuring their data stack delivered accurate, fresh, reliable data was the top priority.

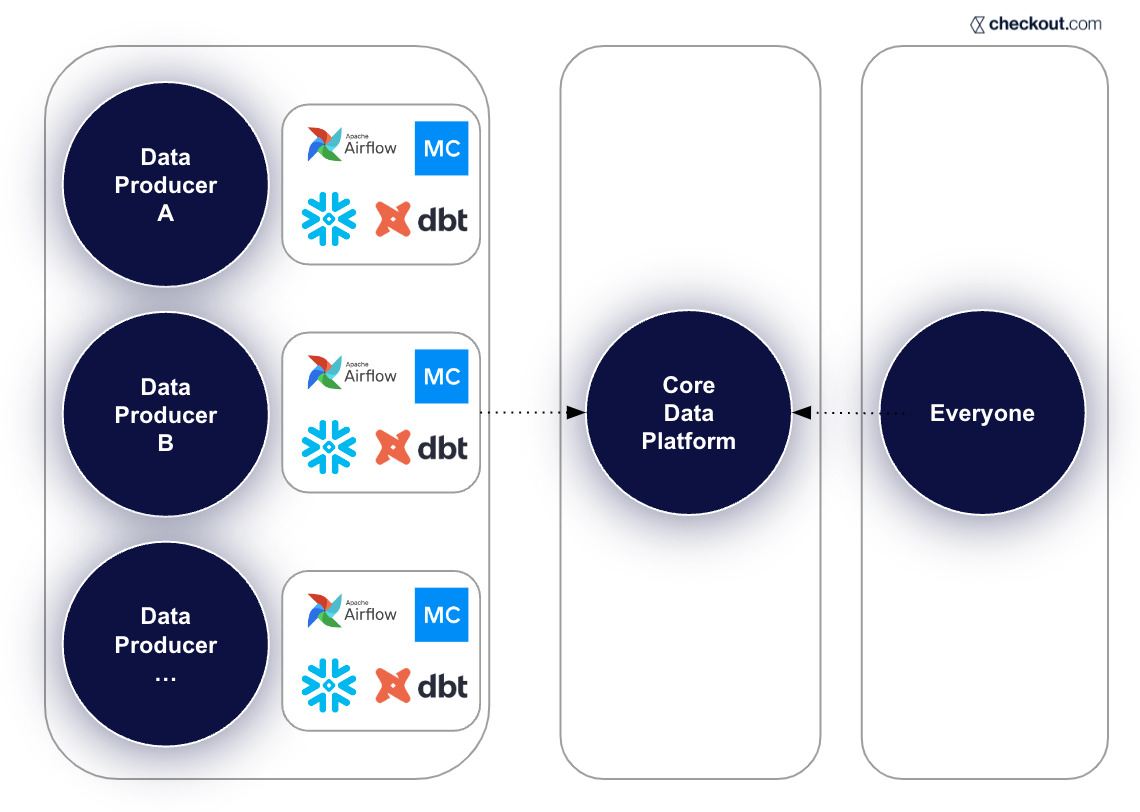

So the team began a ground-up review of their data stack, which led to several significant migrations: from AWS to Google Cloud Platform, Segment to RudderStack, Looker to Omni, and Redshift to BigQuery. They also adopted Fivetran, dbt, Pub/Sub, and Airflow (managed in Google Cloud Composer).

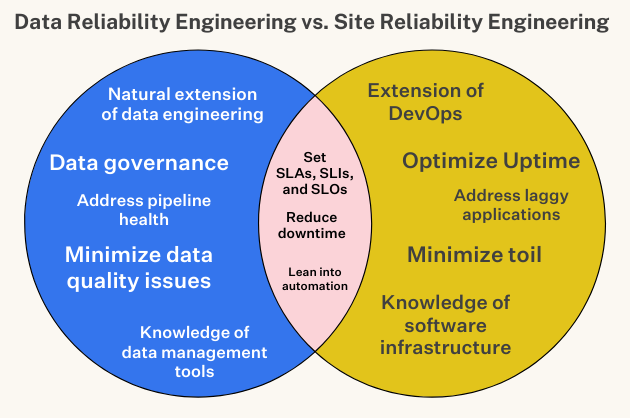

Finally, the data team knew they needed a more robust approach to data quality. While their team and their transactional data volumes were smaller than those of some organizations, their standards for data freshness and reliability were about to skyrocket.

Previously, they relied on manual tests and after-the-fact detection of data issues. “It was often pretty obvious when something was going wrong, but detecting staleness or inadvertent, unexpected schema changes on the back end was quite difficult,” says Ian.

When PrimaryBid was managing deals internally, there would occasionally be instances of reports and dashboards not being updated for 20 or 30 minutes after a deal would go live. “We’ve had to fix it quickly, but it’s not the end of the world, because it’s just an internal team member who notices and alerts us to the issue,” says Jonny. “With internal users, there’s a little bit more slack in the rope. But we needed to get ahead of an external party asking us ‘What’s going on?’ You get one chance with external users, and you can’t blow it.”

The team began exploring possible solutions for data observability. They wanted to be proactive and get ahead of the curve, and after evaluating several options, they chose to implement Monte Carlo’s data observability platform.

“The biggest data consumers in the UK use Monte Carlo,” says Andy. “We needed to improve our confidence in our data, and when we signed up for Monte Carlo, we were sure that we were picking the best-in-class for data observability.”

The solution: data observability

With Monte Carlo’s automated monitoring, alerting, and end-to-end data lineage in place, the PrimaryBid team finally felt they had a security blanket for their data.

Moving forward into their global expansion, they can rest assured that their data will be fresh and trustworthy — and any incidents that might occur will be quick to resolve.

Outcome: Ensuring fresh data

One vital component of PrimaryBid’s data offering is fresh, near real-time data, but with a few unusual dimensions. Orders didn’t arrive in a constant flow; once a deal went live, though, a lot of important activity happened all at once.

“Some of our transactions only last for a few hours, and it’s absolutely critical that our systems are working unbelievably well — and then the deal is closed. It’s done. And then, you’re on to the next deal,” says Andy.

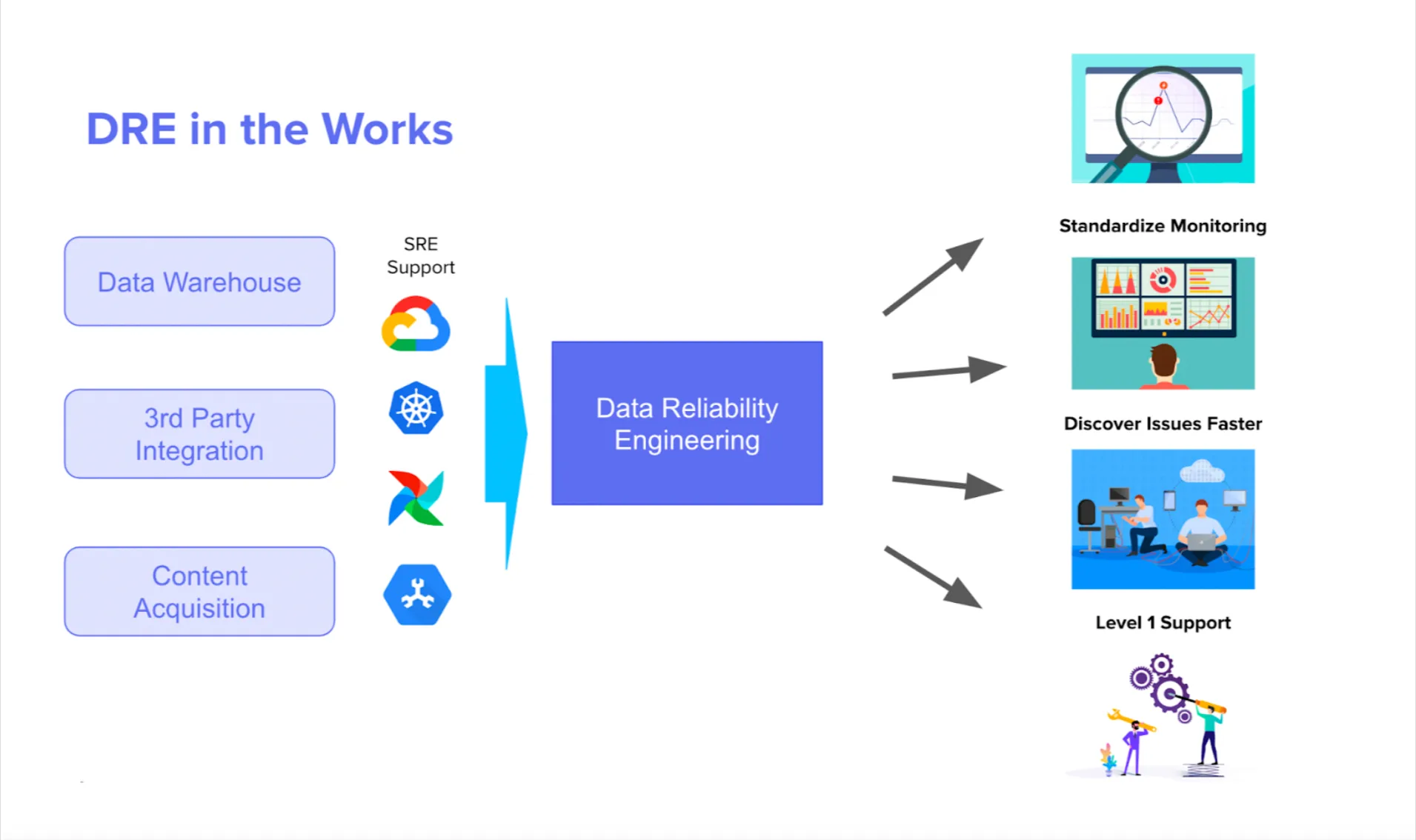

This made real-time processing and guaranteed freshness vital to delivering the scalability and data quality PrimaryBid customers needed. So the data team built a change data capture (CDC) server for writing their own change logs, cutting latency from 10 minutes to 3-4 seconds. And with Monte Carlo’s data observability platform layered across their data stack, they’ll be proactively alerted if any staleness occurs, rather than waiting for an internal or external customer to notice and raise the issue.
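At its core, a staleness alert compares each table’s most recent update time against a maximum allowed lag. The sketch below is a generic illustration of that idea, not Monte Carlo’s implementation or API. The table names and the 30-second threshold are hypothetical, and a fixed threshold stands in for the learned, history-based thresholds an observability platform would typically use.

```python
from __future__ import annotations

from datetime import datetime, timedelta, timezone


def is_stale(last_updated: datetime, now: datetime, max_lag: timedelta) -> bool:
    """Return True if the most recent update is older than the allowed lag."""
    return now - last_updated > max_lag


def check_freshness(
    tables: dict[str, datetime], now: datetime, max_lag: timedelta
) -> list[str]:
    """Return the (sorted) names of tables whose last update exceeds max_lag."""
    return sorted(name for name, ts in tables.items() if is_stale(ts, now, max_lag))


if __name__ == "__main__":
    now = datetime(2024, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
    # Hypothetical tables: one updated seconds ago, one stuck for 20 minutes.
    last_updates = {
        "orders_live": now - timedelta(seconds=4),
        "allocations_live": now - timedelta(minutes=20),
    }
    print(check_freshness(last_updates, now, max_lag=timedelta(seconds=30)))
    # → ['allocations_live']
```

During a live deal, a check like this would run on a short schedule so that a stalled feed surfaces in seconds rather than the 20 or 30 minutes it took internal users to notice.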

Additionally, as PrimaryBid expands to serve new markets, their data ingestion sources will become more varied and changeable. “We’re bringing online a few data sources associated with our move to the US, including some public repositories that require scraping data and running it programmatically,” says Andy. “It will be a very easy-to-break data process since it’s not coming through a vendor or API, so we’ll need observability checks throughout.”

Outcome: Achieving reliable incident management

As the PrimaryBid team was evaluating data observability vendors, they knew incident management would become particularly important as they began operating across global time zones and dealing with a far more complex data landscape.

“The scale, infrastructure, and requirements to hold and host data in these different geographies — you can imagine the number of checks and pipelines are going to scale massively as we do that,” says Andy. “We’re now spinning five times as many plates as we were here in the UK. As we spin those plates, we need to know when they fall.”

Raising alerts and triaging incidents would mean waking people up, so the ability to prioritize and route incidents appropriately was essential. The buying committee found that several observability tools touted machine learning and AI for detecting anomalies from historical usage data and patterns, but only Monte Carlo’s met the bar.

“Monte Carlo was the best platform we evaluated for triaging those problems, allowing you to flag something as expected,” says Andy. “Now that we’re trying to go both to the west with the US and the east with the Middle East, we need a process that is robust, that alerts us to issues, and allows us to resolve stuff that’s not important very, very quickly.”

Monte Carlo’s incident management functionality allows PrimaryBid to set up intelligent alert routing, quickly resolve or de-prioritize incidents, and easily visualize the status of broken pipelines.

Outcome: Delivering the gold standard of data to internal teams

In addition to rebuilding its tech stack, PrimaryBid made organizational changes to support its new focus on reliable data. Teams across the company had maintained their own records and kept data in silos; now they migrated to one version of truth owned by the data function.

These changes meant their data had to be the gold standard, and data observability helps them deliver on that promise and build data trust.

“As teams abandon their own set of reporting and join up with us instead, we can’t be giving them incorrect figures,” says Andy. “Schema changes, breaking changes, freshness, out-of-date data — we need to be all over that to make sure the one version of truth is actually correct.”
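Catching schema and breaking changes comes down to comparing a table’s current schema with the one downstream reports expect. Here is a minimal, generic sketch, assuming schemas are available as `{column: type}` mappings (for example, read from a warehouse’s information schema). The column names are hypothetical, the BigQuery-style type names are only illustrative, and this is not how Monte Carlo implements schema monitoring.

```python
from __future__ import annotations


def diff_schema(expected: dict[str, str], observed: dict[str, str]) -> dict[str, list[str]]:
    """Compare two {column: type} mappings; report added, removed, and retyped columns."""
    added = sorted(set(observed) - set(expected))
    removed = sorted(set(expected) - set(observed))
    retyped = sorted(
        col for col in set(expected) & set(observed) if expected[col] != observed[col]
    )
    return {"added": added, "removed": removed, "retyped": retyped}


def is_breaking(diff: dict[str, list[str]]) -> bool:
    """Treat removed or retyped columns as breaking; new columns are usually additive."""
    return bool(diff["removed"] or diff["retyped"])


if __name__ == "__main__":
    expected = {"deal_id": "STRING", "shares_requested": "INT64", "updated_at": "TIMESTAMP"}
    observed = {"deal_id": "STRING", "shares_requested": "FLOAT64", "region": "STRING"}
    d = diff_schema(expected, observed)
    print(d)
    # → {'added': ['region'], 'removed': ['updated_at'], 'retyped': ['shares_requested']}
    print(is_breaking(d))
    # → True
```

Separating the diff from the “is it breaking?” decision lets a team alert loudly on removed or retyped columns while only logging additive changes.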

The data team also had to ensure its machine learning and AI teams could depend on trustworthy data.

“Building and maintaining predictive models requires a steady stream of market stock price data,” says Andy. “The source of that data needs to be absolutely 100% robust, or the predictions coming out of the models that we’re serving in production will fail.”

By allowing the data team to receive instant alerts and proactively communicate any data downtime issues with impacted users, data observability will help maintain data trust across the organization.

Getting started with data observability

The PrimaryBid team sees data observability as a must-have for any organization that places a premium on data — and in their eyes, that’s pretty much everyone these days.

“In the last 18 months to two years, data has become a tier one service offered by the business — both to internal users, and now to external partners as well,” says Andy.

“Things being broken or processes not working is no longer acceptable. It’s no longer ‘We can’t do this week’s report’ — it’s ‘A client is unable to get value from a data product they’ve paid for’. In my opinion, that pivot means data observability is massively more important than it was five years ago.”

Ready to learn how data observability can help your organization take its data quality to the next level? Contact our team to learn more.
