How to Build a Data Quality Integrity Framework

In a data-driven world, data integrity is the law of the land. And if data integrity is the law, then a data quality integrity framework is the FBI, the FDA, and the IRS all rolled into one.

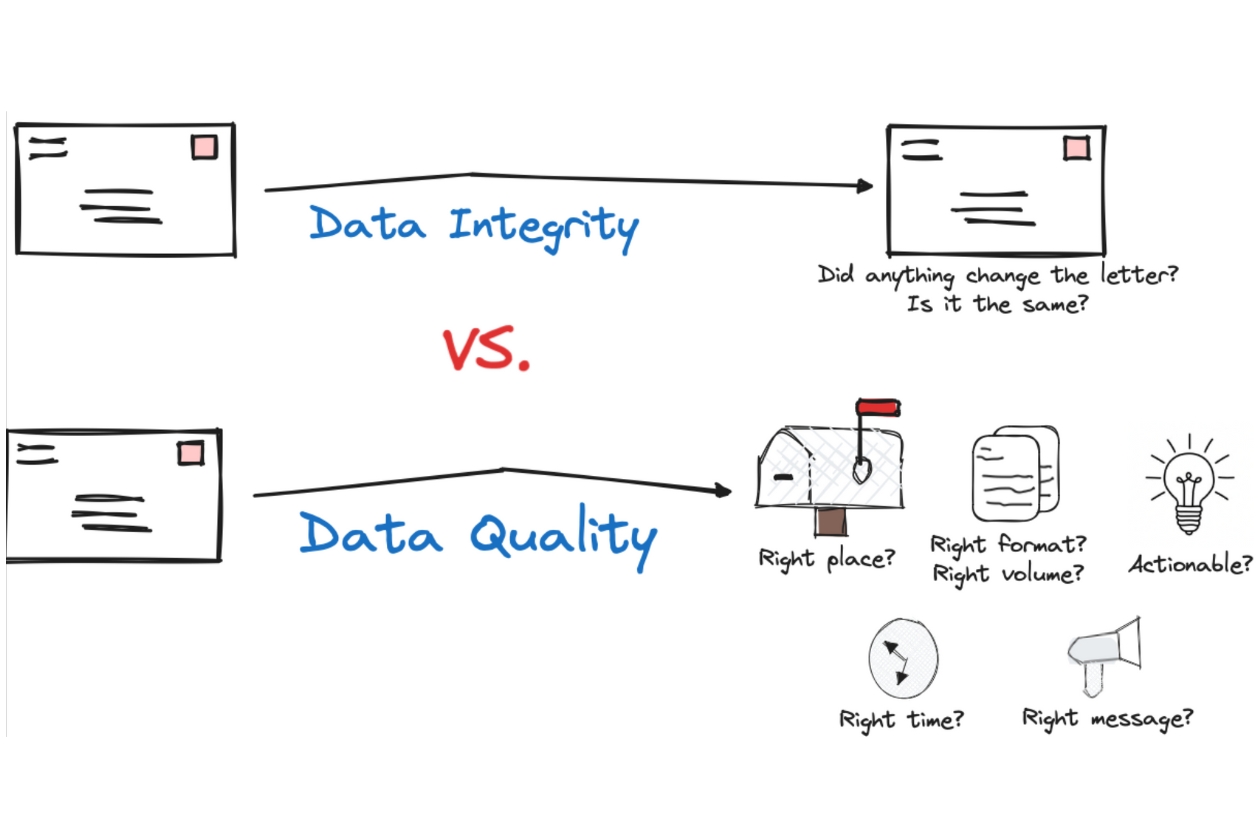

Data integrity is the ability to trust that a company’s data is reliable, compliant, and secure based on internal, industry, and regulatory standards.

Because if we can’t trust our data, we can’t trust the products it powers, either.

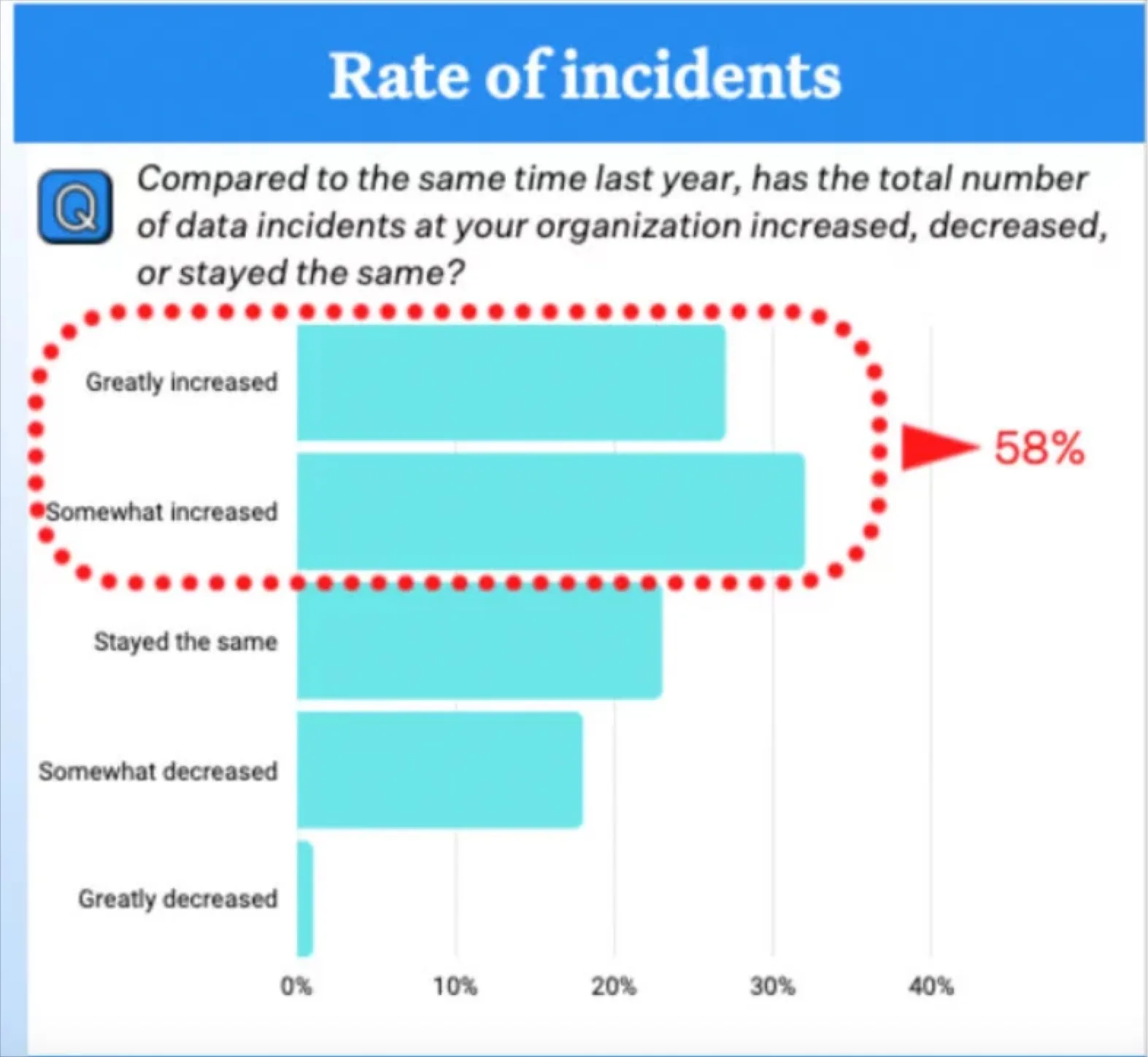

So, as organizations ingest more and more data and build increasingly complex pipelines to support new use cases, protecting data integrity matters more than ever.

In this article, we’ll define what a data integrity framework is, how it supports data trust across an organization, and what you’ll need to get started.

Sound like what you’re looking for? Let’s jump into it.

What is a Data Integrity Framework?

A variety of factors can threaten the integrity of your data, from human error and ineffective training to a lack of security and liberal data access.

A data integrity framework is a system of processes and technologies that work together to improve data quality, security, and compliance at scale. An effective data integrity framework will account for both the physical integrity of an organization’s data (i.e., proper storage and continued access) as well as its logical integrity (i.e., ensuring the data is accurate and reliable).

The need for data integrity isn’t limited by industry or team size either. Data teams of all stripes have an imperative to ensure data meets internal and external standards. And in the same way that no two organizations are identical, no two data integrity frameworks will be either.

For example, companies that rely on CRMs might mitigate the risks of broad domain access with a framework that includes data collection controls, human-error checks, restricted raw data access, cybersecurity countermeasures, and frequent data backups.

On the other hand, healthcare organizations with strict compliance standards for sensitive patient information might require a completely different set of data integrity processes to meet internal and external standards. According to a study published in 2021, “Attackers specifically target healthcare sub-domains to manipulate valuable data. Hence, protecting the integrity of data in medical [sic] is the most prioritized issue.” The study goes on to suggest that healthcare companies should consider data integrity frameworks that leverage blockchain technologies in addition to other data quality measures.

In the supply chain industry, where speed is paramount, a data integrity framework can deliver faster and more accurate tracking information or support more informed decisions about suppliers, shipping carriers, or even replenishment thresholds. Supply chain management company Zencargo recommends a data integrity framework that automates rules for exception management, integrates various data sources, and automates data updates across sources.

Key Components of a Data Integrity Framework

Data integrity doesn’t exist in a vacuum: it’s the result of thoughtful processes and procedures that combine to deliver data that’s accurate, complete, consistent, reliable, and accessible. A comprehensive and effective data integrity framework should consider each of the following components:

Identify data integrity requirements

While “data integrity” may sound like a fairly qualitative term, your own data integrity framework will be defined by a variety of objective requirements that must be fulfilled to achieve it. Here are some of the requirements you’ll need to define at the outset of developing your data integrity framework:

Regulatory requirements

According to Compliance Online, regulatory requirements for data integrity include:

- Frequent, comprehensive data back-ups

- Physical data security (i.e., storing data in its original unaltered format and protecting it from deletion)

- Comprehensive documentation

- Storage of original records or true copies

- Complete data

Data quality

In addition to the regulatory requirements of data integrity, a comprehensive data integrity framework needs to account for the quality of the data.

A complete data quality solution should address each of the five pillars of data observability: freshness, quality, volume, schema, and lineage. These five pillars combine to facilitate data that’s accurate, complete, and reliable for production.
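To make a few of these pillars concrete, here’s a minimal Python sketch of freshness, volume, and schema checks against a table’s metadata. The function names, thresholds, and column lists are hypothetical; in practice you’d pull this metadata from your warehouse, and you’d likely want thresholds derived from historical behavior rather than hard-coded values.

```python
from datetime import datetime, timedelta, timezone


def check_freshness(last_updated, max_age, now):
    """Freshness: has the table been updated recently enough?"""
    return now - last_updated <= max_age


def check_volume(row_count, expected_min, expected_max):
    """Volume: is the row count within the expected range?"""
    return expected_min <= row_count <= expected_max


def check_schema(actual_columns, expected_columns):
    """Schema: report columns that were added or dropped."""
    actual, expected = set(actual_columns), set(expected_columns)
    return {"added": sorted(actual - expected), "dropped": sorted(expected - actual)}


# Hypothetical metadata snapshot for a single table.
now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
print(check_freshness(datetime(2024, 1, 2, 6, 0, tzinfo=timezone.utc), timedelta(hours=24), now))  # True
print(check_volume(9_500, 10_000, 12_000))  # False
print(check_schema(["id", "email", "signup_ts"], ["id", "email", "country"]))
# {'added': ['signup_ts'], 'dropped': ['country']}
```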

Data security

Finally, for a data integrity framework to be considered complete, it needs to account for data security. Because so much data usage carries compliance obligations, data security is a core part of integrity: it protects data from leaks, corruption, and piracy.

Some of the data security processes you might choose to operationalize within your data integrity framework include monitoring access controls and maintaining audit trails to understand who’s accessing the data and when.
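An audit trail can start as simply as recording who touched which dataset and when. The sketch below wraps a data-access function in a Python decorator that appends a log entry on every call. The `audited` decorator, the `patient_records` resource, and the in-memory `AUDIT_LOG` list are hypothetical stand-ins; a real trail belongs in append-only storage, and many warehouses also keep their own access history.

```python
import functools
from datetime import datetime, timezone

# In-memory audit log for illustration only; a real trail would be written
# to append-only, tamper-evident storage.
AUDIT_LOG = []


def audited(resource):
    """Record who accessed `resource` and when, before running the call."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(user, *args, **kwargs):
            AUDIT_LOG.append({
                "user": user,
                "action": func.__name__,
                "resource": resource,
                "at": datetime.now(timezone.utc).isoformat(),
            })
            return func(user, *args, **kwargs)
        return wrapper
    return decorator


@audited("patient_records")
def read_patients(user, limit=10):
    # Stand-in for a real query against a sensitive table.
    return [{"id": i} for i in range(limit)]


read_patients("alice", limit=2)
for entry in AUDIT_LOG:
    print(entry["user"], entry["action"], entry["resource"])
# alice read_patients patient_records
```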

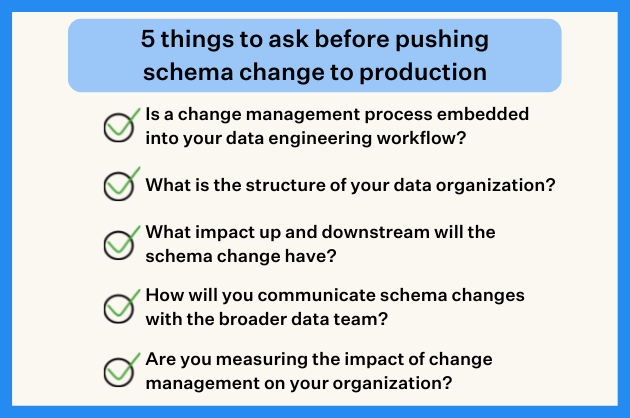

Establish data validation and consistency rules

One of the keys to data reliability is consistency. When values drift several standard deviations away from their historical norm, there’s a good chance there’s a gremlin or two in the pipeline. So, how do you know when someone’s been feeding the data after midnight?
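One way to make “too far from normal” measurable is a z-score test: compare a new value to the mean and standard deviation of its history. This is a minimal sketch using Python’s standard `statistics` module; the three-standard-deviation threshold and the daily row counts are illustrative assumptions, not recommended settings.

```python
import statistics


def is_anomalous(history, value, threshold=3.0):
    """Flag `value` if it lies more than `threshold` sample standard
    deviations from the mean of `history` (a simple z-score test)."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)  # needs at least two data points
    if stdev == 0:
        # A perfectly flat history: any change at all is unusual.
        return value != mean
    return abs(value - mean) / stdev > threshold


# Hypothetical daily row counts for one table.
daily_row_counts = [1000, 1020, 980, 1010, 990, 1005, 995]
print(is_anomalous(daily_row_counts, 1012))  # False
print(is_anomalous(daily_row_counts, 400))   # True
```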

Data validation is the process of restricting values to a predefined range based on historical data to eliminate errors and inconsistencies within the data and provide context to a given data field.

These rules can include validating data types and ranges, validating data against an external source, validating the structure of data, or ensuring the logical consistency of data with rules to validate that dates are arranged chronologically, words are spelled correctly, etc.
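Here’s a minimal sketch of how several of these rule types (data type, range, structure, and chronological consistency) might look as code. The `validate_order` function, its field names, and the 1 to 1,000 quantity range are hypothetical; real rules would come from your own data contracts and historical ranges.

```python
from datetime import date


def validate_order(order):
    """Return a list of rule violations for one order record (empty if valid)."""
    errors = []
    # Type rule: quantity must be an integer.
    if not isinstance(order.get("quantity"), int):
        errors.append("quantity must be an integer")
    # Range rule: quantity must fall within an expected range.
    elif not 1 <= order["quantity"] <= 1000:
        errors.append("quantity out of range (1-1000)")
    # Structure rule: required fields must be present.
    for field in ("order_id", "ordered_on", "shipped_on"):
        if field not in order:
            errors.append(f"missing field: {field}")
    # Logical-consistency rule: an order can't ship before it was placed.
    if "ordered_on" in order and "shipped_on" in order:
        if order["shipped_on"] < order["ordered_on"]:
            errors.append("shipped_on precedes ordered_on")
    return errors


good = {"order_id": 1, "quantity": 3, "ordered_on": date(2024, 3, 1), "shipped_on": date(2024, 3, 2)}
bad = {"order_id": 2, "quantity": 0, "ordered_on": date(2024, 3, 5), "shipped_on": date(2024, 3, 1)}
print(validate_order(good))  # []
print(validate_order(bad))   # ['quantity out of range (1-1000)', 'shipped_on precedes ordered_on']
```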

```sql
-- Count non-null values that don't match any code in the ISO 3166-1 alpha-2 lookup table
SELECT COUNT(*) AS num_invalid_codes
FROM table_name
WHERE column_name IS NOT NULL
  AND column_name NOT IN (
    SELECT code FROM iso_3166_1_alpha_2
  );
```

Example of a data validation test that compares the country codes in your column to the list of valid codes in the lookup table.

While data validation can technically be managed manually, it’s time-consuming and prone to human error in its own right. If you can, establish automated data validation and consistency rules instead to speed time-to-value and limit errors within your validation practices.
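As a small example of automation, the sketch below runs the country-code validation query from the SQL example above and reports a failure when it finds invalid codes. It uses Python’s built-in `sqlite3` module with an in-memory database purely for illustration; the `customers` table, `country_code` column, and sample rows are hypothetical. In a real pipeline you’d run the same query against your warehouse on a schedule, for example from your orchestration tool.

```python
import sqlite3

# The validation query from the example above, with hypothetical table and
# column names (customers.country_code) filled in.
CHECK_SQL = """
SELECT COUNT(*) AS num_invalid_codes
FROM customers
WHERE country_code IS NOT NULL
  AND country_code NOT IN (SELECT code FROM iso_3166_1_alpha_2)
"""


def count_invalid_codes(conn):
    """Run the check and return the number of invalid country codes."""
    return conn.execute(CHECK_SQL).fetchone()[0]


conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE iso_3166_1_alpha_2 (code TEXT PRIMARY KEY);
INSERT INTO iso_3166_1_alpha_2 VALUES ('US'), ('DE'), ('JP');
CREATE TABLE customers (id INTEGER, country_code TEXT);
INSERT INTO customers VALUES (1, 'US'), (2, 'XX'), (3, NULL), (4, 'de');
""")

invalid = count_invalid_codes(conn)
if invalid:
    # In a scheduled job you might raise an exception here to fail the run.
    print(f"Validation failed: {invalid} invalid country codes")
# Validation failed: 2 invalid country codes
```

SQLite compares text case-sensitively by default, so the lowercase `'de'` is counted as invalid alongside `'XX'`, while the `NULL` row is skipped by the `IS NOT NULL` condition.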

Perform a data audit

Before you can establish a framework to ensure the future integrity of your data, you first need to understand the state it’s in today. A data audit can help you understand the challenges and opportunities associated with data across your organization.

Your data audit should include three steps:

- Implement first-party data best practices, including appropriate consent measures.

- Establish best practices for compliance to understand what data is collected and how it can be used now and in the future.

- Identify and remediate gaps within your strategy and your data stack.
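For the gap-finding step, a quick column profile can show where data is missing or has less variety than you’d expect. The `profile` function and sample rows below are a hypothetical sketch rather than a complete audit; here, the missing values in the `consent` column are the kind of gap you’d flag against your consent practices.

```python
def profile(rows):
    """Summarize each column's null rate and number of distinct non-null
    values across a list of dict records."""
    columns = sorted({key for row in rows for key in row})
    report = {}
    for col in columns:
        # A key absent from a record is treated the same as an explicit None.
        values = [row.get(col) for row in rows]
        non_null = [v for v in values if v is not None]
        report[col] = {
            "null_rate": round(1 - len(non_null) / len(values), 2),
            "distinct": len(set(non_null)),
        }
    return report


# Hypothetical sample of customer records.
rows = [
    {"email": "a@example.com", "consent": True},
    {"email": None, "consent": True},
    {"email": "a@example.com"},
]
for col, stats in profile(rows).items():
    print(col, stats)
# consent {'null_rate': 0.33, 'distinct': 1}
# email {'null_rate': 0.33, 'distinct': 1}
```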

Implement access controls and security measures

One of the most obvious threats to data quality and integrity is access. Providing too much access to too many users can threaten not only your data’s security but its quality as well.

As you establish your data integrity framework, implement controls that give each user only the access their domain requires, minimizing both the physical and logical risks to data integrity.
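Least-privilege access often boils down to a deny-by-default lookup: a role can perform an action on a domain only if it was explicitly granted. The role names and grants below are hypothetical, and in practice you’d express them as warehouse grants or IAM policies rather than an in-code dictionary.

```python
# Hypothetical role-to-domain grants: role -> domain -> allowed actions.
ROLE_GRANTS = {
    "marketing_analyst": {"marketing": {"read"}},
    "finance_engineer": {"finance": {"read", "write"}},
    "data_admin": {"marketing": {"read", "write"}, "finance": {"read", "write"}},
}


def is_allowed(role, domain, action):
    """Deny by default: allow only actions explicitly granted to the role."""
    return action in ROLE_GRANTS.get(role, {}).get(domain, set())


print(is_allowed("marketing_analyst", "marketing", "read"))   # True
print(is_allowed("marketing_analyst", "marketing", "write"))  # False
print(is_allowed("marketing_analyst", "finance", "read"))     # False
```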

Plan for data backups and recovery

Frequent data backups may be required for regulatory compliance, but they’re also good practice for companies in unregulated industries. As the team at Infosec Institute put it, “No security solution can guarantee that something wrong will not happen to your data for whatever reason. The only way to be sure that you will get off scot-free if something happens to your data is to regularly and properly back it up.”

Do yourself a favor and make data backups a component of your data integrity framework. You’ll sleep a little easier.
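A backup only helps if it’s intact, so a common practice is to verify each copy against a checksum of the original, which also supports the “true copies” requirement listed earlier. This minimal sketch uses Python’s standard `hashlib`, `shutil`, and `pathlib` modules; the `backup_and_verify` function and file names are hypothetical, and a production setup would add scheduling, retention, off-site storage, and periodic restore tests.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path):
    """Hash a file in chunks so large files don't need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup_and_verify(source, backup_dir):
    """Copy `source` into `backup_dir` and confirm the copy is byte-identical."""
    backup_dir = Path(backup_dir)
    backup_dir.mkdir(parents=True, exist_ok=True)
    target = backup_dir / Path(source).name
    shutil.copy2(source, target)  # copies contents plus file metadata
    if sha256_of(source) != sha256_of(target):
        raise RuntimeError(f"Backup of {source} failed verification")
    return target


with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "orders.csv"
    src.write_text("id,amount\n1,9.99\n")
    copy = backup_and_verify(src, Path(tmp) / "backups")
    print(copy.read_text() == src.read_text())  # True
```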

Monitor and maintain data integrity

Ensuring data integrity is never a one-and-done exercise: establishing a data integrity framework is just the first step in a constant, consistent process of data monitoring and maintenance. It’s critical to institute steps that enable transparent oversight of your company’s data, so you can intervene early when something goes wrong. That’s where data observability comes into play.

A data observability platform gives data leaders unparalleled visibility into their data’s integrity with automated monitoring, automated root cause analysis, and automated lineage to detect, resolve, and prevent data incidents faster.
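Lineage is what turns an alert into a scoped incident: once you know a table is broken, you can walk the graph to find everything downstream of it. The sketch below is a generic breadth-first traversal over a hypothetical table-level lineage map; it illustrates the idea and isn’t how Monte Carlo or any other platform implements lineage.

```python
from collections import deque

# Hypothetical table-level lineage: each table maps to the tables that read from it.
LINEAGE = {
    "raw.orders": ["staging.orders"],
    "staging.orders": ["marts.revenue", "marts.customer_ltv"],
    "marts.revenue": ["dashboards.exec_kpis"],
    "marts.customer_ltv": [],
    "dashboards.exec_kpis": [],
}


def downstream_of(table, lineage):
    """Breadth-first walk of the lineage graph to find every asset an
    incident in `table` could affect."""
    seen, queue = set(), deque([table])
    while queue:
        for child in lineage.get(queue.popleft(), []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return sorted(seen)


print(downstream_of("staging.orders", LINEAGE))
# ['dashboards.exec_kpis', 'marts.customer_ltv', 'marts.revenue']
```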

Implementing Data Integrity in Practice

Once you’ve determined the key components of your data integrity framework, it’s time to implement it.

Successfully implementing a data integrity framework is more than flipping a switch. It requires structural and cultural changes, careful adherence to best practices, and a plan to address challenges that are bound to arise throughout the process.

How to set up a data integrity framework

The data integrity framework you’re creating won’t exist in a silo. It will require buy-in from multiple stakeholders—including business leaders and your own data team—in order to be successful.

To facilitate adoption, consider forming a data governance board that oversees your data assets and sets the strategic direction of initiatives like those recommended in your integrity framework. In addition to establishing the policies and practices for data management, this board can work to generate organization-wide support for data integrity management over the long term.

In addition to a data governance board, your data integrity framework implementation will also require clear documentation to identify and define practices of data management, architecture, and integration within your organization.

Certain aspects of your data integrity framework may also require specific tools and technologies like observability or orchestration. Before you design your framework, ensure that resources are available and earmarked for any required tooling that may be identified during the design process. This will expedite approval and development when you get to the implementation phase of the project.

Best practices for data integrity management

As you prepare to manage data integrity, consider instituting certain best practices to make data integrity maintenance a bit easier over the long haul, like:

- Maintain stricter access controls

- Automate audit trails

- Schedule consistent data backups

- Keep software up-to-date and understand the weaknesses of your systems

- Manage the physical security of your organization’s data with broader security protocols

- Create a culture of data integrity across the organization, with educational resources and training to keep users’ skills fresh

Overcoming challenges during data integrity implementation

Implementing a data integrity framework is an essential step in maintaining data integrity over time, but it doesn’t come without its challenges. As you build your framework, it’s important to prepare a plan that will equip you to overcome potential challenges with a combination of processes, procedures, and external tools.

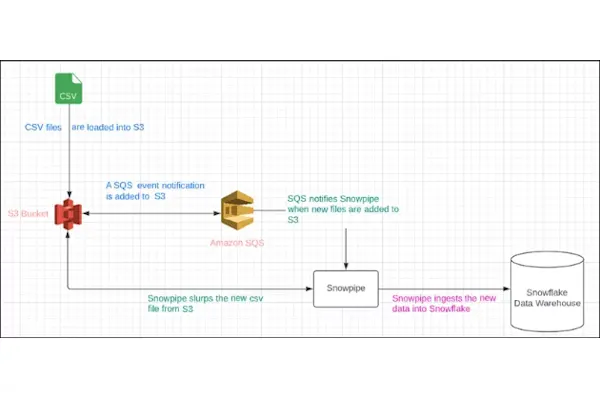

For example, disparate data sources that don’t integrate easily threaten data integrity through inconsistencies, inaccuracies, duplication, and diluted security. As you build your data integrity framework, look for ways to integrate your data more seamlessly, such as using cloud-based orchestration tools to control ingestion across siloed pipelines and end-to-end observability for a complete picture of your data’s health throughout your stack. You should also consider replacing manual data entry with automated tools wherever possible to reduce the potential for human error within your database.
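Before integrating two sources, it helps to reconcile them on a shared key to surface missing records and conflicting values. This is a minimal sketch: the `reconcile` function, the `customer_id` key, and the `crm` and `billing` records are hypothetical, and real reconciliation usually needs normalization (casing, whitespace, formats) before values are compared.

```python
def reconcile(source_a, source_b, key="customer_id"):
    """Compare records from two systems by `key` and report records that
    exist in only one system, plus shared fields whose values disagree.
    If a key repeats within one source, the last record wins."""
    a = {row[key]: row for row in source_a}
    b = {row[key]: row for row in source_b}
    report = {
        "only_in_a": sorted(a.keys() - b.keys()),
        "only_in_b": sorted(b.keys() - a.keys()),
        "conflicts": [],
    }
    for k in sorted(a.keys() & b.keys()):
        for field in sorted(a[k].keys() & b[k].keys()):
            if a[k][field] != b[k][field]:
                report["conflicts"].append((k, field, a[k][field], b[k][field]))
    return report


# Hypothetical extracts from two systems that should agree.
crm = [{"customer_id": 1, "email": "ann@example.com"}, {"customer_id": 2, "email": "bo@example.com"}]
billing = [{"customer_id": 2, "email": "bo@exmaple.com"}, {"customer_id": 3, "email": "cy@example.com"}]
print(reconcile(crm, billing))
# {'only_in_a': [1], 'only_in_b': [3], 'conflicts': [(2, 'email', 'bo@example.com', 'bo@exmaple.com')]}
```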

A lack of existing auditing and security measures also poses a challenge. Data security is absolutely essential to data integrity, but implementing net-new practices where they didn’t exist previously is never an easy proposition. Before implementing your data integrity framework, establish a transparent system with clearly defined roles and responsibilities to prepare teams to take the right actions when the time comes.

Maintaining Data Integrity Over the Long-Term

Of course, implementation is just the beginning of your data integrity journey.

Once you’ve implemented your data integrity framework, you’ll need to be ready to maintain that integrity over the long term.

Here are some best practices for maintaining data integrity:

Conduct regular data audits and assessments

Make data audits and assessments a regular part of your organization’s protocols. As your organization evolves, your data will too, so it’s important to set aside time on a recurring basis to review your data and identify areas for improvement.

Strive for continuous improvement and adaptation

Consider your framework a dynamic tool that will grow and adapt with your organization. Once you’ve implemented your data integrity framework, strive for continuous improvement by constantly refining your system to better meet the needs of your organization and its stakeholders. Better processes, better tools, better oversight—all these can work to strengthen your framework and deliver more trustworthy data to your consumers.

Provide ongoing training and education

Data integrity is a team sport. It requires involvement and enthusiasm from players at every level of your organization—not just the members of your data team. Create a culture that understands and values data integrity by providing ongoing training and education to ensure policy compliance, mitigate security threats, and establish buy-in across stakeholders.

Leverage Monte Carlo for Data Integrity and Observability

Creating a culture of data integrity is a journey—but it’s one you don’t have to take alone. By leveraging Monte Carlo’s data observability platform, maintaining data quality instantly becomes the simplest part of your data integrity framework.

As a critical component of your data integrity process, Monte Carlo’s automated monitoring provides visibility into the health of your entire database at a glance. And as you develop SLAs and thresholds to fine-tune your data integrity, our deep custom monitors are right there with you.

From table and column-level lineage to streamlined user dashboards, Monte Carlo’s end-to-end observability democratizes data quality and provides unparalleled visibility into data integrity when and where you need it most.

Ready to learn more? Schedule a demo to find out how Monte Carlo’s data observability can elevate your data integrity today.
