Must-have Priorities for Your Data Team in 2021

Over the past few weeks, I’ve had lots of conversations with some of the world’s best data teams about their 2021 priorities. While many are focused on upgrading or scaling out existing infrastructure, two “resolutions” have really stood out to me:

- Bringing engineering and data organizations closer together

- Directly connecting data producers with data consumers

Unlike so many others, these two priorities are decidedly organizational rather than technical. They speak to the need not just for smarter tooling (think: augmented analytics, data lakehouses, and data platforms) but also for an entirely new mindset about building and scaling data teams.

Let’s start with the facts. Modern companies are leveraging more and more data to stay ahead of the competitive curve and drive innovation. As a result, more and more people at these companies are accessing and using this data to drive critical business functions.

To support this kind of domain-oriented data infrastructure, many data teams are adopting a “data mesh” approach that lets cross-functional teams treat “data-as-a-product,” with each domain handling its own pipelines and analytics.

The “data mesh” concept continues to gain steam, and while I fully support it, the fact of the matter is that most organizations aren’t set up for success when they start to adopt it. To move fast at scale, engineering teams and data organizations need to work together. For many companies, these two areas work in silos, making it easy to “mesh” up this approach.

That being said, if you’re starting out with those two priorities above, you’re well on your way to success. Here’s how to get there:

Apply a DevOps Mindset to Data

First, we’ve seen firsthand that introducing software engineering and DevOps concepts to data teams and workflows is a surefire way to improve data reliability and, in turn, data trust across the organization.

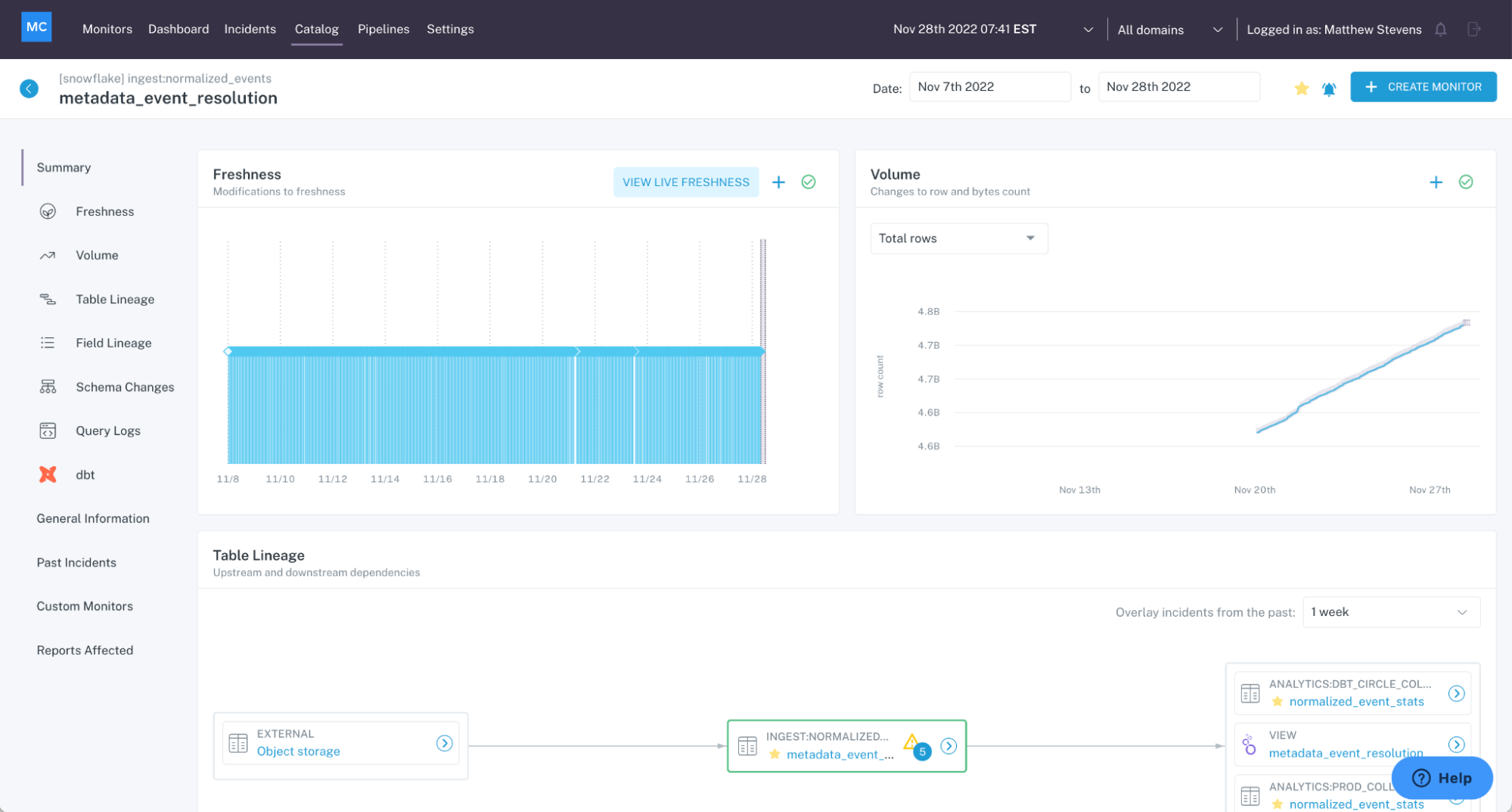

Specifically, data teams can apply data observability best practices to monitoring, alerting on, and troubleshooting the data incidents that arise in their pipelines. Better yet, using lineage to map upstream and downstream dependencies makes it that much easier for engineering and data teams to collaborate when things break.
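To make the lineage point concrete, here is a minimal sketch of an impact analysis: given a broken upstream table, walk the lineage graph to list every downstream asset that could be affected. It assumes lineage is available as a simple adjacency map from each table to the tables that read from it directly; the table names and the `downstream_of` helper are hypothetical, not any particular tool's API.

```python
from collections import deque
from typing import Dict, List

# Hypothetical lineage graph: each table maps to the tables that read from it directly.
LINEAGE: Dict[str, List[str]] = {
    "raw.orders": ["staging.orders"],
    "staging.orders": ["marts.revenue", "marts.customer_ltv"],
    "marts.revenue": ["dashboards.exec_kpis"],
    "marts.customer_ltv": [],
    "dashboards.exec_kpis": [],
}


def downstream_of(table: str, lineage: Dict[str, List[str]]) -> List[str]:
    """Return every asset that depends on `table`, directly or transitively,
    in breadth-first order (closest dependents first)."""
    seen = {table}
    affected: List[str] = []
    queue = deque([table])
    while queue:
        current = queue.popleft()
        for child in lineage.get(current, []):
            if child not in seen:  # guard against revisiting shared dependents
                seen.add(child)
                affected.append(child)
                queue.append(child)
    return affected


if __name__ == "__main__":
    # If raw.orders breaks, these are the assets whose owners may need a heads-up.
    print(downstream_of("raw.orders", LINEAGE))
```

Breadth-first order lists the closest dependents first, which roughly matches who should hear about an incident first: the engineers who own staging tables before the analysts who own dashboards.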

Pro-tip: The most impactful step is to use an SLA/SLO framework (coupled with end-to-end observability to monitor it!) to ensure alignment and accountability. This post by eBay’s Head of Product, Data Services, has some great insights on this.
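As one illustration of what an SLO for data might look like, the sketch below assumes a freshness objective: a table’s latest load must be no more than a given age in at least a target fraction of periodic checks. The SLI is simply the fraction of checks that passed. The `FreshnessSLO` class, `evaluate_slo` function, table name, and thresholds are all hypothetical; real teams define SLOs on whatever dimensions (freshness, volume, schema, distribution) matter to their consumers.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Tuple


@dataclass
class FreshnessSLO:
    """Hypothetical freshness SLO: the table's latest load must be at most
    `max_staleness` old in at least `target` (a fraction) of checks."""
    table: str
    max_staleness: timedelta
    target: float


def evaluate_slo(slo: FreshnessSLO,
                 observations: List[Tuple[datetime, datetime]]) -> Tuple[float, bool]:
    """Each observation is (checked_at, last_loaded_at).
    Returns the SLI (fraction of fresh checks) and whether it meets the target."""
    if not observations:
        raise ValueError("need at least one observation to compute an SLI")
    fresh = sum(1 for checked_at, last_loaded in observations
                if checked_at - last_loaded <= slo.max_staleness)
    sli = fresh / len(observations)
    return sli, sli >= slo.target


if __name__ == "__main__":
    start = datetime(2021, 1, 4)
    slo = FreshnessSLO("marts.revenue", timedelta(hours=6), target=0.75)
    checks = [
        (start + timedelta(hours=6), start + timedelta(hours=1)),    # 5h old: pass
        (start + timedelta(hours=12), start + timedelta(hours=7)),   # 5h old: pass
        (start + timedelta(hours=18), start + timedelta(hours=7)),   # 11h old: fail
        (start + timedelta(hours=24), start + timedelta(hours=23)),  # 1h old: pass
    ]
    print(evaluate_slo(slo, checks))  # (0.75, True)
```

Writing the objective down as data, with a named table and an explicit target, is what creates the accountability: when the SLI drops below target, there is an agreed threshold and an obvious owner to go to.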

Invest in Data Discovery

Once teams are aligned on clear ownership of and accountability for specific data domains, they must turn to data discovery to reduce the friction of operationalizing data and insights.

Data discovery holds data owners accountable for their data as products and facilitates communication about data distributed across different locations. Once data has been served to and transformed by a given domain, the domain’s data owners can leverage it for their operational or analytic needs. Unlike a data catalog, data discovery surfaces a real-time understanding of the data’s current state, as opposed to its ideal or “cataloged” state.

Pro-tip: Having a shared, self-service platform for technical and non-technical users alike to find what data they need and to better understand it (where does it come from, is it up to date, etc.) is critical to empowering data consumers.
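A minimal sketch of the two questions above (“where does it come from?” and “is it up to date?”) might look like the following. It assumes a hypothetical in-memory registry standing in for a discovery platform’s metadata store, where `last_updated` is observed from the warehouse rather than hand-documented; `AssetInfo`, `REGISTRY`, `describe`, and the owner and table names are all illustrative.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Dict, List, Optional


@dataclass
class AssetInfo:
    name: str
    owner: str  # the domain team accountable for this data product
    upstream: List[str] = field(default_factory=list)  # direct sources
    last_updated: Optional[datetime] = None  # observed load time, not a documented one


# Hypothetical metadata registry; a real platform would populate this automatically.
REGISTRY: Dict[str, AssetInfo] = {
    "marts.revenue": AssetInfo("marts.revenue", "finance-data",
                               ["staging.orders"], datetime(2021, 1, 4, 6, 0)),
    "staging.orders": AssetInfo("staging.orders", "commerce-eng",
                                ["raw.orders"], datetime(2021, 1, 4, 5, 30)),
}


def describe(name: str, now: datetime, max_age: timedelta) -> dict:
    """Answer the self-service questions: who owns it, where it comes from,
    and whether its current (observed) state is fresh enough to trust."""
    asset = REGISTRY[name]
    up_to_date = (asset.last_updated is not None
                  and now - asset.last_updated <= max_age)
    return {
        "owner": asset.owner,
        "comes_from": asset.upstream,
        "last_updated": asset.last_updated,
        "up_to_date": up_to_date,
    }


if __name__ == "__main__":
    print(describe("marts.revenue", datetime(2021, 1, 4, 9, 0), timedelta(hours=6)))
```

The key design choice mirrors the catalog-versus-discovery distinction above: `up_to_date` is computed from the observed load time at query time, so the answer reflects the data’s current state rather than what someone once wrote down about it.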

By adopting DevOps best practices and investing in automated, scalable data discovery, data teams can meet these priorities — and more. With the right approach, greater data reliability and collaboration are in sight.

Interested in learning more about the relationship between data observability and discovery? Reach out to Scott and the rest of the Monte Carlo team!

Here are a few more resources that should give you a good idea of how to think about building your modern data team:

- Product demo

- What is data observability?

- What is a data mesh--and how not to mesh it up

- The ULTIMATE Guide To Data Lineage