Table Types Are Evolving And So Is Monte Carlo

Tables seem like a simple concept. You have columns and you have rows, what could be easier?

As any data engineer currently rolling their eyes would tell you, the reality has long been more nuanced. Is a materialized view a view or a table? Should you distinguish between tables and external tables? And what about wildcard tables?

These questions just scratch the surface of the painful delightfully complex world of data engineering.

And as crazy as it sounds at first blush, innovations in table formats got prime air-time at both Snowflake and Databricks annual conferences in 2023. There is a method to the madness–these and other table innovations will support new workflows that allow engineers to deliver data more quickly, and with the help of Monte Carlo, more reliably.

So let’s dive into a quick high-level overview of how data observability works and then dive into some of the nuances of Iceberg and Delta tables as well as Delta Live Tables and Snowflake Dynamic Tables.

Table of Contents

How Data Observability Works

Data observability provides full visibility into your data, systems, and code so you can find and fix bad data quickly.

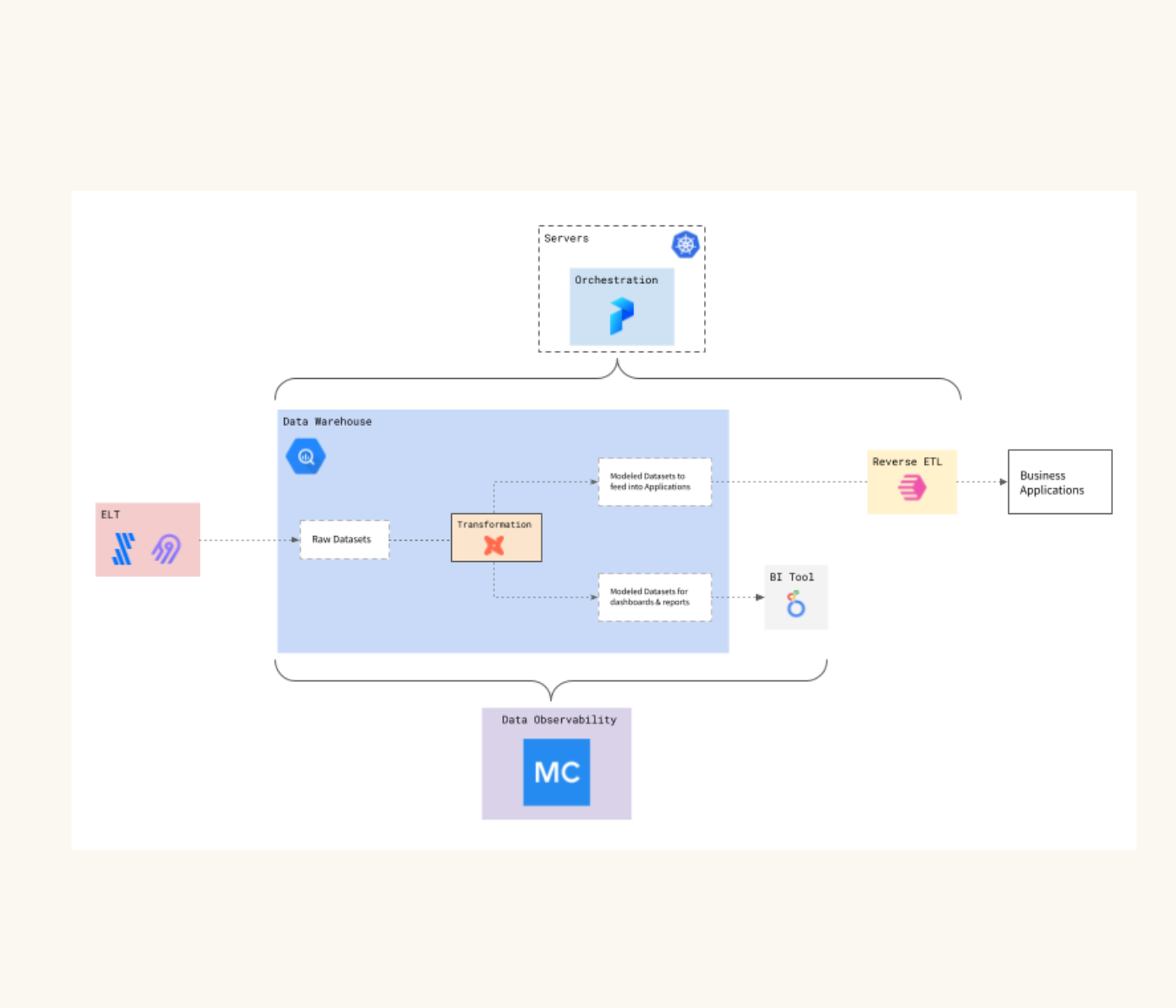

At a broad feature level this translates to machine learning monitors that understand how these aspects of your data platform normally behave and alert you when anomalies occur. These solutions also provide you the data lineage and root cause analysis insights you need to understand how to fix them.

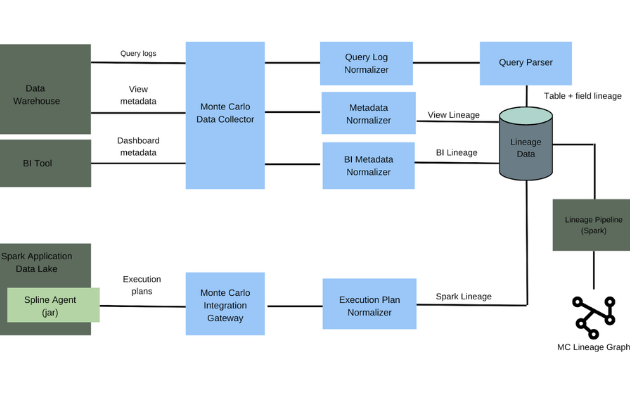

To do this, data observability solutions like Monte Carlo will piece together information from query logs, data aggregates and system metadata. Some platforms have these components centralized while others do not.

All of this is to say that how table formats are structured, and specifically the metadata, impacts how they can be monitored and observed.

Made For The Lakehouse: Iceberg and Delta

There was a point in time not too long ago that data lake vs. data warehouse was a heated and important debate. Today, these concepts have largely merged into a lakehouse architecture. Most major data cloud providers support both use cases.

Part (but not all) of that evolution is thanks to new table formats like Delta and Iceberg that provide the structure and metadata needed for advanced governance and management tasks.

Previously a data lake would need to be accompanied by a metastore like Hive to enable many of Monte Carlo’s monitors. Now, Delta or Iceberg tables are now sufficient in many environments that leverage Databricks, Snowflake, or other means of compute.

So for example, a data team may be using Snowflake with external Iceberg tables to leverage the convenience of modern metadata management while keeping the data well structured and efficient under the hood.

This team can benefit from all of Monte Carlo’s detection and resolution capabilities, but may especially appreciate schema change detections. Even though Iceberg supports in-place schema changes without needing to re-write the data file, a change in table definition can still affect various different access-patterns and downstream usages.

Now data teams can have their lake and eat it too.

Transform in place: Dynamic Tables and Delta Live Tables

Data doesn’t arrive neatly packaged with a pretty bow–it needs to be refined, aggregated, and otherwise transformed for its intended business use.

These transformations are largely done today with layers of code, third-party tools, or workflows running in the warehouse or lakehouse. Databricks Delta Live Tables and Snowflake Dynamic Tables provide another option.

In both cases these table types will transform the data or evolve the schema once they have received data without needing to create a target table. While perhaps not as suitable for more complex operations, they can be helpful tools in a data engineer’s belt.

For example, Snowflake Dynamic Tables are amazing for situations where you don’t want to define a strict schedule of ETL and materialization. The tables can automatically update based on certain criteria so you can assert the necessary freshness in the table definition itself!

However, the outcomes of all your JOINs or other complex operations often become unpredictable as you layer more logic to your pipelines. In these cases, data teams will benefit from Monte Carlo’s ability to correlate data incidents with changes in code.

Databricks Delta Live Tables are a great tool for simplifying the developer experience in Databricks. They aren’t technically a table type when you look under the hood, but rather a combination of streaming tables and materialized views that has been helpfully abstracted away.

Of course, data teams need to ensure that not only are those transformations operating as intended, but the data being transformed is correct in the first place. Monte Carlo automatic volume monitors and SQL rules alert teams to issues inherent within the data itself.

While both Dynamic Tables and Delta Live Tables can create added complexity the critical takeaway is that Monte Carlo fully supports and monitors both table types so data engineers can leverage these tools without worrying about compromising reliability.

Why This Matters

Data reliability requires full visibility into your data, data systems, and code so you can find and fix bad data quickly. Data pipelines and architectures are evolving rapidly.

When you are evaluating a data observability solution be sure to consider not only the support levels for your current systems, but their track record of innovating alongside the latest and greatest data engineering advancements.

Curious about what’s coming next for data engineering, table formats, and data observability? We’d love to talk to you!

Our promise: we will show you the product.

Product demo.

Product demo.  What is data observability?

What is data observability?  What is a data mesh--and how not to mesh it up

What is a data mesh--and how not to mesh it up  The ULTIMATE Guide To Data Lineage

The ULTIMATE Guide To Data Lineage