The (Ongoing) Evolution of Table Formats

As organizations seek greater value from their data, data architectures are evolving to meet the demand — and table formats are no exception.

Modern table formats are far more than a collection of columns and rows. Depending on the quantity of data flowing through an organization’s pipeline — or the format the data typically takes — the right modern table format can help to make workflows more efficient, increase access, extend functionality, and even offer new opportunities to activate your unstructured data.

But while the modern data stack, and how it’s structured, may be evolving, the need for reliable data is not — and that also has some real implications for your data platform.

So, how did we get here? And where are we going? In this piece, we’ll look back at where table formats began, dive deep into where we are today, consider where we’re headed tomorrow, and examine what all this change means for the perennial challenge of data quality.

Table formats: Where we started, and where we’re headed

It may sound unnecessary, but let’s start by getting on the same page: what is a data table format? The answer isn’t as simple as it used to be.

At its core, a table format is a sophisticated metadata layer that defines, organizes, and interprets multiple underlying data files. Table formats incorporate aspects like columns, rows, data types, and relationships, but can also include information about the structure of the data itself. For example, a single table named ‘Customers’ is actually an aggregation of metadata that manages and references several data files, ensuring that the table behaves as a cohesive unit.

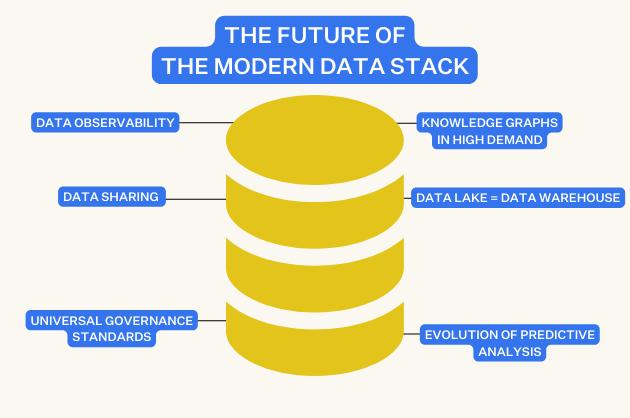
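To make the “metadata layer” idea concrete, here is a minimal sketch of a table as a metadata record that points at several data files. The class names, file paths, and row counts are hypothetical and chosen only for illustration; real table formats track far more (partitions, statistics, history), and this is not how any specific format is implemented.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class DataFile:
    """One physical file that holds a slice of the table's rows."""
    path: str
    row_count: int


@dataclass
class TableMetadata:
    """Hypothetical metadata record that makes many files behave as one table."""
    name: str
    schema: dict[str, str]  # column name -> data type
    files: list[DataFile] = field(default_factory=list)

    def add_file(self, data_file: DataFile) -> None:
        self.files.append(data_file)

    def total_rows(self) -> int:
        # The row count comes from metadata alone; no data file is opened.
        return sum(f.row_count for f in self.files)


customers = TableMetadata(
    name="Customers",
    schema={"customer_id": "bigint", "email": "string", "signup_date": "date"},
)
customers.add_file(DataFile("customers/part-0001.parquet", row_count=1200))
customers.add_file(DataFile("customers/part-0002.parquet", row_count=800))

print(customers.name, len(customers.files), customers.total_rows())
```

A query engine that reads this record sees one ‘Customers’ table, even though the rows live in two separate files.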

As the modern data stack has gained popularity — and especially as data architectures have decentralized across different platforms and services — table formats have evolved to keep up by integrating and functioning efficiently across complex, scattered environments. This evolution has happened at a breakneck pace.

The “legacy” table formats

The data landscape has evolved so quickly that table formats pioneered within the last 25 years are already achieving “legacy” status. Let’s revisit how several of those key table formats have emerged and developed over time:

- Apache Avro: Developed as part of the Hadoop project and released in 2009, Apache Avro provides efficient data serialization with a schema-based structure. It was designed to support high-volume data exchange and compatibility across different system versions, which is essential for streaming architectures such as Apache Kafka.

- Apache Hive: Initially developed at Facebook and released in 2010, Hive was designed to bring SQL capabilities to Apache Hadoop and allows SQL developers to perform queries on large data systems. It bridges the gap between traditional databases and the big data world, providing a platform for complex data transformations and batch processing in environments that require deep data analysis.

- Apache ORC (Optimized Row Columnar): In 2013, ORC was developed for the Hadoop ecosystem to improve the efficiency of data storage and retrieval. It enhances performance specifically for large-scale data processing tasks, offering advanced optimizations for superior data compression and fast data scans, essential in data warehousing and analytics applications.

- Apache Parquet: Also released in 2013 and emerging from the need to improve I/O performance for complex and nested data structures, Apache Parquet provides a columnar storage format that allows for significant reductions in storage space and faster data retrieval. Its development was geared toward analytical workloads where quick access to large volumes of data is crucial, and it was widely adopted for its performance benefits across various data processing frameworks.

- Apache Hudi: Originally developed at Uber and released in 2017, Apache Hudi (Hadoop Upserts Deletes and Incrementals) addressed the need for more efficient data processing pipelines that can handle real-time data streams. It provides functionalities like upserts, incremental loads, and efficient change data capture, which are essential for systems requiring up-to-the-minute data freshness and quick query capabilities.

- Delta Lake: Released by Databricks in 2019, Delta Lake was created to bring reliability and robustness to data lakes, incorporating ACID (Atomicity, Consistency, Isolation, Durability) transactions into Apache Spark to maintain data integrity across complex transformations and updates. This development was crucial for enabling both batch and streaming data workflows in dynamic environments, ensuring consistency and durability in big data processing.
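The upsert and incremental-load ideas mentioned for Hudi can be illustrated with a small sketch. This is not Hudi’s API: an in-memory dictionary stands in for the table, and the `upsert` and `incremental_read` helpers, the `_commit_time` field, and the sample trip records are all assumptions made for the example.

```python
def upsert(table: dict, records: list, commit_time: int) -> None:
    """Insert new keys and overwrite existing ones, stamping each row with the commit time."""
    for record in records:
        table[record["id"]] = {**record, "_commit_time": commit_time}


def incremental_read(table: dict, since: int) -> list:
    """Return only the rows written after the given commit time."""
    return [row for row in table.values() if row["_commit_time"] > since]


trips = {}
upsert(trips, [{"id": 1, "status": "requested"}, {"id": 2, "status": "requested"}], commit_time=100)
upsert(trips, [{"id": 2, "status": "completed"}, {"id": 3, "status": "requested"}], commit_time=200)

# A downstream job that last ran at commit 100 only needs what changed since then.
changed = incremental_read(trips, since=100)
print(sorted(row["id"] for row in changed))
```

The second commit updates trip 2 in place and adds trip 3, so the incremental read returns only those two rows rather than the whole table.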

The new kids on the table format block

Within the last five or six years, as data management needs have grown in complexity and scale, newer table formats have emerged. These new kids on the table format block are specifically designed to enhance data governance, management, and real-time processing capabilities:

- Apache Iceberg: Developed initially by Netflix and open-sourced as an Apache incubator project in 2018, Apache Iceberg was created to address the complexities and limitations of managing large datasets within traditional table formats. Iceberg provides advanced features such as schema evolution, which allows modifications to a table’s schema without downtime, and snapshot isolation, which ensures data consistency. Its design focuses on simplifying data table complexities and offering robustness and straightforward maintenance for massive data stores, making it a favorite for organizations looking to enhance data governance and management.

- Delta Live Tables: Released by Databricks in 2022, Delta Live Tables extends the capabilities of Delta Lake by automating and simplifying the construction of data pipelines. It streamlines the management of complex data transformations by incorporating error handling and performance optimization directly into data pipeline definitions. It is particularly effective for environments that demand high data reliability and quality, simplifying ETL processes and enhancing data operations with automated governance integrated into the system.

- Snowflake Dynamic Tables: Introduced by Snowflake in 2023, Dynamic Tables bring flexibility and near-real-time processing capabilities to the cloud data platform. Each table is defined declaratively by a SQL query, and Snowflake refreshes its contents automatically to stay within a target lag, so teams do not need to hand-build and schedule each transformation step. That makes Dynamic Tables well suited to pipelines where continuously arriving data must be transformed and made available for querying and analysis with minimal orchestration.
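Snapshot isolation, one of the Iceberg features described above, is easier to see in miniature. The sketch below is a simplified model rather than Iceberg’s actual implementation: the `Snapshot` and `Table` classes and the file paths are hypothetical, and real formats also handle concurrent commit conflicts, which are omitted here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Snapshot:
    snapshot_id: int
    files: tuple  # immutable set of data file paths visible in this snapshot


class Table:
    """Hypothetical table in which every commit produces a new immutable snapshot."""

    def __init__(self) -> None:
        self.snapshots = [Snapshot(0, ())]

    def current(self) -> Snapshot:
        return self.snapshots[-1]

    def append(self, *new_files: str) -> Snapshot:
        # A commit never mutates an existing snapshot; it adds a new one.
        latest = self.current()
        snap = Snapshot(latest.snapshot_id + 1, latest.files + new_files)
        self.snapshots.append(snap)
        return snap


table = Table()
table.append("data/a.parquet")

reader_view = table.current()   # a reader pins the snapshot it started with
table.append("data/b.parquet")  # a writer commits while the reader is still running

print(reader_view.files)
print(table.current().files)
```

The reader keeps seeing a consistent view of the table (only the first file), while new readers pick up the latest snapshot with both files.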

As these new table formats have emerged, they’ve changed the way data lakes are structured and managed.

For example, in many environments that use Databricks or Snowflake, Delta and Iceberg tables can integrate metadata management within the table format itself. That provides the structure necessary for advanced data governance and management, rather than relying on additional systems to manage metadata, and it enables comprehensive monitoring with tools like Monte Carlo’s data observability tools (more on that in a minute).

This greater autonomy means data lakes can function with greater scalability and flexibility, making it easier for organizations to manage large datasets and ensure data quality. And that matters — because these new table formats are also introducing complexity in other ways.

The rise of open formats — and staying power of data reliability

We’ve been talking about data lakes, data warehouses, and data lakehouses for a few years now — but the latest evolution is the “open lakehouse model”. While data platforms have often locked users into specific, proprietary formats, open formats like Iceberg offer a more flexible and modular approach to data architecture.

For example, Starburst’s Icehouse implementation pairs Iceberg with open query engine Trino. This combination allows users to use standard SQL statements to manage and query data lakes with the same finesse as traditional databases. Iceberg doesn’t just sit back and store data — it brings features like ACID transactions to the table, ensuring that operations like inserts, updates, and deletes are handled with precision.

With open table formats like Iceberg and open query engines like Trino, teams can mix and match the best tools for their needs without worrying about compatibility issues. These open components remove much of the complexity traditionally associated with big data by simplifying how data is stored and accessed, delivering a more robust data management experience (similar to traditional data warehouses) while retaining the scalability and cost-efficiency of modern data lakes.

Data observability: from “nice-to-have” to “must-have”

As open table formats are more widely adopted, data quality also becomes more critical to maintaining the integrity of your pipelines. For example, while Iceberg supports in-place schema changes without needing to rewrite the data files, any change in table definitions can still affect downstream consumers and access patterns.
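As a rough illustration of why schema changes matter downstream, the sketch below compares two versions of a table schema and flags the changes most likely to break consumers. The column names and types are invented, and the rule that removed or retyped columns are “breaking” is a simplifying assumption, not a rule taken from Iceberg or any observability product.

```python
def diff_schema(old: dict, new: dict) -> dict:
    """Compare two {column: type} mappings and report what changed."""
    return {
        "added": sorted(set(new) - set(old)),
        "removed": sorted(set(old) - set(new)),
        "retyped": sorted(c for c in set(old) & set(new) if old[c] != new[c]),
    }


def breaking_changes(diff: dict) -> list:
    # Assumption: removed or retyped columns can break consumers; added columns usually do not.
    return diff["removed"] + diff["retyped"]


before = {"order_id": "bigint", "amount": "int", "region": "string"}
after = {"order_id": "bigint", "amount": "decimal(10,2)", "channel": "string"}

changes = diff_schema(before, after)
print(changes)
print(breaking_changes(changes))
```

Here the table format would accept the change without rewriting any files, yet a dashboard that groups by `region` would still fail, which is the kind of gap monitoring is meant to catch.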

As it becomes easier for teams to use and interact with data, it becomes increasingly important to ensure that data is accurate, fresh, and reliable. But as the opportunities for data to break multiply, the old methods for managing data quality become less and less efficient. That’s where automated end-to-end data observability goes from a “nice-to-have” to a “must-have” for enterprise data teams.

Fortunately, modern open table formats like Iceberg simplify the infrastructure needed to support data observability in data lakes.

Traditionally, data lakes required a metastore like Hive to manage metadata and support data observability tools effectively. These metastores acted as a central catalog of information that observability tools could access to monitor, alert on, and resolve data issues. But Iceberg, for example, integrates these metadata management capabilities directly within the table format itself. Because detailed metadata about each operation and change is stored alongside the data itself, it’s easier for data observability tools like Monte Carlo to monitor data quality without the need for a separate metastore.

Consider a data team using Snowflake with external Iceberg tables. Here, Iceberg’s handling of metadata means that all necessary information about data structures, changes, and the history of data is embedded directly within the data lake. This setup allows Monte Carlo’s monitors to tap into a rich vein of metadata directly from the tables, making monitoring and anomaly detection more effective and precise.
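To show how table-level metadata can feed monitoring, here is a minimal sketch of freshness and volume checks computed from a snapshot log. It is not Monte Carlo’s implementation: the log structure, the `is_stale` and `volume_dropped` helpers, the timestamps, and the thresholds are all assumptions chosen for illustration.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical snapshot log, similar in spirit to the commit history a table format keeps.
snapshots = [
    {"committed_at": datetime(2024, 5, 1, 6, 0, tzinfo=timezone.utc), "added_rows": 10_000},
    {"committed_at": datetime(2024, 5, 2, 6, 0, tzinfo=timezone.utc), "added_rows": 10_400},
    {"committed_at": datetime(2024, 5, 3, 6, 0, tzinfo=timezone.utc), "added_rows": 1_200},
]


def is_stale(log, now, max_age):
    """Freshness check: has the table gone longer than max_age without a commit?"""
    return now - log[-1]["committed_at"] > max_age


def volume_dropped(log, threshold=0.5):
    """Volume check: did the latest commit add far fewer rows than the earlier average?"""
    history = [s["added_rows"] for s in log[:-1]]
    baseline = sum(history) / len(history)
    return log[-1]["added_rows"] < threshold * baseline


now = datetime(2024, 5, 3, 12, 0, tzinfo=timezone.utc)
print(is_stale(snapshots, now, max_age=timedelta(hours=24)))
print(volume_dropped(snapshots))
```

In this example the table is fresh, since it was updated six hours ago, but the latest load is far smaller than usual. Both signals come from metadata alone, without scanning the data files.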

The new era of metadata and observability

Modern metadata management capabilities like automated schema evolution and detailed change tracking are crucial for maintaining high standards of data quality and reliability.

Monte Carlo’s observability solutions are particularly well-equipped to work in these environments, offering comprehensive tools for detection and resolution of data issues, ensuring the integrity and usability of the data throughout its lifecycle.

Especially as organizations move towards more decentralized data architectures, like the data mesh, data observability will only become more important. When ownership is distributed across domains and self-serve infrastructures support greater access to data, it’s essential that anomalies and errors don’t fall through the cracks. And when issues are detected, notifications need to be automatically directed to the correct owner of the data so that resolution can happen swiftly.
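Routing alerts to the right owner can be as simple as a lookup keyed by domain. The sketch below assumes tables are named `domain.table` and uses made-up owner addresses and a fallback team; a real data mesh would more likely pull ownership from a catalog or lineage tool.

```python
# Hypothetical ownership registry: domain name -> contact for that domain's data.
OWNERS = {
    "sales": "sales-data@example.com",
    "marketing": "marketing-data@example.com",
}
FALLBACK_OWNER = "data-platform@example.com"


def route_alert(table_name: str, message: str) -> dict:
    """Pick the recipient from the domain prefix of a 'domain.table' name."""
    domain = table_name.split(".", 1)[0]
    return {
        "to": OWNERS.get(domain, FALLBACK_OWNER),
        "subject": f"[{table_name}] {message}",
    }


print(route_alert("sales.orders", "freshness anomaly"))
print(route_alert("finance.ledger", "schema change"))
```

The fallback owner matters as much as the mapping itself: an alert for a domain with no registered owner still lands with someone instead of falling through the cracks.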

We can’t wait to see how table formats will evolve to deliver even more flexibility and efficiency for data teams — and how data observability will support quality at every step.

If you’d like to learn more about how Monte Carlo can protect your data health in the open lakehouse — or across any layer of your data stack — contact our team today.

Frequently Asked Questions

What are the different types of table formats?

The table formats covered in this article include Apache Avro, Apache Hive, Apache ORC, Apache Parquet, Apache Hudi, Delta Lake, Apache Iceberg, Delta Live Tables, and Snowflake Dynamic Tables.

What is a data table format?

A data table format is a sophisticated metadata layer that defines, organizes, and interprets multiple underlying data files. It manages aspects like columns, rows, data types, relationships, and data structure.

How do you write a table format?

Writing a table format involves structuring metadata to define columns, rows, data types, and relationships while managing underlying data files. Modern formats often integrate governance, management, and real-time processing.
