The Smart Approach to ETL Monitoring

We’re the middle children of the data revolution, born into systems promised to be ‘set it and forget it,’ taught to believe that our pipelines would run forever. They won’t.

The first rule of data pipelines is: they will break. The second rule of data pipelines is: THEY WILL BREAK.

You could spend your nights staring at broken dashboards… or you could put an ETL monitoring strategy in place and avoid those everything-is-broken moments at three in the morning.

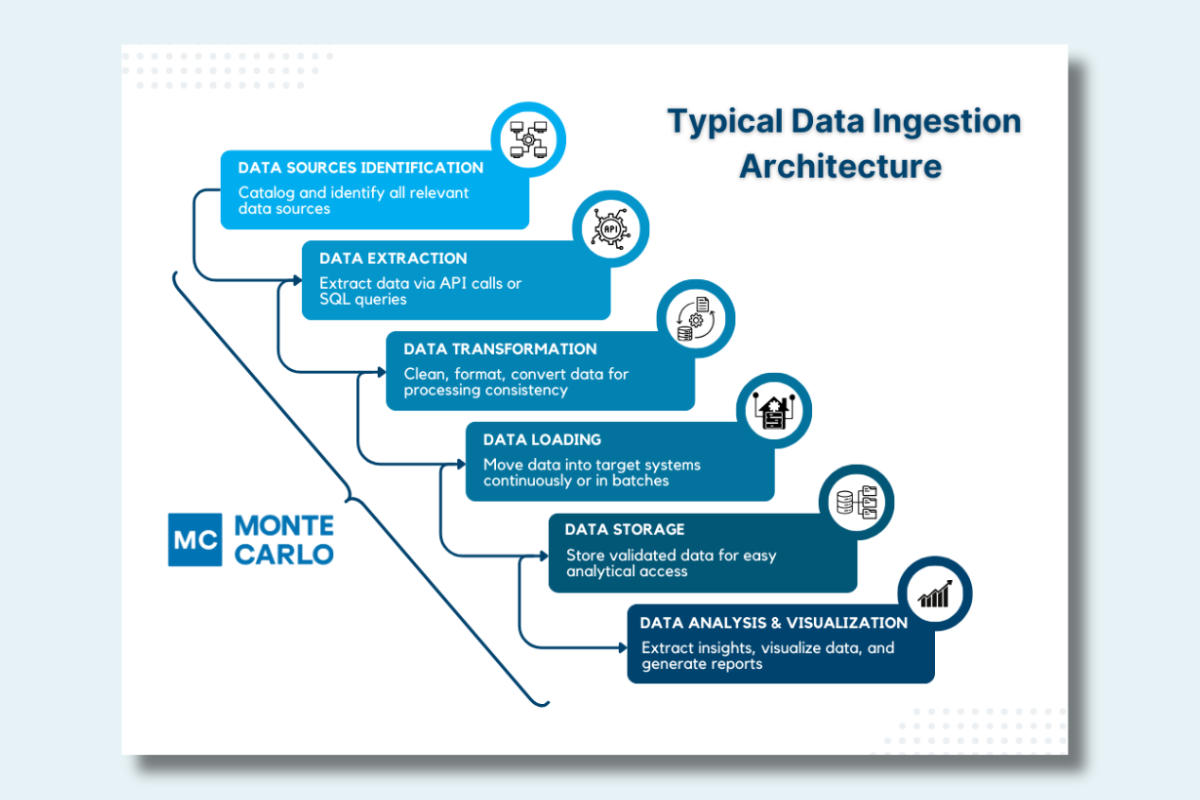

ETL monitoring is how smart engineers track and validate data pipeline health across the extraction, transformation, and loading stages so they can sleep soundly.


The Trouble with Piecemeal Monitoring

It’s something I’ve seen a hundred times: Something goes wrong with your ERP connection, and suddenly you can’t access your financial data. The knee-jerk reaction? Slap together some quick monitoring for that specific issue. Before you know it, you’ve created a monster—the foundation of a much bigger (and messier) monitoring setup than you planned for.

Think about it: your ETL pipeline probably uses a bunch of different tools and services—databases, spreadsheets, analytics platforms, you name it. If you monitor each one separately, you’ll end up with a hodgepodge of metrics that don’t relate to each other. Sure, each service might be “monitored,” but good luck getting a clear picture of your overall pipeline health.

And that’s just the metrics. The logs are a whole ‘nother headache, scattered across various systems with different formats and different levels of detail. Each new system you add only makes this puzzle more complicated.

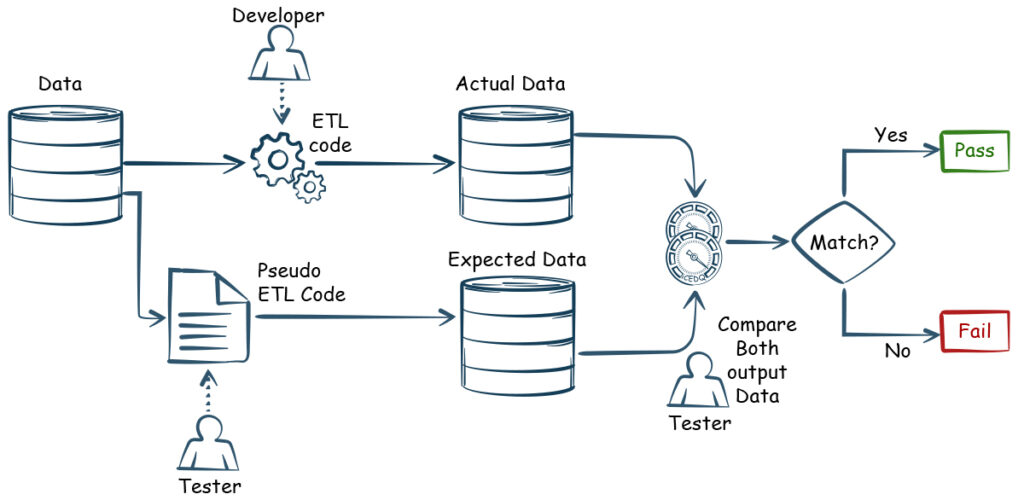

The trickiest part? The “Transform” step of ETL is often a black box. That means you’ll need to roll up your sleeves and do some engineering work to get those transformation scripts to report their progress. Worse, even if all your monitoring shows green lights, your data could still be silently corrupted or incomplete. You’ve got to actually check the data quality to know for sure.
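
To make that black box a little less opaque, here is a minimal sketch of a transformation step that reports its own progress. It uses only the Python standard library. The names `tracked_step` and `drop_incomplete_orders`, the `amount` field, and the JSON log shape are all hypothetical, chosen for illustration rather than taken from any particular ETL tool.

```python
import json
import logging
import time
from contextlib import contextmanager

logger = logging.getLogger("etl.transform")


@contextmanager
def tracked_step(name, stats):
    """Log start, outcome, and duration for one transform step (hypothetical helper)."""
    start = time.monotonic()
    logger.info(json.dumps({"step": name, "event": "start"}))
    try:
        yield stats
    except Exception as exc:
        # Report the failure, then let the exception propagate to the scheduler.
        logger.error(json.dumps({"step": name, "event": "failed", "error": str(exc)}))
        raise
    else:
        stats["duration_s"] = round(time.monotonic() - start, 3)
        logger.info(json.dumps({"step": name, "event": "done", **stats}))


def drop_incomplete_orders(rows):
    """Hypothetical transform: keep only rows that have an amount."""
    stats = {"rows_in": len(rows), "rows_out": 0}
    with tracked_step("drop_incomplete_orders", stats):
        kept = [r for r in rows if r.get("amount") is not None]
        stats["rows_out"] = len(kept)
    return kept, stats
```

Call `logging.basicConfig(level=logging.INFO)` once at startup to see the events. Because each event is a single JSON line, whatever log collector you already use can parse the row counts and durations without custom formats.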

Smart ETL Monitoring Strategies

Still want to build your own ETL monitoring? Here are some tips to keep in mind.

First up: be smart about alerts. Not every hiccup needs to wake someone up at 3 AM. And when you do send an alert, make it useful—include enough details so you (or whoever’s on call) can actually fix the problem. “Data quality dropped” isn’t helpful. “Customer transaction data from the APAC region shows 25% null values in the payment_method field” tells you exactly where to look.
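
As a rough illustration of the difference, the sketch below builds an alert message that names the dataset, the segment, the field, and the measured null rate, and stays silent when the rate is within tolerance. The function names, the 5% default threshold, and the row format (a list of dicts) are assumptions made for this example.

```python
def null_rate(rows, field):
    """Fraction of rows where `field` is missing, None, or an empty string."""
    if not rows:
        return 0.0
    missing = sum(1 for r in rows if r.get(field) in (None, ""))
    return missing / len(rows)


def build_alert(dataset, segment, field, rows, threshold=0.05):
    """Return a specific alert message, or None if the null rate is acceptable."""
    rate = null_rate(rows, field)
    if rate <= threshold:
        return None  # not every hiccup needs to wake someone up
    return (
        f"{dataset} ({segment}): {rate:.0%} null values in the {field} field "
        f"({threshold:.0%} allowed, {len(rows)} rows checked)"
    )
```

Returning `None` below the threshold keeps the paging decision in one place, so tuning what counts as alert-worthy means changing a single number.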

But effective alerts are just the beginning. The real game-changer is seeing the big picture—don’t just monitor pieces of your pipeline in isolation. Set up end-to-end monitoring that tracks your data’s full journey from source to destination. When something goes wrong, having this complete view of your data lineage makes it way easier to track down the problem.
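
One simple way to get that end-to-end view, sketched here under stated assumptions, is to tag every stage’s report with a shared run ID and then walk the run from source to destination. The `StageEvent` record, the three fixed stage names, and the rule that each stage should receive exactly what the previous one emitted are all illustrative; real pipelines often fan out or filter rows in ways that need looser checks.

```python
from dataclasses import dataclass

STAGES = ("extract", "transform", "load")


@dataclass
class StageEvent:
    """One stage's report for one pipeline run (hypothetical record shape)."""
    run_id: str
    stage: str
    rows_in: int
    rows_out: int
    ok: bool = True


def first_problem(events, run_id):
    """Follow one run through every stage and describe the first thing that looks wrong."""
    by_stage = {e.stage: e for e in events if e.run_id == run_id}
    previous_out = None
    for stage in STAGES:
        event = by_stage.get(stage)
        if event is None:
            return f"{stage}: no event recorded"
        if not event.ok:
            return f"{stage}: reported failure"
        if previous_out is not None and event.rows_in != previous_out:
            return (
                f"{stage}: received {event.rows_in} rows, "
                f"previous stage emitted {previous_out}"
            )
        previous_out = event.rows_out
    return None  # every stage reported in and the counts line up
```

The point of the shared run ID is that a problem in `load` can be traced back to the exact `extract` and `transform` runs that fed it, instead of guessing from timestamps across separate tools.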

Of course, tracking the flow isn’t enough if the data itself is wrong. That’s why you also need to build validation checks into your monitoring that will flag issues like missing records, incorrect formats, or other quality problems before they cause headaches downstream. Think of it as quality control for your data factory.
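
Here is a small sketch of what such checks might look like for a batch of rows: one check for missing records, one for empty required fields, and one for badly formatted dates. The field names `order_id` and `order_date`, and the idea that you know the expected row count up front, are assumptions for the example.

```python
from datetime import date


def validate_batch(rows, expected_count, required=("order_id", "order_date")):
    """Return a list of human-readable issues; an empty list means the batch passed."""
    issues = []

    # Missing records: fewer rows arrived than the source said it sent.
    if len(rows) < expected_count:
        issues.append(f"missing records: expected {expected_count}, got {len(rows)}")

    for i, row in enumerate(rows):
        # Required fields must be present and non-empty.
        for field in required:
            if row.get(field) in (None, ""):
                issues.append(f"row {i}: {field} is empty")

        # Incorrect formats: order_date must parse as an ISO date.
        value = row.get("order_date")
        if value not in (None, ""):
            try:
                date.fromisoformat(value)
            except (TypeError, ValueError):
                issues.append(f"row {i}: order_date {value!r} is not an ISO date")

    return issues
```

Collecting every issue instead of stopping at the first one means a single run tells you the full extent of the damage, which makes for a far more useful alert.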

With these basics in place, the next step is to stop just reacting when things break. Instead, you want to analyze historical patterns to spot potential issues before they become real problems. If your processing times are slowly creeping up, you might want to optimize before things grind to a halt.
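
To make “slowly creeping up” measurable, the sketch below fits a straight line to recent run durations and estimates how many runs are left before the trend crosses a limit you choose. This is a deliberately crude linear extrapolation, not a forecasting model, and the function names and the notion of a fixed limit are assumptions for the example.

```python
import math


def slope_per_run(durations):
    """Least-squares slope of duration against run index (growth per run)."""
    n = len(durations)
    if n < 2:
        return 0.0
    mean_x = (n - 1) / 2
    mean_y = sum(durations) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(durations))
    den = sum((x - mean_x) ** 2 for x in range(n))
    return num / den


def runs_until_limit(durations, limit):
    """Rough number of runs before the trend reaches `limit`; None if not rising."""
    slope = slope_per_run(durations)
    if slope <= 0:
        return None
    latest = durations[-1]
    if latest >= limit:
        return 0
    # Extrapolate from the most recent run using the fitted growth rate.
    return math.ceil((limit - latest) / slope)
```

For example, durations of 10, 12, 14, 16, and 18 minutes grow by 2 minutes per run, so with a 30-minute limit you have roughly 6 runs left to optimize.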

Finally, remember that data pipelines don’t exist in a vacuum—they serve the whole business. Create clear, user-friendly dashboards that help non-technical team members understand the status of your pipelines at a glance. This keeps everyone in the loop and helps avoid those dreaded “is the data ready yet?” messages. Plus, when stakeholders can see the pipeline status themselves, they’ll (hopefully!) better understand any delays or issues that pop up.

The Right ETL Monitoring Tool? Probably the One You Are Already Using

For most people, the monitoring built into their ETL tool (Airflow, Talend, Informatica, or whatever your cloud vendor provides) is good enough to start with. But that’s all it is: a start. Monitoring is just a small feature of a larger product, so it might not be as full-featured as you will one day need.

If you need to dig deeper, tools like Datadog can offer detailed performance monitoring for each piece of your ETL pipeline. And since we are talking about data systems, that’s still just scratching the surface. What if you really want to understand your data quality?

Monitoring ETL with Data Observability

This is where data observability comes in—it’s monitoring on steroids. Instead of just watching for technical hiccups, it gives you the full picture of your ETL pipeline, tracking both system health and data quality every step of the way.

Like traditional monitoring tools, data observability platforms plug right into your existing stack, whether it’s on-prem or in the cloud. They come with ready-to-use dashboards that make setup a breeze—just point them at your services and they handle the rest.

They also come with all of the features we discussed earlier, plus more! You get:

- Root cause analysis to find problems fast.

- Historical trend tracking to spot patterns before they become problems.

- ML-powered anomaly detection for the subtle issues that basic monitoring will miss.

- Great compliance features for tracking the age and access of your data.

Start ETL Monitoring with Monte Carlo

Ready to get started with data observability? Monte Carlo makes it simple.

Our automated platform monitors your pipelines end-to-end, catching issues before they affect your business. With smart AI detecting quality issues and detailed lineage tracking pinpointing their source, you’ll spend less time firefighting and more time deriving value from your data.

Want to see how Monte Carlo can transform your data reliability? Enter your email below to learn more.
