You Can’t Out-Architect Bad Data

Say it with me: bad data is inevitable.

It doesn’t care how proactive you are about writing dbt tests, how perfectly your data is modeled, or how robust your architecture is. The possibility of a major data incident (A null value? An errant schema change? A failed model?) that reverberates across the company is always lurking around the corner.

That’s not to say things like data testing, validation, data contracts, domain-driven data ownership, and data diffing don’t play a role in reducing data incidents. They do. In fact, we’ve written extensively about emerging data quality best practices like data SLAs, circuit breakers, immutable/semantic warehouses, schema change management, data asset certification, and moving toward a data mesh.

As any data practitioner will tell you, throwing technology at the problem is not a silver bullet for data quality. Used well, though, these tools can act as guardrails against some of the less obvious issues that testing or diffing wouldn’t otherwise catch.

In the wise words of our Site Reliability Engineering forebears: “Embrace risk.”

Here’s why.

Can we outwit the inevitable?

In a great piece, dbt Labs CEO Tristan Handy offers several helpful suggestions for tackling the upstream data issues that occur when software engineers push updates that impact data outputs in tightly coupled systems.

It’s a valuable response to one of our earlier posts on data contracts, and insights like these are why we’re excited for him to present at our upcoming IMPACT conference. While I agree with his overall thesis of trying to prevent as many data incidents from hitting prod as possible, I would quibble with one of his subpoints:

“Probably, one of those expectations should be: don’t make changes that break downstream stuff.

Does that feel as painfully obvious to you as it does to me? Rather than building systems that detect and alert on breakages, build systems that don’t break.”

I don’t mean to single out Tristan (who regularly shares his expertise with the community and will be joining our founders’ panel at IMPACT!), because this thought lurks in the subconscious of quite a few data practitioners: “Maybe I can build something so well that bad data never enters my pipelines and my system never breaks.”

It’s an optimistic – and tempting – proposition. After all, data engineers, and data analytics professionals more broadly, need to have a certain “get it done against all odds” mindset to even decide to enter the profession in the first place.

The odds are against us in the number and diversity of ad-hoc requests we have to field on a daily basis; the odds are against us in the growing size – and scale – of the tables we manage; and the odds are against us when it comes to understanding the growing complexity of our company’s data needs.

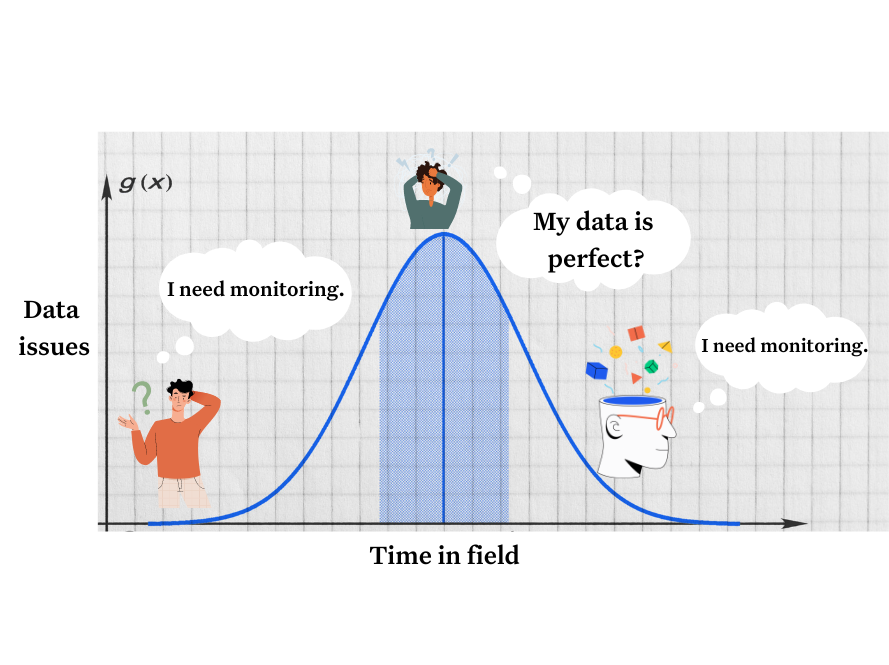

But the best data engineers and leaders understand two things: 1) they can’t anticipate all the ways data incidents can arise and 2) the consequences of these incidents are becoming more severe.

This post will enumerate a few reasons why you can’t out-architect bad data, then look at what data professionals can learn from how other industries such as cybersecurity, logistics, and software engineering have solved this problem (hint: it’s not by building perfect systems or writing perfect code).

Why you can’t out-architect bad data

While there are a near-infinite number of reasons even the most perfect architectures suffer from bad data, I’ll list a few of the most common, using real-life examples.

Human error

No architecture is perfect, because these systems involve people and people aren’t perfect. To err is human. These incidents can range from something as simple as a mistake during manual data entry to bad code being pushed into production.

I know no one at your company uses Excel, so that data couldn’t possibly enter your perfect system, and there has never been an overly rushed QA resulting in an approved pull request with bad code. But believe me, it happens in the real world.

For example, one online retail company released a bug into production that prevented users from checking out; luckily, it was caught and resolved within 45 minutes. A marketing technology company added Iterable tests that included dormant users and saw their consumption (and the costs incurred) in their customer data platform nearly triple overnight. And an online language learning platform added a problematic filter to their ETL pipeline that stopped their tables from growing at the expected rate.

All of these issues would have had negative consequences had they not been caught by some form of automation, and none of them would have been prevented by even the best-architected platform.

Third-party dependencies

With apologies to John Donne, no system is an island entire of itself. Your data ecosystem likely consists of numerous data sources that are out of your control. This can range from less mature third-party partners who didn’t hit send on their weekly report to even the most sophisticated, automated systems at your largest partners.

For example, one media company was the first to notice that its online video partner (a tech giant serving millions of users) was sending data files four hours later than usual, which prevented their ETL job from updating correctly.
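To make that concrete, here’s a minimal sketch of the kind of freshness check that surfaces a late partner feed. It assumes you know roughly when each file is expected to land; the function name `check_freshness`, the dates, and the one-hour tolerance are hypothetical choices for illustration, not any particular tool’s API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class FreshnessResult:
    late: bool         # True if the delay exceeds the tolerance
    delay: timedelta   # how far past the expected arrival time the file landed


def check_freshness(expected_at: datetime,
                    arrived_at: Optional[datetime],
                    now: datetime,
                    tolerance: timedelta) -> FreshnessResult:
    """Compare a feed's actual arrival with its expected arrival time.

    If the file hasn't arrived yet, the delay is measured up to `now`.
    Early arrivals count as zero delay.
    """
    observed = arrived_at if arrived_at is not None else now
    delay = max(observed - expected_at, timedelta(0))
    return FreshnessResult(late=delay > tolerance, delay=delay)


if __name__ == "__main__":
    expected = datetime(2023, 1, 10, 2, 0)
    # The partner's file lands four hours after its usual time.
    result = check_freshness(expected,
                             arrived_at=datetime(2023, 1, 10, 6, 0),
                             now=datetime(2023, 1, 10, 7, 0),
                             tolerance=timedelta(hours=1))
    print(result.late, result.delay)  # True 4:00:00
```

Measuring against `now` when nothing has arrived means the check fires even if the file never shows up at all, which is often the more damaging case.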

Or consider a gaming company that noticed drift in its new user acquisition data. The social media platform it advertised on had changed its delivery schedule to send data every 12 hours instead of every 24. The company’s ETL jobs were set to pick up data only once per day, so suddenly half of the campaign data being sent to them wasn’t getting processed or passed downstream, skewing their new user metrics away from “paid” and toward “organic.”
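A related check looks at delivery cadence rather than a single late file. This sketch assumes you log the arrival time of each partner delivery; it infers the typical gap between deliveries and flags when that gap drifts from what your ETL schedule expects. `observed_cadence`, `cadence_drifted`, and the 25% tolerance are hypothetical names and values.

```python
from datetime import datetime, timedelta
from statistics import median
from typing import List


def observed_cadence(arrivals: List[datetime]) -> timedelta:
    """Median gap between consecutive deliveries (needs at least two arrivals)."""
    ordered = sorted(arrivals)
    gaps = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    return median(gaps)


def cadence_drifted(arrivals: List[datetime],
                    expected: timedelta,
                    tolerance: float = 0.25) -> bool:
    """True if the observed cadence differs from `expected` by more than
    `tolerance` (a fraction of the expected interval)."""
    return abs(observed_cadence(arrivals) - expected) > expected * tolerance


if __name__ == "__main__":
    start = datetime(2023, 3, 1)
    # The partner switches from daily deliveries to one every 12 hours.
    arrivals = [start + timedelta(hours=12 * i) for i in range(6)]
    print(observed_cadence(arrivals))                      # 12:00:00
    print(cadence_drifted(arrivals, timedelta(hours=24)))  # True
```

Using the median gap keeps a single stray or retried delivery from skewing the estimate.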

So even if you have designed the perfect process for preventing upstream data issues in your own systems (which is quite the feat because as we’ve shown above even the largest tech companies have issues), you will need all of your partners to be perfect as well. Good luck with that.

New initiatives

As one of our colleagues likes to say, “data is like fashion, it’s never finished – it’s always evolving.” Even in a hypothetical world where you have finished the last brushstroke on your architectural masterpiece, there will be new requirements and use cases from the business that will necessitate change.

Even with the greatest change management processes in place, when you are breaking new ground there will be trial and error. Pushing the envelope (which great data teams do) lowers the safety margin by definition.

For example, one online retail company pushed to lower the data latency of its analytics dataset from 30 minutes to 5. The effort involved a number of moving pieces, including replicating MySQL data and Kafka streams, and along the way an issue caused a complete outage impacting more than 100 tables that populate executive dashboards.

Another example is a financial company that adopted a new financial instrument involving cryptocurrency, which required the data type to be a float rather than an integer, unlike all of its more traditional instruments.
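A lightweight contract check can catch this kind of change before values are silently truncated or rejected downstream. The sketch below assumes rows arrive as Python dicts and that the expected column types are written down somewhere; `EXPECTED_SCHEMA`, its column names, and `schema_violations` are hypothetical.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical contract for an instruments table: quantities used to be whole units.
EXPECTED_SCHEMA: Dict[str, type] = {"instrument_id": int, "quantity": int}


def schema_violations(rows: List[Dict[str, Any]],
                      expected: Dict[str, type]) -> List[Tuple[int, str, str]]:
    """Return (row index, column, observed type name) for each value that breaks the contract."""
    violations = []
    for i, row in enumerate(rows):
        for column, expected_type in expected.items():
            value = row.get(column)  # a missing column shows up as NoneType
            if not isinstance(value, expected_type):
                violations.append((i, column, type(value).__name__))
    return violations


if __name__ == "__main__":
    rows = [
        {"instrument_id": 1, "quantity": 100},   # traditional instrument
        {"instrument_id": 2, "quantity": 0.25},  # fractional crypto position
    ]
    print(schema_violations(rows, EXPECTED_SCHEMA))  # [(1, 'quantity', 'float')]
```

Note that `bool` is a subclass of `int` in Python, so this simple `isinstance` check would let booleans through; a stricter contract would compare `type(value)` directly.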

Testing & data diffing can only scale so far

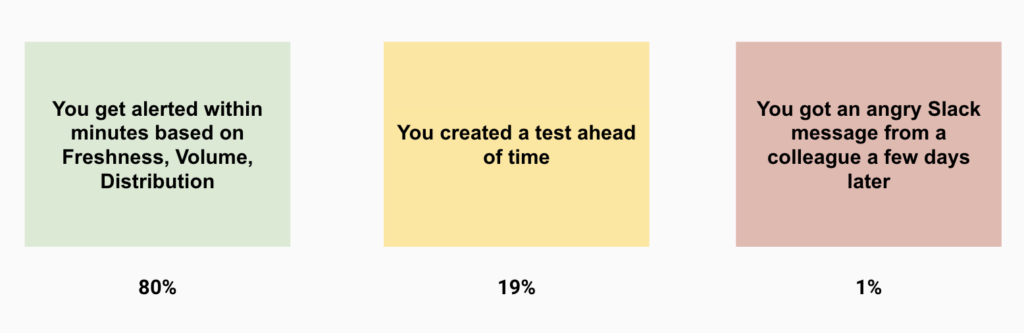

I’ve mentioned a few times now that it’s impossible to anticipate all the ways bad data can enter pipelines. To put a fine point on it, that’s why testing (or data circuit breakers, which are really testing on steroids) will only catch about 19% of your data issues.

For example, one logistics company had a critical pipeline feeding its most important, strategic customers. They monitored its quality meticulously, with more than 90 separate rules on that pipeline. And yet an issue still managed to bypass all of their tests, causing half a million rows to go missing and a bunch of nulls to pop up like weeds.
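Rule-based tests only check the conditions someone thought to write down. A complementary approach is to learn what “normal” looks like from history and flag large deviations. Here’s a minimal sketch using a simple z-score on a daily metric such as row count or null rate; the three-standard-deviation threshold, the sample numbers, and the function names are assumptions, and a real monitor would likely also need to account for seasonality and trend.

```python
import math
from statistics import mean, stdev
from typing import List


def zscore(history: List[float], today: float) -> float:
    """Standard deviations between today's value and the historical mean.

    Needs at least two historical points. A perfectly flat history makes
    any change infinitely surprising, so return +/-inf in that case.
    """
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return 0.0 if today == mu else math.copysign(math.inf, today - mu)
    return (today - mu) / sigma


def is_anomalous(history: List[float], today: float, threshold: float = 3.0) -> bool:
    """Flag values more than `threshold` standard deviations from normal."""
    return abs(zscore(history, today)) > threshold


if __name__ == "__main__":
    row_counts = [1_000_000, 1_010_000, 990_000, 1_005_000, 995_000]
    # Half a million rows go missing.
    print(is_anomalous(row_counts, 500_000))  # True
    null_rates = [0.010, 0.012, 0.011, 0.009, 0.010]
    # Nulls start popping up like weeds.
    print(is_anomalous(null_rates, 0.20))     # True
```

Neither check required anyone to anticipate the specific failure; both fall out of comparing today against the table’s own history.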

Lessons in reliability from other industries

So if the challenge of bad data is inevitable, what is the solution? The short answer is more, smarter automation.

For the longer answer and explanation, let’s take a look at how our colleagues in the cybersecurity, logistics, and software engineering worlds tackle similar issues.

Cybersecurity professionals treat security breaches as inevitable

You won’t find any credible cybersecurity vendor promising perfect security or the elimination of all risk. Even their marketers know there is no such thing.

The challenge is the same. Humans are fallible, and the defense has to be perfect every time, whereas the attacker (in our case, bad data) only has to succeed once to create negative consequences. Your systems are only as strong as your weakest link.

So what do they do? They build the best layered defense they can, and then they invest resources in incident resolution and mitigation. They plan ahead to understand and minimize the impact of the inevitable breach.

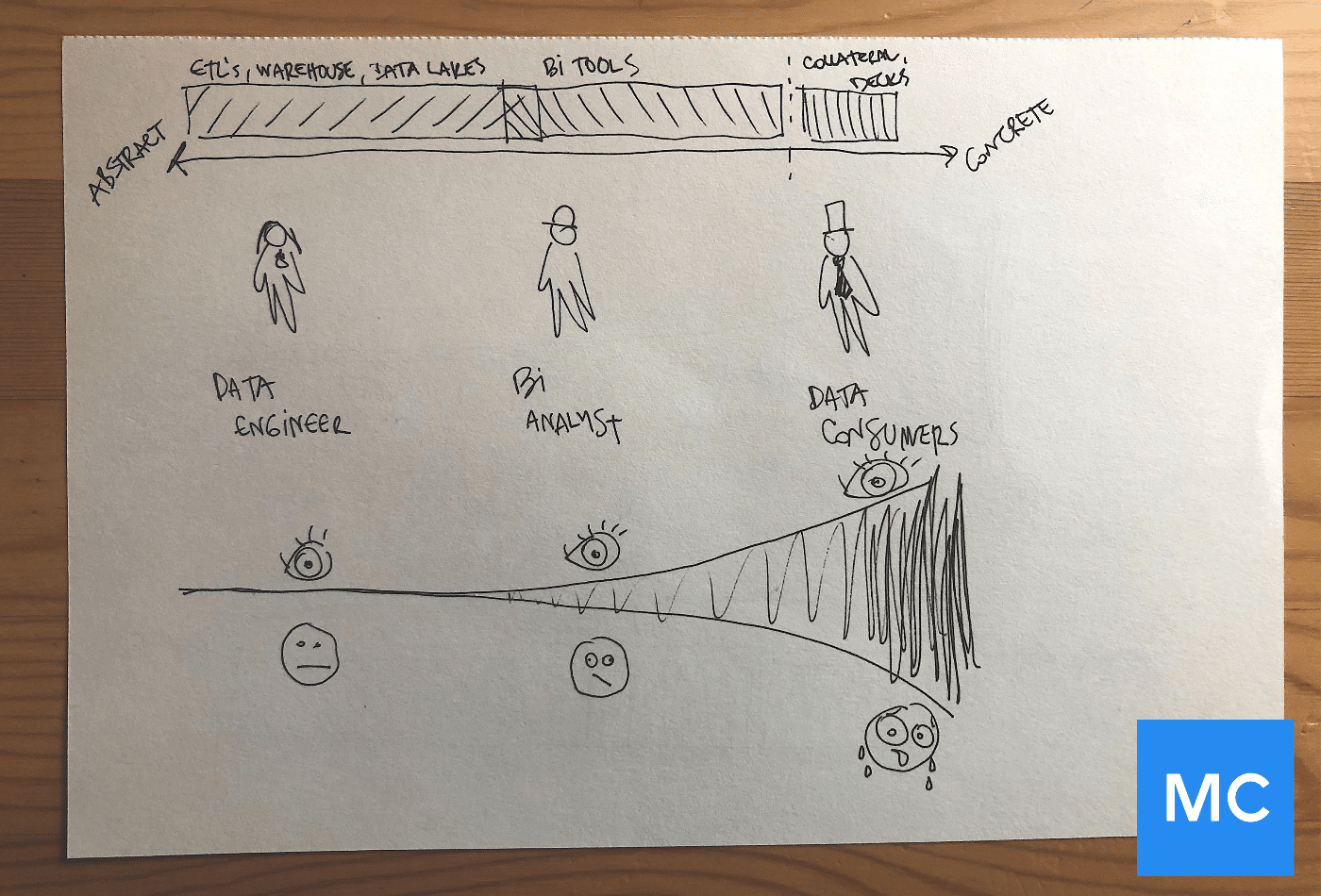

On the data side, we need to ensure we are not just investing in prevention, but in detection, alerting and resolution as well. For example, just like it would be vital for a cybersecurity professional to understand how a breach spread to compromise adjacent systems, data engineers need to understand how bad data in one dataset impacts other assets downstream including BI dashboards and the consumers using them.
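Answering “what does this bad table break?” is a graph traversal over lineage. This sketch assumes lineage is available as a mapping from each asset to the assets that read from it; the asset names in `LINEAGE` are hypothetical, and real lineage would typically be extracted from query logs, orchestration metadata, or BI tools rather than written by hand.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical lineage: each asset maps to the assets that consume it.
LINEAGE: Dict[str, List[str]] = {
    "raw.orders": ["stg.orders"],
    "stg.orders": ["mart.revenue", "mart.customer_ltv"],
    "mart.revenue": ["dashboard.exec_revenue"],
    "mart.customer_ltv": ["dashboard.marketing"],
}


def downstream_impact(lineage: Dict[str, List[str]], source: str) -> Set[str]:
    """Breadth-first search for every asset that directly or indirectly reads from `source`."""
    affected: Set[str] = set()
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for child in lineage.get(node, []):
            if child not in affected:  # visit each asset once, even if reachable by several paths
                affected.add(child)
                queue.append(child)
    return affected


if __name__ == "__main__":
    print(sorted(downstream_impact(LINEAGE, "stg.orders")))
    # ['dashboard.exec_revenue', 'dashboard.marketing', 'mart.customer_ltv', 'mart.revenue']
```

The dashboards in the result are the natural starting point for notifying the consumers who rely on them.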

Logistics best practices focus on the entire value chain

The manufacturing and logistics industries were among the first to elevate the quality assurance discipline to where it is today.

In these industries, 3.4 defects per million opportunities is considered efficient. By that measure, we still have a ways to go on our quality journey: our data shows the average organization has 70 data incidents a year for every 1,000 tables in its environment.
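For a rough sense of the gap, assume (loosely) that each table-year counts as one “opportunity” for a defect. The units aren’t truly comparable, so treat this as back-of-the-envelope arithmetic only:

```latex
\frac{70\ \text{incidents}}{1{,}000\ \text{table-years}} = 0.07 = 70{,}000\ \text{per million},
\qquad
\frac{70{,}000}{3.4} \approx 20{,}600
```

Under that loose assumption, the average data environment sits roughly four orders of magnitude away from the Six Sigma benchmark.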

Lean Six Sigma, which builds on the Six Sigma methodology that originated in the 1980s, is an approach to removing waste and defects while increasing quality, and it is still widely popular today. One common LSS practice is “value chain analysis,” which involves monitoring the core business activities that add value to a product.

It doesn’t take a wild imagination to see the data engineering equivalents:

- Inbound logistics = Extract

- Operations = Transform

- Outbound logistics = Load

- Marketing and sales = Adoption initiatives, data literacy, building data trust

- Services = Data self-service, ad-hoc analysis/reporting

The key to value chain analysis is not only to understand and monitor the primary and secondary activities at each stage, but also to understand the connections between them, which is considered the most difficult part of the process. This speaks to the need for data engineers to have automated monitoring end-to-end across their systems (not just in the data warehouse) and to keep their data lineage up to date so they understand the linkages across systems as well.
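One way to monitor the connections between stages, rather than each stage in isolation, is to reconcile row counts at every hand-off. This sketch assumes each stage is expected to roughly preserve row count (plausible for many replication and cleaning steps, not for aggregations); the stage names, the 1% `max_drop` tolerance, and `reconcile` itself are hypothetical.

```python
from typing import List, Tuple


def reconcile(stage_counts: List[Tuple[str, int]], max_drop: float = 0.01) -> List[str]:
    """Flag hand-offs where the row count falls by more than `max_drop`
    (a fraction) between consecutive pipeline stages."""
    alerts = []
    for (prev_stage, prev_n), (stage, n) in zip(stage_counts, stage_counts[1:]):
        if prev_n > 0 and (prev_n - n) / prev_n > max_drop:
            alerts.append(f"{prev_stage} -> {stage}: {prev_n} -> {n} rows")
    return alerts


if __name__ == "__main__":
    counts = [("extract", 1_000_000), ("transform", 998_000), ("load", 500_000)]
    print(reconcile(counts))  # ['transform -> load: 998000 -> 500000 rows']
```

Each stage might pass its own checks here; the problem only shows up when you look at the link between transform and load.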

Software engineers invest in automated monitoring

Software engineering is a near perfect analogy and roadmap for the evolution of data engineering, and specifically data reliability engineering.

There are three key trends that have led to software applications measuring their reliability in terms like “five nines” (99.999% uptime).

One is better collaboration. Developers used to be left in the dark once their applications were running, so the same mistakes occurred repeatedly: they lacked insight into application performance and didn’t know where to start looking to debug their code when something failed.

The solution was DevOps, a now widely adopted approach that mandates collaboration and continuous iteration between developers (Dev) and operations (Ops) teams throughout the software development and deployment process.

DataOps is a discipline that merges data engineering and data science teams to support an organization’s data needs, in a similar way to how DevOps helped scale software engineering. Similar to how DevOps applies CI/CD to software development and operations, DataOps entails a CI/CD-like, automation-first approach to building and scaling data products.

The second trend is specialization. The rise of the site reliability engineer, along with Google’s SRE handbook, has advanced reliability through improved expertise, best practices, and accountability. We are seeing this specialization start to emerge now with the rise of the data reliability engineer.

The third trend is increased investment in application performance management and observability. The explosive growth of observability vendors like Datadog and New Relic speaks volumes about how valuable software engineers find these solutions for monitoring and alerting on anomalies in real time. This investment has only increased over time (even though their applications are far more reliable than our data products), to the point that it’s odd when a team hasn’t invested in observability.

As our software colleagues will tell you, improving reliability isn’t just about adopting a new solution. Nor is it about having built the perfect system. It’s a combination of people (SREs), process (DevOps), and technology (observability).

There is no need to free solo

All of the investments your organization has made in your data team are aimed at making better decisions driven by data. Your data needs to be highly reliable to build that trust, accelerate adoption, and scale a data-first culture; otherwise, these investments become overhead instead of a revenue driver.

This is a hard mountain to climb, but there is no need to “free solo.” Here’s where monitoring, data observability, lineage, and other automated approaches to covering your data quality bases can come in handy.

It never hurts to cut yourself a little slack. Trust me: your stakeholders will thank you.
