Data Contracts and Data Observability: Whatnot’s Full Circle Journey to Data Trust

Over the last two years, data contracts have become one of most talked about (and hotly debated) topics in data engineering. And like any new — and admittedly abstract — concept, there are several different definitions of data contracts floating around on LinkedIn, Medium, and Substack.

We’ve already written an intro guide to data contracts. Now, let’s follow the fundamental principle of storytelling: show, don’t tell.

At our recent IMPACT conference, Zach Klein, software engineer for data platforms at Whatnot, pulled back the curtains on how data contracts are changing the game for his teams. He walked us through the data challenges that led Whatnot to adopting data contracts, exactly how they set up and implemented them, and even took us through an example scenario of putting one into place.

And if Whatnot can make data contracts happen, anyone can. Founded in 2019, Whatnot quickly became the most popular livestream marketplace on the internet. And they generate and manage an enormous amount of data, much of it real-time streaming.

On Whatnot, merchandise sellers conduct livestream auctions, interacting with and accepting bids from would-be buyers, and posting content on their dedicated channels. Those buyers can chat, make purchases, share reactions, and follow other users. This all takes place across Whatnot’s platform, including its iOS, Android, and web apps.

And as the cherry on top, Whatnot has one of the most robust self-serve data cultures we’ve encountered. The founders expect that all of their employees — 450 and counting — can access and query organizational data for their own analytics needs.

As you can imagine, it’s crucial that Whatnot’s data is accessible, useful, and reliable. That’s where the powerhouse combo of data contracts and data observability comes in — and where Zach’s story begins.

Table of Contents

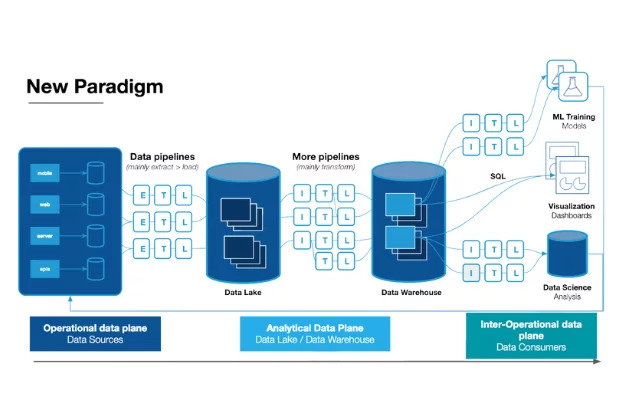

Building Whatnot’s modern data stack

First, let’s get the lay of the tech-stack landscape. When Zach joined Whatnot in June 2021, the data stack was in its infancy — what he described as its v.0 stage. But today, the company has a high-performing, modern data stack.

Whatnot is primarily an AWS shop, but Zach highlights a few key components that are especially relevant to our discussion of data contracts and observability:

Data producers: First-party data from Whatnot systems includes change data capture logs from Amazon databases and events in Segment and Confluent, while third-party data is produced by Stripe, Zendesk, Airtable, and similar tools.

Data processing: Whatnot data teams rely on Snowflake and dbt for processing, with orchestration in Dagster.

Observability and monitoring: Within their data warehouse, Monte Carlo’s data observability platform provides automated monitoring, alerting, and end-to-end lineage, while the team augments with Datadog for in-flight monitoring.

Whatnot’s data stack needs to be able to power “an incredibly rich experience,” says Zach. “All kinds of things are happening in real time — people chatting, sending reactions, things being bought and sold. It’s quite dynamic, and analytics events that represent ephemeral things happening in real time are incredibly valuable for us. The amount of events is just massive, and we have seen a huge increase in the number of events that have come through as the platform, the team, and the product have grown.”

Data quality challenges at Whatnot

And you know what they say: mo’ data, mo’ problems.

Along with Whatnot’s exponential increase in event growth came a steep increase in data quality challenges.

This includes Whatnot’s Snowflake instance, which Zach described as “a pretty bad user experience” and “a little bit chaotic”. Whatnot encourages its employees to access and use data on their own, but when new employees would go to find data in Snowflake, they would encounter hundreds of tables without any consistent naming conventions.

It wasn’t clear what each table represented, or how they were different from each other. Data consumers needed a very high level of context to understand what data meant and the nuance associated with it.

Data consumers weren’t the only employees confused by this lack of consistency. When the startup’s fast-moving engineering teams were building new features and needed to implement new logs or events, they asked the same questions, over and over:

- What do I log?

- Where do I log it?

- What fields should be attached?

Every time a new event was added, the data platform team had to have the same conversation, starting from scratch. That would slow down the organization’s velocity, and often led to the end data consumers being left out of the discussion. “It was a lose-lose all around,” Zach said.

Finally, fixing data incidents was increasingly thorny. Just like any product, the software emitting data or events would occasionally experience bugs — sending strings instead of integers, for example. And while monitoring or quality checks would usually catch those data quality incidents, the data platform team had a hard time pinpointing exactly where fixes were needed, and which person or team should be responsible for addressing them.

“You need to have a really good mechanism for communicating with the right people who have enough context to go in and fix the actual things that are broken,” Zach says.

With an enormous amount of data produced and consumed across the organization, Whatnot’s data teams needed to introduce a process to help everyone get and stay on the same page — even as the platform continued to evolve and grow.

Enter data contracts.

Introducing data contracts at Whatnot

To the uninitiated, data contracts sound like dense legal documents — but as Zach’s story shows, they’re actually a way to use process and tooling to enforce certain constraints that keep production data in a consistent, usable format for downstream users. (And, as implemented at Whatnot, without a lot of bureaucratic red tape required.)

For Zach and his team, defining data contracts came down to two key decisions: simplifying the way people could log data in their system, and providing a default mental model for everyone to use when thinking about events.

“First, we decided to invest heavily into unifying all of the different ways that you could log in our system into one data highway,” says Zach. “There’s now one way to do it — and you’re either doing it the way it should be done, or you’re not. It’s a dramatic simplification of the way you even could emit logs.”

Then, the team conformed around a default mental model for events: Actor, Action, Object. For example, an event could be “user placed order” or “system sent push notification”.

“The idea here isn’t that every single event will fit this model,” Zach says. “It’s that having this structured way of thinking can accelerate conversations. So when you find yourself needing to log new things, there’s a default path you can go down.”

How Whatnot implemented data contracts

With these frameworks in mind, Zach and his team began layering data contracts into their data infrastructure in four specific ways:

- Common interfaces across every event producer

- Common schema to serve as the source of truth for what analytics events should look like

- An extended and refactored ingestion pipeline to support the contracts

- Simplified, common exposures for consumers to easily query data

Let’s dive into each.

- A common interface

Across every possible event producer (e.g. Whatnot’s Python application, Elixir application, iOS app, Android app, web app, and ingestion pipeline) software engineers now encounter a consistent interface to interact with the logging system.

“The idea here is that whichever code base an engineer is working in, they’re going through the same general flow in each place,” says Zach. “While there are some differences in the interface across event producers, depending on the norms of the frameworks this tool lives inside of, the experience is consistent. There’s only one way of doing it.”

By constraining how engineers can add events or logs, Zach’s team ensures the right path is being followed — because it’s the only path available within the interface.

- A common schema

So what exactly is the “it” that’s consistent across event producers? The common schema.

“Having the common schema is basically the way that we define the source of truth of what goes through these interfaces,” Zach says.

His team uses Protobuf, a data definition language, to describe how data should look in a specific format. They can require certain metadata to be attached to different events that come through the system, such as descriptions, a team owner, and other critical information to keep data consistent and usable. That schema is then pushed through the common interface.

Protobuf offers the added benefit of code generation, supported for all the different event producers.

“Once you merge your pull request with a change to the schema, you get a code-generated object in whatever language you’re using,” says Zach. “You can take that generated object and go implement it in the producer — that way, there’s a really nice continuity between the schema and the interface. That’s the connective tissue.”

- An extended ingestion pipeline

Zach’s team also extended their ingestion pipeline, refactoring the way they were using their existing infrastructure and put a few interfaces in front of it.

“One of the things that went really well about this particular system is that we were able to take advantage of a lot of tools that we already had — we just started using them in a more opinionated and structured way,” Zach says.

- Common exposures

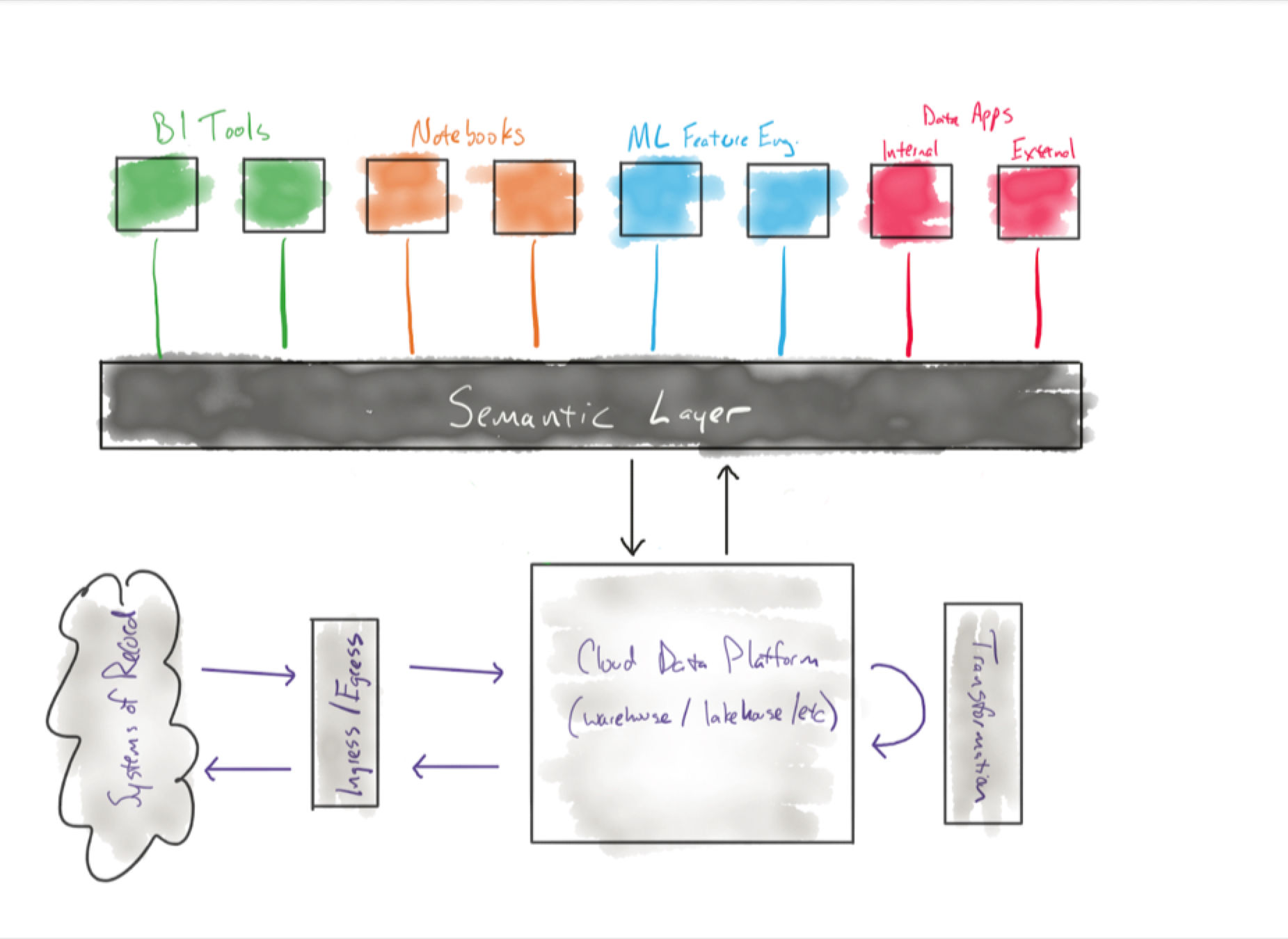

Finally, the data platform team implemented common exposures on top of the raw data — a clean, queryable layer that consumers can read much more easily than the old spiderweb of Snowflake tables. They used this exposure to consolidate their tables down to two: backend_events and frontend_events.

“By consolidating the interface down into these two very simple tables, we’ve enabled very simple queries to be able to explore different events,” says Zach. “Now, the level of context you need to be able to approach this data is much, much lower.”

Example: using data contracts to emit a UserFolllowUser event

Zach provided a specific example of how engineers follow data contracts — the typical social media functionality of a user following another user, which should emit an event. If you, a Whatnot software engineer, wanted to implement this event, you would need to follow three steps:

Step 1: Declare schema

You would open a pull request against the Git repo that holds the schema, and add a Protobuf message that follows the mental model: Actor, Action, Object. In this case, your message is UserFollowedUser.

The common interface and common schema would require you to enter certain metadata, like the description and team owner. Once you’ve entered the metadata, submitted your pull request, and had it approved and merged, you would receive the code-generated UserFollowedUser event object to carry into step two.

Step 2: Implement the producer

As Zach describes it, “You take your object, hydrate it with some data, and call it from wherever in your code makes sense.”

Each library also contains built-in testing harnesses and unit testing capabilities, allowing the data platform team to test whether events are firing when they’re supposed to, schema is correct, and data types are correct.

Additionally, the data team can leverage semantic data quality checks. “Through very complex flows in the app like different endpoint calls, we want to ensure events are submitted with particular data,” Zach says. “We can check that semantic quality via unit tests as well, and shift that level of QA upstream.”

Step 3: Query

Once the unit testing is complete, you ship it off — and the data is now available to show up, just as intended, in one of the two consolidated tables. Data consumers can now query the data to access your UserFollowedUser events.

“The data just works,” Zach says.

Because no matter where an engineer is working, they’re using the same interface and quality standards via data contracts.

The role of data testing in Whatnot’s data contracts

Along every stage of the process, data testing plays a vital role to ensure contracts are being followed and data quality remains high.

“Before anything gets implemented, because we have this common shared schema, we can run all different kinds of checks in the pull request to change the schema,” says Zach. These checks can test for basic elements like backwards compatibility or not changing certain data types or field names. “But there’s also more bespoke things with a more semantic meaning than schema. For example, which team owns which event — we can make sure there’s an enum of a team attached to each event so that we have good lineage on where to go if we notice that things are broken.”

Additionally, Whatnot’s data platform team uses unit testing frameworks to ensure quality during development, such as checking that the right events are firing with the right data. And they have built-in monitoring through their common producer interfaces to continue ensuring quality after code has shipped.

How data observability scales data reliability in Snowflake

As the data platform team began enforcing data contracts, they knew they needed a more scalable solution to data reliability than testing alone. The team invested in data observability proactively, layering Monte Carlo’s automated monitoring, alerting, and end-to-end lineage across the data warehouse to ensure data freshness, volume, and schema accuracy.

“We have Monte Carlo running in the warehouse that can keep an eye on anomalously high or low volumes of data coming through,” says Zach. “We can look at particular fields and make sure that nothing got through all the upstream steps.”

Right away, Monte Carlo began helping the data team identify and resolve issues. Even with exponential growth and increasing democratization of data, the Whatnot team has seen data incidents remain flat.

Together, data observability and data contracts are ensuring Whatnot’s data remains useful, accessible, and reliable across their entire tech stack. And when any data quality incidents do occur, the right teams are notified and looped in to fix issues as quickly as possible — minimizing the downstream impacts.

Looking ahead to data quality

As the Whatnot looks forward, they plan to increase their investment in real-time analytics to catch and address incidents like abusive behavior during a livestream, fraudulent credit card usage, or sellers encountering issues with pricing.

And since real-time analytics use cases are rare, they want to make it easier for newer engineers to work with these systems — providing Python packages or abstractions to establish patterns that anyone in the company can easily adopt.

As Whatnot’s team continues to grow, and its platform evolves, data quality will always be a necessity. Together, the powerful combination of data contracts and data observability will help ensure data is accurate, reliable, and available across the organization.

If you’re exploring data contracts but need to improve the accuracy, freshness, and completeness of your underlying data, it’s time to learn about observability.

Contact our team to ask all your data quality questions and see how Monte Carlo’s data observability platform fits into your stack.

Our promise: we will show you the product.

Product demo.

Product demo.  What is data observability?

What is data observability?  What is a data mesh--and how not to mesh it up

What is a data mesh--and how not to mesh it up  The ULTIMATE Guide To Data Lineage

The ULTIMATE Guide To Data Lineage